
How to Normal Detail Bump Derivative Map, why Mikkelsen is slightly wrong and why you should give up on calculating per vertex tangents

DISCLAIMER: Due to lack of time, the final part of this article is not finished. I initially planned on providing the proof in markdown; however, LaTeX is needed and it will take me quite a few weekends to write it up in a form presentable to others. The main idea of the proof is to show that for a specific triangle approximating a surface with Gaussian curvature != 0, it's impossible to pick a set of per-vertex tangent vectors of unit length that interpolate orthogonally to the interpolated normal and do not have a 0-length interpolation singularity.

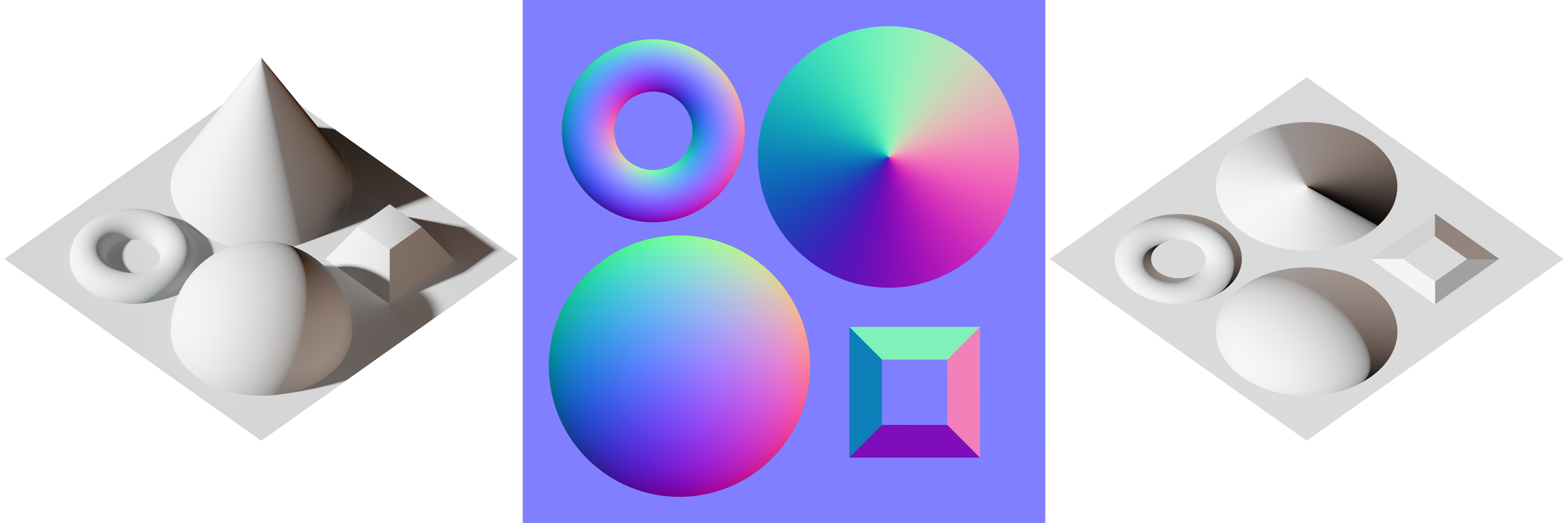

Normal, detail, bump and derivative mapping are all techniques to perturb the pixel's normal vector resulting from your surface parametrization, which in the simplest case would just be the perpendicular to the triangle being rasterized. Usually, however, this is the smooth normal interpolated from the normals specified at the vertices.

Here is a useful illustration of interpolated normals from Scratchapixel

More info at: https://www.scratchapixel.com/lessons/3d-basic-rendering/introduction-to-shading/shading-normals

Now the techniques in the title of this wiki page deal with perturbing that smooth normal (without adding more geometry) to simulate far more geometric detail than is possible with triangles. Some of the reasons why it's not possible with triangles are:

- Performance degradation (millions of triangles needed, and triangles smaller than 2x2 pixels still pay the full shading cost of a 2x2 pixel quad)

- Normal precision issues (Float32 limitations on how small triangles can be)

- Aliasing (small triangles cause subpixel errors)

The main aim of these techniques is to affect the lighting, not the geometry of the surface. In fact, if your scene has no dynamic lighting you won't see normal perturbation at all.

All of these methods work on the assumption that the surface is almost flat (so the parallax effect is negligible), and that the normal deviation is produced by very small facets. Good surfaces for this sort of approximation are: woven fabrics, tiles with grout, small wrinkles in leather and skin, grooves and patterns on furniture. The definition of "almost flat" depends on the distance from which the object will be viewed. For example, at far away distances, you may get away with bump mapping instead of parallax mapping for a wall.

I assume you are familiar with the terms: Surface Normal, Vertex Attribute Interpolation, View Space, World Space, Object/Model Space.

At the point you wish to perturb, you have a tangent space: a coordinate system with an orthonormal basis, tangent to your surface as the name implies.

The overwhelming convention is to say that the surface normal is the local Z+ axis. The remaining two basis vectors, for the local X and Y axes, are called the tangent and bitangent (sometimes called cotangent). They should really be orthonormal to each other and to the normal, but often are not, due to bad algorithms in the bakers and engines which calculate them on meshes.

This tangent space basis MUST be orthonormal for classical normal-mapping shaders which transform the light into tangent space!!!

Otherwise the value of the normal and light-vector dot product will change anisotropically.

Now if the unperturbed surface normal is (0,0,1) locally, we can define the transform of any perturbed normal back into an Xspace, where X can be View, World or Object space:

```glsl
vec3 perturbedInXSpaceCoords = mat3(tangentInXSpaceCoord, bitangentInXSpaceCoord, normalInXSpaceCoord) * locallyPerturbedNormal;
```

Note: by default, the GLSL matrix constructor takes columns.

Naturally we see that the coordinate (0,0,1) will select the last column of the matrix.

Bonus: Due to having an orthonormal basis we can transform Xspace vectors into tangent space by simply using the transpose matrix (tangent bases in rows).
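A minimal CPU-side sketch of both transforms, written in Python rather than GLSL so it can be run and checked. The basis vectors are made-up example values and the helper names are hypothetical; a row-major list of rows stands in for GLSL's column-constructed `mat3`.

```python
import math


def mat3_from_columns(t, b, n):
    """Row-major 3x3 matrix whose columns are t, b and n, like GLSL's mat3(t, b, n)."""
    return [[t[i], b[i], n[i]] for i in range(3)]


def transpose(m):
    return [[m[j][i] for j in range(3)] for i in range(3)]


def mul(m, v):
    """Matrix-vector product m * v."""
    return tuple(sum(m[i][k] * v[k] for k in range(3)) for i in range(3))


# Example orthonormal tangent frame expressed in Xspace (assumed values).
s = 1.0 / math.sqrt(2.0)
tangent = (s, s, 0.0)
bitangent = (-s, s, 0.0)
normal = (0.0, 0.0, 1.0)

tbn = mat3_from_columns(tangent, bitangent, normal)
locally_perturbed = (0.6, 0.0, 0.8)                    # unit-length tangent-space normal
perturbed_in_x = mul(tbn, locally_perturbed)           # tangent space -> Xspace
back_in_tangent = mul(transpose(tbn), perturbed_in_x)  # Xspace -> tangent space
```

Multiplying by (0,0,1) returns the `normal` column, and the transpose undoes the transform only because the basis is orthonormal.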

THE IMPORTANT PART: The choice of tangent and bitangent is not arbitrary; these Xspace vectors have to actually be aligned with the (1,0) and (0,1) directions of your texture coordinates on the surface! Otherwise the normals in the normal map (not the normal map itself) would be "arbitrarily" rotated, and you'd see things like roof shingles lit from the wrong side.

Now what differs between these normal mapping techniques is:

- How that tangentSpace to XSpace transform is expressed (sometimes the matrix multiply gets optimized out)

- How the perturbedNormal is obtained

- How mipmapping and interpolation influences the results

The last point is actually interesting, because all of the methods store exactly the same amount of information and can be converted between each other losslessly, given enough storage precision and (in cases involving Dot3 Tangent Space Normal Mapping) a consistent choice of tangent space. The only things that change are the interpolated values resulting from texture filtering.

In this image from Wikipedia, the bump map is the middle one. It could essentially be identical to a heightmap used for parallax, parallax occlusion or displacement mapping, which are related but separate effects.

Essentially, a derivative of this heightfield is calculated at runtime from the bump map using pixel differences. The theory behind it is identical to Dot3 Normal Mapping and Derivative Mapping, although in the presence of shared vertices and mipmapping the results are VASTLY different.

Note that, until fairly recently, people usually used dFdx and dFdy (ddx and ddy in HLSL) to get the differences between bump-map values in screen space, but that was rather messy: the denominator would depend on the perspective stretching of the rasterized triangle, and the difference would be "one-sided" and stay the same within a 2x2 pixel block. Some people fixed that last issue by using ddx_fine in HLSL 5.0, but most people (including Unreal Engine) just did "the right thing"(TM) and actually sampled the bump map 3 or more times per pixel to get the derivatives in texture space.

You can actually convert a bump map to a (signed) tangent-space normal map designed for a plane fairly easily:

```glsl
vec3 alongX = vec3(1.0, 0.0, (bumpMap(u + texStride, v) - bumpMap(u, v)) * bumpiness / texStride);
vec3 alongY = vec3(0.0, 1.0, (bumpMap(u, v + texStride) - bumpMap(u, v)) * bumpiness / texStride);
vec3 tangentSpaceSignedNormal = normalize(cross(alongX, alongY)); // value of the normal map at (u,v)
```

It's a bit more complicated with atlases and unwrapped meshes, which have to compensate for triangle borders and surface warping.
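The same conversion can be sketched in Python over a small heightmap stored as rows of floats. `bump_to_normal` is a hypothetical name, and the wrap-around at the texture edge assumes a tiling texture.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def bump_to_normal(height, u, v, bumpiness=1.0, tex_stride=1.0):
    """Signed tangent-space normal at texel (u, v), using the forward differences above.

    Neighbours wrap around at the edge (assumes a tiling texture); tex_stride is
    the size of one texel in UV units, i.e. the texStride of the GLSL snippet.
    """
    rows, cols = len(height), len(height[0])
    h = height[v][u]
    dh_du = (height[v][(u + 1) % cols] - h) * bumpiness / tex_stride
    dh_dv = (height[(v + 1) % rows][u] - h) * bumpiness / tex_stride
    along_x = (1.0, 0.0, dh_du)
    along_y = (0.0, 1.0, dh_dv)
    # cross(along_x, along_y) == (-dh_du, -dh_dv, 1): tilted away from "uphill".
    return normalize(cross(along_x, along_y))
```

A flat heightmap yields (0,0,1) everywhere, and a ramp rising along U tilts the normal towards -X.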

Going back the other way is more complicated: it requires solving a Poisson equation (from the gradient field to the original function), or inverting the really large sparse matrix equivalent to the above "kernel", but it can be done. (I've done it once through an FFT and an iFFT with frequency-space multiplication by the inverse of the kernel; the ringing was baaad.)

The greatest advantage of the bump map method is the ability to mix bump maps intuitively.

Dot3 normal mapping comes in two major variants, object space and tangent space. Tangent Space Normal Mapping is what is usually meant when one sees or hears "Normal Mapping" or the misused "Bump Mapping"; it's by far the longest-used and most popular technique in this century's video games.

In this image from Wikipedia, the normal map is the middle one. It encodes the artificial normal deviation from the normal of the surface being modified (taken to be Z+). Most of the time it's encoded in the RGB channels of the texture; sometimes it is re-parametrized to blend (mipmap) more gracefully or to save storage, and has its Z component dropped (it can be reconstructed as sqrt(1-x*x-y*y)).
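For comparison with the FFT route, here is a small Python sketch of the Poisson route (a swapped-in technique, not what the author did): take forward-difference gradients of a tiling heightmap, then solve laplacian(h) = div(g) with damped Jacobi iterations. The periodic grid, iteration count and damping factor are arbitrary illustrative choices, and the height is only recovered up to a constant offset.

```python
def gradients(height):
    """Forward-difference gradient field of a periodic (tiling) heightmap."""
    n, m = len(height), len(height[0])
    gx = [[height[j][(i + 1) % m] - height[j][i] for i in range(m)] for j in range(n)]
    gy = [[height[(j + 1) % n][i] - height[j][i] for i in range(m)] for j in range(n)]
    return gx, gy


def reconstruct(gx, gy, iterations=500, omega=2.0 / 3.0):
    """Recover a heightmap (up to a constant) from its gradients.

    The backward-difference divergence of a forward-difference gradient is exactly
    the 5-point Laplacian, so this solves the discrete Poisson equation.
    Negative Python indices wrap around, which gives the periodic boundary.
    """
    n, m = len(gx), len(gx[0])
    div = [[gx[j][i] - gx[j][i - 1] + gy[j][i] - gy[j - 1][i] for i in range(m)]
           for j in range(n)]
    h = [[0.0] * m for _ in range(n)]
    for _ in range(iterations):
        new = [[0.0] * m for _ in range(n)]
        for j in range(n):
            for i in range(m):
                neighbours = (h[j][(i + 1) % m] + h[j][i - 1]
                              + h[(j + 1) % n][i] + h[j - 1][i])
                jacobi = (neighbours - div[j][i]) / 4.0
                # Damping avoids the oscillating (checkerboard) mode of plain Jacobi.
                new[j][i] = (1.0 - omega) * h[j][i] + omega * jacobi
        h = new
    return h
```

Real bakers would use a faster solver (multigrid or FFT), but the equation being solved is the same.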
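A sketch of decoding the Z-dropped variant, assuming two unorm channels mapped from [0,1] to [-1,1]. That mapping is a common convention, but it depends on your texture format, and the function name is hypothetical.

```python
import math


def decode_two_channel_normal(r, g):
    """Rebuild a unit tangent-space normal from two unorm channels in [0, 1]."""
    x = r * 2.0 - 1.0
    y = g * 2.0 - 1.0
    # Clamp: quantisation and filtering can push x*x + y*y slightly above 1.
    z = math.sqrt(max(0.0, 1.0 - x * x - y * y))
    return (x, y, z)
```

Dropping Z only works because tangent-space normals always point out of the surface (z >= 0).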

The way it works is you provide it with a tangent space (more on that later), which is a kind-of coordinate system aligned to the surface of your triangle. I say kind-of because it deals only with the rotation/orientation, not with translation or scaling.

The way you get a view-space normal is by applying 3x3 matrices:

```
ViewNormalMat * WorldNormalMat * LocalTangentSpaceNormalMat * normalMapValue
```

For world-space and object-space normals, hack 1 and 2 transforms off the front respectively.

The whole sequence of ViewNormalMat*WorldNormalMat*LocalTangentSpaceNormalMat (or whatever length daisy chains of transforms) can be precomputed into one matrix which nicely enough (in theory) has its columns correspond to the per-triangle (actually per-vertex) normal, tangent and bitangent.

Object space normal mapping is dead simple: store the object-space (a.k.a. model-space) normal XYZ in a signed RGB texture, just sample that, and forget about interpolating the normal from the vertex shader to the pixel shader. It works wonderfully: no seams with shared vertices, no extra per-vertex tangent space attribute and so on.

Limitations:

- Simple detail mapping is impossible/very hard

- The pixel shader doesn't actually get much faster if you want view-space normals; you still need a mat3 transform

- If your mesh doesn't have unique UVs resulting from unwrapping (a box with the same texture on all sides) and has a lot of repetitive texturing (a long curved wall of tiles), then you will need to waste a lot of texture space

- Doesn't work with animation, skinning or deformation

Obviously simple blending is impossible here, but you could treat one normal map applied on top of another as a perturbation of the previous one.

I will only cover the case of tangent space normal mapping here; similar logic can be applied to detail mapping an object space normal map with a tangent space one on top. SIDENOTE: This is especially important (and unrelated to the choice of normal perturbation method) for blending decals into the GBuffer!!!

Most people cite "Reoriented Normal Maps" with reverence and a hint of dark voodoo magic, but anyone with half a brain sees that simple lerping of two Dot3 normal maps (tangent or object space) won't work, for the same reason Gouraud shading looks bad and the same reason you need to renormalize your normal in the pixel shader when rendering smooth objects.

The idea is that you can construct another tangent space for your pixel in the previous normal map, and use that tangent space to rotate (reorient) the current normal map's normal.

This is easy if the UV coordinates for sampling the two normal maps are the same (except for scaling), as you can simply calculate the tangent of the pixel in the previous normal map as cross(vec3(0,1,0),prevNormalMapVal).
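A Python sketch of that construction with hypothetical helper names. It builds the tangent from cross(vec3(0,1,0), prevNormalMapVal) as described and completes the frame with a second cross product. This is the simple frame construction from this paragraph, not the formula from the "Reoriented Normal Maps" article. It would only break if the previous normal were parallel to (0,1,0), which a tangent-space normal with z > 0 never is.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def reorient(prev_normal, detail_normal):
    """Express detail_normal (tangent space of the detail map) in the frame of prev_normal.

    prev_normal must be unit length with z > 0; both maps are assumed to share
    the same UV orientation (only the UV scale may differ).
    """
    tangent = normalize(cross((0.0, 1.0, 0.0), prev_normal))
    bitangent = cross(prev_normal, tangent)  # unit length: both inputs are unit and orthogonal
    return tuple(tangent[i] * detail_normal[0]
                 + bitangent[i] * detail_normal[1]
                 + prev_normal[i] * detail_normal[2] for i in range(3))
```

A flat previous normal (0,0,1) leaves the detail normal untouched, and a flat detail normal returns the previous normal. This is the identity behaviour you want from a correct composition.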

At a high level what is happening is:

```
ViewNormalMat * WorldNormalMat * LocalTriangleTangentSpaceMat * TSpaceFromNMap(nmap0value) * ... * TSpaceFromNMap(nmapjvalue) * ... * nmapNvalue
```

for N normal maps concatenated together.

Note: Order of concatenation matters, these are matrix transforms so it matters if you are putting a rust facet on a metal panel facet or a metal panel facet on a rust facet.

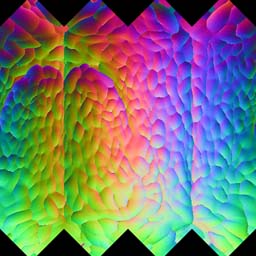

We actually apply a static small surface wrinkle detail normal map onto our dynamic FFT generated normal map for water rendering in BaW.

Because I'm too lazy to lay out the math for rotating the normals when different UV coordinates are used, I'll link to "Reoriented Normal Maps" as well: https://colinbarrebrisebois.com/2012/07/17/blending-normal-maps/#more-443

It's hard to tell a Z-dropped compressed tangent space normal map apart from a derivative map; you may even be fooled into thinking they are interchangeable (they're not).

Don't get fooled by the marketing hype: it's just plain old bump maps with the differencing operator precomputed (so only the Z components of alongX and alongY get stored).

They were invented before and went out of fashion some time ago, only to reappear again.

I initially thought it was just a bump map shader optimization, but I was made aware by one blog post that the bilinear filtering leads to problems in the differencing operator applied to a bump-map.

The main problem is that the height texture uses bilinear filtering, so the gradient between any two texels is constant. This causes large blocks to become very obvious up close. Linear interpolation (a hat function) is only C0: it can be differentiated just once, and not at the bends, so the derivative of the linearly interpolated bump map is piecewise constant and jumps at every texel.

Derivative maps store the gradient already, and it is that gradient which gets interpolated, giving us a C0 approximation to the derivatives. This solves a large blocky headache up close.

These things also blend nicely thanks to the fact that differentiation is linear (the derivative of a sum is the sum of the derivatives)! With enough precision (no clipping at 1.0), blending two derivative maps gives exactly the same result as a derivative map made from the two blended heightmaps.
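A 1D Python sketch of the difference, with hypothetical names. Heights sit at integer texel coordinates and the stored derivatives are central differences; both are simplifying assumptions. Differentiating the filtered heights gives a slope that jumps at each texel. Filtering the stored slopes gives a continuous one.

```python
def sample_linear(texels, x):
    """Linearly filtered 1D texture lookup at texel coordinate x (texel centres at integers)."""
    i = max(0, min(int(x), len(texels) - 2))
    t = x - i
    return texels[i] * (1.0 - t) + texels[i + 1] * t


def bump_map_slope(heights, x, eps=1e-4):
    """Slope of the filtered heights: constant inside each texel span, jumps at texels."""
    return (sample_linear(heights, x + eps) - sample_linear(heights, x - eps)) / (2.0 * eps)


def derivative_map(heights):
    """Precompute one slope per texel (central differences, one-sided at the ends)."""
    n = len(heights)
    slopes = [heights[1] - heights[0]]
    slopes += [(heights[i + 1] - heights[i - 1]) / 2.0 for i in range(1, n - 1)]
    slopes.append(heights[-1] - heights[-2])
    return slopes


def derivative_map_slope(slopes, x):
    """Filtering the stored slopes instead gives a continuous (C0) slope."""
    return sample_linear(slopes, x)


heights = [0.0, 0.0, 1.0, 3.0, 6.0]
slopes = derivative_map(heights)
```

Around texel 2 the bump-map slope jumps from 1 to 2, while the derivative-map slope passes smoothly through 1.5.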

The only downside is, you need to know your maximum possible derivative magnitude ahead of time and scale the derivatives by that, and store that scaling factor too!
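Both points as a small Python sketch. The unorm encoding and the `max_slope` parameter are assumptions standing in for whatever storage format and scale factor you use. Blending forward differences equals differencing blended heights, and slopes beyond the chosen maximum get clipped by the encoding.

```python
def forward_differences(heights):
    return [heights[i + 1] - heights[i] for i in range(len(heights) - 1)]


def blend(a, b, w):
    """Linear blend of two equally sized lists: (1 - w) * a + w * b."""
    return [x * (1.0 - w) + y * w for x, y in zip(a, b)]


def encode_slope(slope, max_slope):
    """Store a slope in a unorm channel; anything beyond +-max_slope is clipped."""
    return min(1.0, max(0.0, slope / max_slope * 0.5 + 0.5))


def decode_slope(value, max_slope):
    """Undo encode_slope; max_slope must be stored alongside the texture."""
    return (value - 0.5) * 2.0 * max_slope
```

If `max_slope` is chosen too small, steep features flatten out. If it is chosen too large, precision is wasted on the common gentle slopes.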

Tangent space lighting seams are actually due to discontinuities in your tangent space!

These discontinuities result from the need for the vertex tangent to always stay orthogonal to the vertex normal and the vertex normal being discontinuous.

And normal discontinuities are necessary for accurate low-poly models for sharp edges like walls, boxes, etc.

The problem arises from texture filtering and the way the particular UV unwrapping is laid out: a shared triangle edge in an unwrapping straddles some pixels belonging to another triangle or the background, so bilinear filtering mixes in some values from the other triangle or the background at the edges.

An example I drew: a flat (non-smooth) normal corner is used together with a tangent space normal map to approximate a bevel present in the high-poly geometry.

Try to figure out what the corner perturbed normal value resulting from sampling the tangent space normal map texture will be!

The issue is that different tangent spaces provide different texture colours for the same normal, which gets averaged and then neither triangle gets the edge value right.

The good news is that bump mapping and derivative mapping do not exhibit these problems, as the values stored and interpolated are, respectively, the distance to the original high-poly sample surface and the difference between neighbouring distances. These distances are very, very likely to be identical when moving over a shared triangle edge in the texture.

A Tangent Space Dot3 Normal map uses the underlying low-poly mesh tangent frame in the reorientation of the sampled normal and production of the normal map pixel, which is what produces discontinuities in the texture and why sampling the wrong pixel matters.

If you do some more thinking (or keep on reading) you will see that a triangle border of 1 or even 16 pixels won't actually solve the problem (due to mipmapping and anisotropic filtering).

The Blender wiki page on tangent space normal maps is essentially fear-mongering with regard to whether you calculate your tangent space with a normalized (made into unit length) normal and tangent: https://wiki.blender.org/index.php/Dev:Shading/Tangent_Space_Normal_Maps

However, in the pixel shader the "unnormalized" and interpolated vertex normal and tangent are used to decode the tangent space normal. The bitangent is constructed here to avoid the use of an additional interpolater and again is NOT normalized.

The normalization before or after really doesn't matter (your normal map is and should be unit length vector after decompression/dequantization).

A cross product is not a dot product: the magnitudes of its inputs have no bearing on the direction of the resulting bitangent/cotangent, especially if we normalize straight after. The cross product gives us a vector perpendicular to the two input vectors; I could literally scale the two inputs by arbitrary positive-valued noise and still get the same answer after normalization.

Remember distributivity over scalar multiplication?

Normalization introduces a divide by the magnitude.

It also mentions that the bitangent (a.k.a. cotangent) "is constructed here to avoid the use of an additional interpolater"; not only is that an optimization, it's also necessary for correctness! But more on that later...
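A quick numerical check of that claim in Python (vectors and scale factors are arbitrary example values; `bitangent` is a hypothetical helper). Scaling the normal and tangent by positive factors leaves the normalized bitangent unchanged, while a negative factor only flips its sign.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def bitangent(normal, tangent):
    return normalize(cross(normal, tangent))


def scaled(v, s):
    return tuple(c * s for c in v)


n = normalize((0.2, 0.3, 0.9))
t = normalize((1.0, -0.1, 0.0))
reference = bitangent(n, t)
noisy = bitangent(scaled(n, 2.5), scaled(t, 0.3))  # cross(2.5n, 0.3t) == 0.75 * cross(n, t)
```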

However the previous sentence in that paragraph is 100% correct.

will keep the vertex normal and the vertex tangent normalized in the vertex shader.

If the vertex shader output orientations (normal, tangent, light vector, half vector, etc.) are not unit length, then the interpolated pixel shader inputs will be vastly off the intended value, because the interpolation will compute the wrong weighted average.
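This effect is easy to reproduce in Python, with a linear blend standing in for the rasterizer's interpolation (example vectors assumed). Halfway between a vector of length 2 and a unit vector, the renormalized result leans towards the longer one.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def interpolate_direction(a, b, t):
    """What the pixel shader sees: linear interpolation, then renormalization."""
    return normalize(tuple(x * (1.0 - t) + y * t for x, y in zip(a, b)))


long_x = (2.0, 0.0, 0.0)  # unnormalized vertex shader output
unit_y = (0.0, 1.0, 0.0)

biased = interpolate_direction(long_x, unit_y, 0.5)              # ~26.6 degrees from X
correct = interpolate_direction(normalize(long_x), unit_y, 0.5)  # 45 degrees
```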

But overall this convention is not wrong in itself.

However none of this matters much as the idea of per-vertex tangent vectors falls apart with some common smoothed normal geometry.

I've proven that 3 sets of 2 perpendicular vectors defined at vertices and interpolated barycentrically across a triangle will fail to stay orthogonal to each other under some corner case conditions:

- The vertex normals are smoothed and not orthogonal to triangle edges (this is not the same as shared/indexed vertices)

- Tangent vectors are not identical at the triangle vertices (smooth cylinder approximation actually works)

You can have unique vertices with different tangent spaces, but the fact that you have a smooth group matters - same normal at the same position.

MikkTSpace seems to split vertices depending on some angle threshold of difference between tangent vectors (I say "seems" because I have not had the time to read Mikkelsen's thesis thoroughly or the entire source of mikktspace.c); however, splitting vertices brings a whole set of problems, the least of which are the bandwidth and cache penalties. These problems are exactly the reason why I advocate throwing Dot3 Tangent Space Normal Mapping into the dustbin of history, and I'll get to them in the last subsection.

Actually what mikkTSpace does is irrelevant, as I will prove that for certain geometry no per-vertex tangent precomputation algorithm can exist that will produce interpolated tangents which will stay orthogonal to interpolated normals.

So essentially whether you split or share vertices, smooth or facet, you're screwed either way.

Obviously this problem does not apply to flat shaded triangles, where the vertex normals are actually collinear with the physical triangle plane normal AND orthogonal to all triangle edges.

The optimization to avoid the interpolator is actually necessary, as the interpolated bitangent from vertex bitangents will not be able to stay orthogonal to either the tangent or the normal!

In order for tangent space normal mapping to make sense, we need to ensure that the interpolated tangent vector is orthogonal to the interpolated normal vector. So what both the renderer (output shader) and the sampler (baker) are missing is a correction to the interpolated tangent vector in the pixel shader, before the bitangent calculation:

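```glsl
// remove the component of the tangent along the normal (assumes 'normal' is unit length)
tangent = tangent - normal * dot(normal, tangent);
```

We could apply some other correction.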
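The same correction followed by the bitangent construction, as a runnable Python sketch. The helper names are hypothetical, and the example interpolated tangent is deliberately tilted out of the tangent plane.

```python
import math


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))


def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(c / length for c in v)


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def corrected_frame(normal, tangent):
    """Orthonormal (T, B, N) from an interpolated normal and a non-orthogonal tangent."""
    n = normalize(normal)
    d = dot(n, tangent)
    t = normalize(tuple(tc - nc * d for tc, nc in zip(tangent, n)))  # Gram-Schmidt step
    b = cross(n, t)  # unit length because n and t are unit and orthogonal
    return t, b, n


t, b, n = corrected_frame((0.0, 0.0, 2.0), (1.0, 0.0, 0.5))
```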

Now this means that bakers which implement normal map baking assuming mikkTSpace tangent spaces would need that step applied too (in reverse), and you would need to re-bake all your normal maps 🦆

Of the 3 available options to "solve" the problems of tangent space normal mapping, each one makes another problem worse while solving one, even when some are applied together with others.

We can calculate (next section) per pixel tangent frames with the derivative instructions, and these frames will always be orthonormal.
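A hedged Python sketch of one common way to do this. It recovers dp/du and dp/dv from the screen-space derivatives of position and UV, which is what dFdx/dFdy would return on the GPU (here they are plain inputs). It then orthonormalizes the tangent against the interpolated normal and flips the bitangent for mirrored UVs. The function name is hypothetical, and this is not necessarily the exact construction the author uses.

```python
import math


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))


def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(c / length for c in v)


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def per_pixel_frame(normal, dp_dx, dp_dy, duv_dx, duv_dy):
    """Tangent frame from screen-space derivatives of position and UV.

    dp_dx/dp_dy are position derivatives and duv_dx/duv_dy are (du, dv)
    derivatives along screen x and y, i.e. what dFdx/dFdy would give in GLSL.
    """
    du_dx, dv_dx = duv_dx
    du_dy, dv_dy = duv_dy
    det = du_dx * dv_dy - du_dy * dv_dx  # zero only for a degenerate UV mapping
    # Invert the 2x2 UV Jacobian to get the surface derivatives dp/du and dp/dv.
    dp_du = tuple((px * dv_dy - py * dv_dx) / det for px, py in zip(dp_dx, dp_dy))
    dp_dv = tuple((py * du_dx - px * du_dy) / det for px, py in zip(dp_dx, dp_dy))
    n = normalize(normal)
    d = dot(n, dp_du)
    t = normalize(tuple(a - nc * d for a, nc in zip(dp_du, n)))
    b = cross(n, t)
    if dot(b, dp_dv) < 0.0:  # mirrored UVs: keep the bitangent pointing along +v
        b = tuple(-c for c in b)
    return t, b, n
```

For a plane with p = (u, v, 0) seen through an arbitrary screen-space warp, this returns T = (1,0,0) and B = (0,1,0). With mirrored UVs (p = (-u, v, 0)), it returns T = (-1,0,0) and keeps B = (0,1,0).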

The baker would of course need to perform this transformation per-pixel of the normal map, in reverse using the low-poly mesh when transforming the sampled normals of the high-poly mesh.

However, this does not solve the problem of lighting seams, and could in fact make them worse, as this method doesn't even allow you to share the tangent vector between vertices to ensure continuity.

Adding triangle borders is essentially adding Option 3 on top of Option 1.

Having smooth vertex normals on surfaces which are supposed to be smooth is necessary and very useful because as we zoom out and away from the mesh the normal/bump/derivative map will tend towards identity (no perturbation) thanks to texture filtering.

If the underlying triangle faces are faceted then we are presented with the problem of huge change (degradation) in lighting quality especially on things that are supposed to be smooth (trees, statues, characters).

The following are not arguments and solutions to this problem:

- Simply drawing a smooth mesh without normal maps at a certain distance (abrupt change in lighting)

- Custom mip map generation algorithm (as the mip map size decreases, at a certain point it's impossible to compensate for the would-be triangle curvature, as bilinear != Phong interpolation).

You also can't smooth everything, for the same reason: a nicely normal-mapped cube will turn into a mess at a distance if it is smoothed. You will also have problems if you smooth everything, because you are guaranteed a non-orthogonal tangent without pixel-shader correction or implicit generation.

Rendering professionals cringe at the thought of un-indexed meshes and paying 2-3x more bandwidth for the same mesh, effectively rendering the pre- and post-transform vertex caches useless.

We cringe especially when we think about skinned meshes which really benefit from not running the vertex shader 3 times per every triangle.

Triangle borders are a waste of texture space, which, with fixed asset texture sizes, translates into less detail and resolution.

Also, triangle borders will not work 100% with mipmapping and trilinear filtering, as anyone who has tried atlasing will attest (the reason why Minecraft is pixelated 👎 isn't art style, it's hardware limitations).

The reason is that at minification levels, the triangle borders in each mip-map level would have to be at least 1 pixel wide to prevent leaking from the outside. This is impossible with a full mip-chain, as the top mipmap level would have to be larger than 1x1.

Also a 1 pixel border in mipmap level 4 is a 16 pixel border in the base mip map level 0, so really the border would have to be as large as the triangle itself for this whole thing to work.
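The arithmetic behind that claim, as a tiny Python sketch (hypothetical helper names, power-of-two square textures assumed):

```python
def base_level_border(border_texels, mip_level):
    """Border width in level-0 texels that shrinks to border_texels at mip_level."""
    return border_texels * 2 ** mip_level


def full_chain_border(base_size, border_texels=1):
    """Level-0 border needed to keep border_texels of padding all the way down to 1x1."""
    last_level = base_size.bit_length() - 1  # log2(base_size) for a power of two
    return base_level_border(border_texels, last_level)
```

For a 1024x1024 texture, keeping a 1-texel border down to the 1x1 level needs a 1024-texel border at level 0, which is the whole texture.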

If calculating the tangent vector per-vertex is flawed and needs a per-pixel correction, or a splitting of merged vertices, then if we could do the whole thing in the pixel shader, why shouldn't we?

The only Object Space Method is Dot3 Object Space Normal Mapping! The rest are Tangent Space!

- No Edge Tangent Space Lighting Seams (unlike Dot3 Tangent Space)

- Same compressed texture cost as Dot3 Normal Maps

- Derivative Maps compress better with DXT etc.

- Don't have to calculate and pass per vertex tangent attribute (can be calculated implicitly)

- Better deformation support than Dot3 Tangent Space

- Precomputed derivatives means less pixel sampling compared to Bump Mapping

- No blocky artefacts on magnification compared to Bump Mapping

- Next to ZERO Tangent Space Lighting Seams (unlike Dot3 TS)

- Smaller memory size for the texture

- Better deformation support than Dot3 Tangent Space (as good as derivative)

- Don't have to calculate and pass per vertex tangent attribute (can be calculated implicitly)

- No Tangent Space Lighting Seams AT ALL!

- ABSOLUTELY NO tangent space calculation (explicit or implicit)

- Most accurate perturbed normals

![(\exists , \mathbf{N}_1, \mathbf{N}_2, \mathbf{N}_3 \in \mathbb{R}^3 \setminus {(0,0,0)} )(\forall , \mathbf{T}_1, \mathbf{T}_2, \mathbf{T}_3 \in { \mathbb{R}^3 \setminus {(0,0,0)} : \mathbf{N}_i \cdot \mathbf{T}_i = 0 )} ) (\exists , c_1,c_2 \in [0,1] ) (\mathbf{\widetilde{N}} \cdot \mathbf{\widetilde{T}} \neq 0)](https://github.com/buildaworldnet/IrrlichtBAW/raw/master/site_media/wiki/normals/ExistsATriangleWithNoAlwaysValidTangents.gif)

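Where $\widetilde{\mathbf{N}} = c_1\mathbf{N}_1 + c_2\mathbf{N}_2 + c_3\mathbf{N}_3$ and $\widetilde{\mathbf{T}} = c_1\mathbf{T}_1 + c_2\mathbf{T}_2 + c_3\mathbf{T}_3$ are the interpolated normal and tangent,

And where c are barycentric coordinates, with the constraint $c_3 = 1 - c_1 - c_2 \geq 0$.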

Essentially, what this says is that there exists a triangle with valid (non-zero length and orthogonal) vertex normals, and for that triangle, any choice of valid vertex tangents will fail to keep the interpolated normal and tangent orthogonal at one or more points.

This is the most generalized way of putting it, leaving your UV unwrapping/mapping arbitrary and not placing any constraints on the length of the individual normals and tangents.

Based on an educated guess, I pick an example triangle which makes my job easier.

Let the vertex data be (in pseudo-code):

```
Vertex v1, v2, v3;
v1.normal = (1,0,0); // N_1
v2.normal = (0,1,0); // N_2
v3.normal = (0,0,1); // N_3
```

We leave the choice of UV coordinates and positions arbitrary.

You could let the position equal the normal and take this triangle to be part of an octahedron approximation of a sphere (with smoothed normals). This is actually how you'd get to the corollary that any smoothed-normal mesh approximation of a sphere with valid per-vertex tangents will have non-orthogonal normal-tangent interpolation singularities.

This choice of triangle normals makes the proof easier as we can already see that
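The equation that followed here is missing from this copy. Given the vertex normals above, the per-vertex condition $\mathbf{N}_i \cdot \mathbf{T}_i = 0$ alone already zeroes one component of each tangent. A reconstruction, with the component names $x_i, y_i, z_i$ assumed:

$$
\mathbf{T}_1 = (0,\ y_1,\ z_1), \qquad
\mathbf{T}_2 = (x_2,\ 0,\ z_2), \qquad
\mathbf{T}_3 = (x_3,\ y_3,\ 0)
$$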

As previously noted the interpolated normal and tangent need to stay orthogonal to each other. Before we tackle the entire triangle, including the interior, let us consider this constraint along triangle edges.

Let's consider the edge from v1 to v2 where logically c_3 = 0.
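A reconstruction of this step (the original formula is missing), writing the tangents as $\mathbf{T}_1 = (0, y_1, z_1)$ and $\mathbf{T}_2 = (x_2, 0, z_2)$, as forced by the vertex normals:

$$
\widetilde{\mathbf{N}} = (c_1,\ c_2,\ 0), \qquad
\widetilde{\mathbf{T}} = c_1\mathbf{T}_1 + c_2\mathbf{T}_2 = (c_2 x_2,\ c_1 y_1,\ c_1 z_1 + c_2 z_2)
$$

$$
\widetilde{\mathbf{N}} \cdot \widetilde{\mathbf{T}} = c_1 c_2 (x_2 + y_1) = 0 \quad\Longrightarrow\quad y_1 = -x_2
$$

The implication holds because $c_1 c_2 > 0$ everywhere strictly inside the edge.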

The computation of constraints resulting from the condition on edges v2<->v3 and v3<->v1 are left as an exercise to the reader.

After considering all 3 edges we have
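Reconstructed (the original formula is missing). With $\mathbf{T}_1 = (0, y_1, z_1)$, $\mathbf{T}_2 = (x_2, 0, z_2)$ and $\mathbf{T}_3 = (x_3, y_3, 0)$, the three edges give:

$$
y_1 = -x_2, \qquad z_2 = -y_3, \qquad x_3 = -z_1
$$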

We can rename and drop the suffixes from our suffixed x, y, z, as they are unique.

We can actually recognise the tangent interpolation for what it is: a matrix multiplication with the vector of barycentrics, where each matrix column is the corresponding vertex tangent.
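A reconstruction of the missing formula. Start from the edge constraints $y_1 = -x_2$, $z_2 = -y_3$, $x_3 = -z_1$, and keep $x = x_3$, $y = y_1$, $z = z_2$ as the free components. This is the naming that agrees with the $(z,x,y)$ null vector used later in the text. The vertex tangents and their interpolation then are:

$$
\mathbf{T}_1 = (0,\ y,\ -x), \quad \mathbf{T}_2 = (-y,\ 0,\ z), \quad \mathbf{T}_3 = (x,\ -z,\ 0),
\qquad
\widetilde{\mathbf{T}} = M\mathbf{c} =
\begin{pmatrix} 0 & -y & x \\ y & 0 & -z \\ -x & z & 0 \end{pmatrix}
\begin{pmatrix} c_1 \\ c_2 \\ c_3 \end{pmatrix}
$$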

Now we can finally express what our condition of orthogonality over the interior of the triangle means.
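Reconstructed (the original formula is missing). With these normals, the interpolated normal is just the barycentric vector, $\widetilde{\mathbf{N}} = \mathbf{c} = (c_1, c_2, c_3)$. Let $M$ have the columns $\mathbf{T}_1 = (0, y_1, z_1)$, $\mathbf{T}_2 = (x_2, 0, z_2)$ and $\mathbf{T}_3 = (x_3, y_3, 0)$. The interior condition then expands into the three edge terms, so it holds automatically once the edge constraints do:

$$
\widetilde{\mathbf{N}} \cdot \widetilde{\mathbf{T}} = \mathbf{c}^T M \mathbf{c}
= c_1 c_2 (x_2 + y_1) + c_2 c_3 (y_3 + z_2) + c_1 c_3 (x_3 + z_1) = 0
$$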

However there's not much we can do with that.

There's one final constraint to mention, the interpolated tangent cannot be 0 for any valid choice of barycentric coords.

This leads to the question: "what's the nullspace of M?"

By doing a simple triple product of the per-vertex tangents we see that the matrix has a determinant of 0.
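A reconstructed version of that computation (the original formula is missing). It uses the renamed tangents $\mathbf{T}_1 = (0, y, -x)$, $\mathbf{T}_2 = (-y, 0, z)$, $\mathbf{T}_3 = (x, -z, 0)$, which are the columns of $M$:

$$
\det M = \mathbf{T}_1 \cdot (\mathbf{T}_2 \times \mathbf{T}_3)
= (0,\ y,\ -x) \cdot (z^2,\ zx,\ yz) = xyz - xyz = 0,
\qquad
M \begin{pmatrix} z \\ x \\ y \end{pmatrix}
= \begin{pmatrix} -yx + xy \\ yz - zy \\ -xz + zx \end{pmatrix} = \mathbf{0}
$$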

A subspace of this nullspace actually lies along the line from (z,x,y) to the origin (trivial).

Finally, if we pick the point with barycentric coordinates c = (z,x,y)*1.0/(x+y+z), the tangent interpolation fails (the interpolated tangent is zero).

Hence, for that point to fall outside the triangle, if x>0 then either y<0 or z<0.

Sidenote 1: If we required the vertex tangents to be compressible (assume length 1), then the choice of tangents would be severely limited, also constraining the choice of triangle orientation and aspect ratio in UV space. In our example triangle's case there would be either 2 or 4 orientations.

Sidenote 2: If you take the proof a little further, you could most probably prove that you'd have to waste a lot of texture space (tangent constraints constrain UV coords too!) and tessellate heavily to avoid a faulty (either of length 0 or non-orthogonal to the interpolated normal) interpolated tangent on the surface of an ico-sphere, and possibly also that the largest shared UV island size is limited.
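A numerical sanity check of the argument in Python. The tangent parametrization is a reconstruction from the edge constraints, and the sample values of x, y, z are arbitrary. The check shows three things. The interpolated normal and tangent stay orthogonal everywhere. The tangent vanishes at c = (z,x,y)/(x+y+z) when x, y, z share a sign. With mixed signs, that point leaves the triangle.

```python
NORMALS = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))


def tangents(x, y, z):
    """Vertex tangents T1, T2, T3 after applying the three edge constraints (reconstructed)."""
    return ((0.0, y, -x), (-y, 0.0, z), (x, -z, 0.0))


def interpolate(vectors, c):
    """Barycentric interpolation c1*V1 + c2*V2 + c3*V3."""
    return tuple(sum(ci * v[k] for ci, v in zip(c, vectors)) for k in range(3))


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))


def singular_point(x, y, z):
    """Barycentrics where the interpolated tangent is zero, or None if outside the triangle."""
    s = x + y + z
    if s == 0.0:
        return None
    c = (z / s, x / s, y / s)
    return c if all(ci >= 0.0 for ci in c) else None


def barycentric_grid(steps=10):
    """All (c1, c2, c3) with c1 + c2 + c3 = 1 on a regular grid over the triangle."""
    return [(i / steps, j / steps, (steps - i - j) / steps)
            for i in range(steps + 1) for j in range(steps + 1 - i)]
```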