
Puppeteer slow execution on Cloud Functions #3120


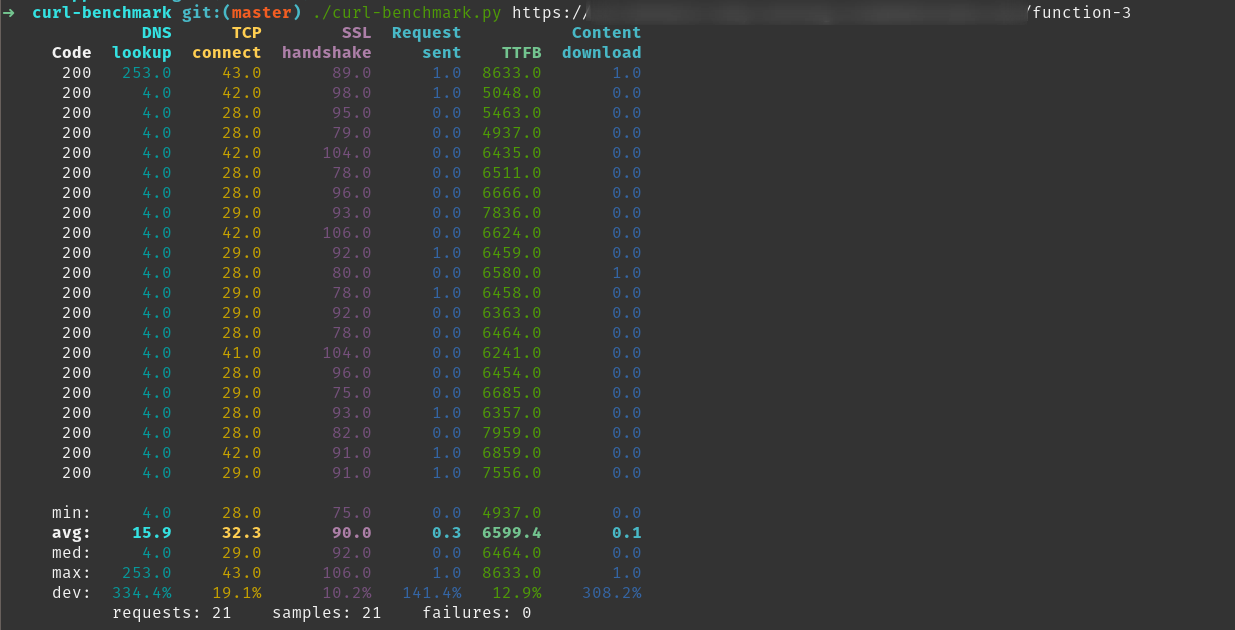

I have added some probes to measure operation times with … Here are the results for a local invocation (served by …):

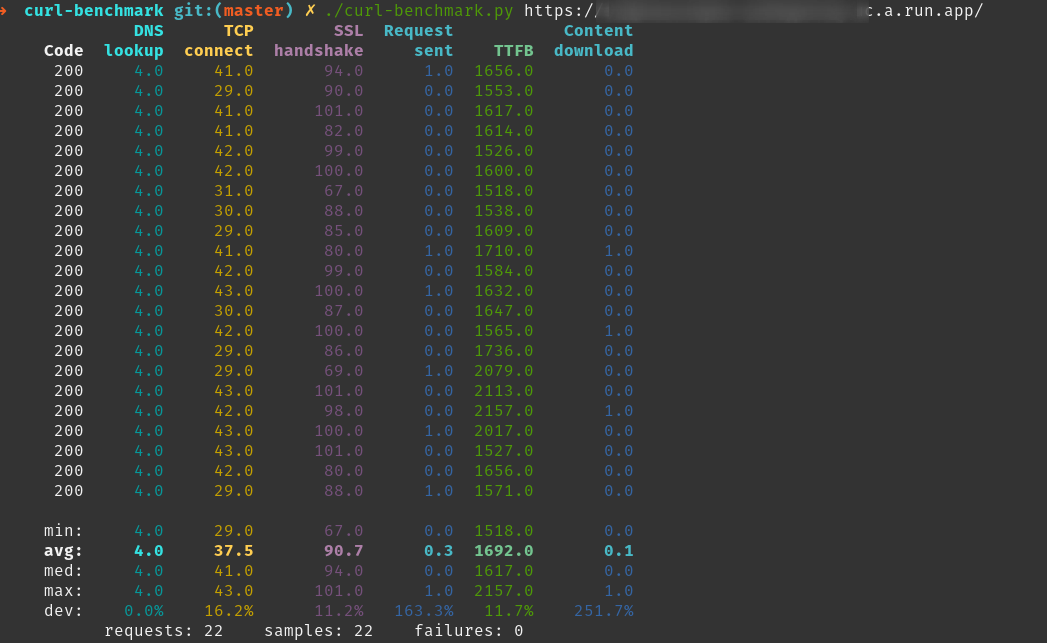

The same for an invocation on Cloud Functions:

If I compare both:

I can understand why the launch is slower on Cloud Functions, even after multiple runs, since the hardware is quite different from a mid-range desktop computer. However, what about the time differences for

@ebidel I saw you have written some experiments for Puppeteer on Cloud Functions recently. Did you experience the same behaviour? Do you have an idea about what could explain such a difference? I noticed that your nice Try Puppeteer example, deployed using a custom Docker environment, does not suffer from this issue. Taking a screenshot there requires only about 2 seconds, as in my local environment.

I have similar results on GCF. Most of the slowdown seems to happen on … BTW, things I tried:

Maybe GCF's CPU allocation really is this bad. Confirming that would require benchmarking other stuff.

@eknkc Thanks for sharing your experiments. Here are the options I tried too. None of them help. As a quick test, I switched the function memory allocation from 2GB to 1GB. Based on the pricing documentation, this moves the CPU allocation from 2.4 GHz to 1.4 GHz. With a 1GB function, taking a simple screenshot on Cloud Functions takes about 8s! The time increase seems to be a direct function of the CPU allocation :x Maybe there is a magic option to get better timing and make Puppeteer really usable in production with Cloud Functions?

Thanks for the report. I've passed this info on to the Cloud team since it's really their bug. There's a known bug with GCF at the moment where the first few requests always hit cold starts. That could be causing a lot of the slowdown. But generally, GCF does not have the same performance characteristics as something like App Engine Standard or Flex (my Try Puppeteer demo). Since you can only change the memory class, that also limits headless Chrome. Another optimization is to launch Chrome once and reuse it across requests. See the snippet from the blog post: https://cloud.google.com/blog/products/gcp/introducing-headless-chrome-support-in-cloud-functions-and-app-engine

@ebidel Thanks. Is there a public link for the issue so that I can track the progress/discussion?

Unfortunately, not one I'm aware of. Will post updates here when I hear something.

OK. Thanks :)

Currently, there's a read-only filesystem in place that's hurting performance, based on our tests. The Cloud team is working on optimizations to make things faster. Another thing to try is bundling your code so that less time is spent loading large dependencies, e.g.

Thanks @ebidel for the investigation. Speaking for my case, though, the performance issue is not related to startup but rather happens during runtime, so I assume inlining would not change that? It seems like the Chrome instance that is already running struggles with large viewports or simply with capturing the page. That operation happens to use a lot of shared memory, which might be causing the issue. Anyway, I hope we can get it resolved. Thanks again.

That's been my experience as well. Capturing full-page screenshots on large viewports at DPR > 1 is intensive. It appears to be especially bad on Linux: #736
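As a rough illustration of keeping screenshots cheap along those lines, the sketch below pins the device pixel ratio to 1, uses a moderate viewport, and captures only the visible viewport as JPEG. The viewport size and JPEG quality are arbitrary assumptions, `takeCheapScreenshot` is a hypothetical helper, and `page` is a Puppeteer `Page`.

```javascript
// Assumed settings: tune them for the pages you actually capture.
const VIEWPORT = { width: 1280, height: 800, deviceScaleFactor: 1 };

// Hypothetical helper: a cheaper screenshot of `url` using a Puppeteer Page.
async function takeCheapScreenshot(page, url) {
  // DPR 1 avoids rendering a 2x or 3x bitmap.
  await page.setViewport(VIEWPORT);
  await page.goto(url, { waitUntil: "load" });
  // Capture only the viewport instead of the whole scrollable page;
  // JPEG output is typically smaller than PNG.
  return page.screenshot({ fullPage: false, type: "jpeg", quality: 80 });
}
```

Usage: `const image = await takeCheapScreenshot(await browser.newPage(), "https://google.com");`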

This combination improves the speed a little: I'm getting loading times of 3 seconds locally and 13 seconds on GCF.

I guess some improvements have been made; I don't see long waiting times. I did use @wiliam-paradox's options, though.

I'm experiencing this slowness as well. Does anyone have suggestions on how to boost the speed on GCF? Local runs take under 500ms, while the function deployed to GCF takes 8-12 seconds.

I'm experiencing the same on AWS Lambda, where requests hit the timeout while the same requests from my local machine are fine and within the expected time.

Are you running Puppeteer in headful mode on Cloud Functions? Headless mode works fine, but I need headful mode to be able to download PDF files. =/

I'm trying to launch Chrome just once, exactly as in the snippet, but I'm getting

@joelgriffith The reported issue is not about Chrome startup time but about the full execution time. It's sad to see promotional messages posted without even reading what the issue is about.

Any update on this? GCF is executing any given Puppeteer action at perhaps 25% to 50% of my local desktop's speed.

@DimaFromCanada none that I've seen. To be clear, are you talking about total time (cold start + execution) or just running your handler code?

Cold start adds time, of course, but I'm talking about handler code running very slowly as well, particularly page.goto() calls. Compared to a local machine or a VPS, I'm finding Puppeteer on GCF to be 2x to 4x slower.


Any URL you can share? I can pass that along to the Cloud team.

@lpellegr Very nice to see this brought up. I've been facing the same pain for a while but always thought it would be closed as "won't fix". I have a quite extensive Puppeteer setup on AWS Lambda, and I've been playing around with running Puppeteer on Firebase/Google Cloud Functions for a while, even before support for Node 8.10 was announced. You can check the hack I did back then here (unmaintained). I run a proxied authentication service (a user logs in to my website, which in turn uses Puppeteer to check whether they can authenticate with the same credentials on a third-party website), where Puppeteer's execution speed directly affects the user experience. Nothing fancy like screenshots or PDFs, just a login flow. Most of my architecture lives on Firebase, so it would be very convenient for me to run everything there, Puppeteer included; this would help with the spaghetti-like fan-out architecture I'm forced to adopt due to Lambda limitations. However, the performance of GCF/FCF is so inferior to AWS Lambda that I cannot bring myself to make the switch. Even after support for specifying closer regions and Node 8.10 was released on FCF, a 2GB Cloud Function is still less performant than a 1GB Lambda: ~4s vs 10+ seconds! And Lambda even has the handicap of having to decompress the Chromium binary (0.7 seconds, see …). And from my extensive testing I can tell this is not due to cold starts. I suspect the problem is more related to differences between AWS and Google in how CPU shares and bandwidth are allocated in proportion to the amount of RAM configured. I can't be sure, obviously, but a few months ago I read a blog post (which I can no longer find) with very comprehensive tests on the big three (AWS, Google, Azure) that seemed to reflect this suspicion: AWS is more "generous" in its allocation.

Obviously, this doesn't seem to be a problem of … Switching to the no-frills approach of just writing triggers for Cloud Functions and listing dependencies (amazing work on this BTW) would make my life a lot easier. If only the performance were (a lot) better...

Google Cloud PM here. Part of the slowness comes from the fact that the filesystem on Cloud Functions is read-only. We asked the Chromium team for help to better understand how we could configure it not to try to write outside of

@steren AWS has the same limitation: you only get a fixed 500MB on … On the other hand, GCF/FCF is memory-mapped:

So even if GCF were running on HDDs and Lambda on SSDs, it still wouldn't explain the huge discrepancies in performance we are seeing.

So I just cooked up the simplest possible benchmark to test only the CPU (no disk I/O or networking). Here's what I came up with:

```javascript
const sieveOfErathosthenes = require('sieve-of-eratosthenes');

console.time('sieve');
console.log(sieveOfErathosthenes(33554432).length === 2063689);
console.timeEnd('sieve');
```

I deployed this function on both AWS Lambda and Firebase Cloud Functions (both using Node 8.10). Then I called the Lambda/Cloud Function serially and noted down the times. No warm-up was done.

The 1GB Lambda is on par with the 2GB FCF, although with much more consistent timings and no errors. Oddly enough, the errors reported on the 1GB FCF were:

Not sure why that happens intermittently for a deterministic function. As for the 2GB FCF, the errors were:

Similar results are reported in papers such as (there are quite a few!):

PS: Sorry if this is unrelated to PPTR itself; I'm just trying to suggest that CPU performance could be an important factor that explains why Puppeteer performs so badly on GCF/FCF.

@alixaxel For sure, CPU plays an important part. However, as Google team members said, CPU is not the cause of the issue here. If you look at … To convince myself, I also ran a test some weeks ago by creating a Docker image with a read/write filesystem and the puppeteer npm dependency pre-installed, all running on GCP Kubernetes with nodes that have a CPU allocation similar to a 2GB function. The results show acceptable times. I hope we get guidance soon on how to configure headless Chrome to write only to /tmp on Cloud Functions. Another solution would be to get access to the alpha containers-as-a-service feature on Cloud Functions. In that case, a simple solution could be to use a Docker image similar to the one I used with Kubernetes. Currently, it's my dream. I hope it can become a reality.
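Until there is official guidance, one partial workaround might be to point the parts of Chrome that Puppeteer lets you configure at the writable temp directory. This is an assumption, not a fix confirmed in this thread. The `userDataDir` option moves Chrome's profile into `os.tmpdir()` (`/tmp` on Cloud Functions), and `--disable-dev-shm-usage` makes Chrome create shared-memory files in a temp directory instead of `/dev/shm`. Chrome may still try to write elsewhere. `buildLaunchOptions` is a hypothetical helper name.

```javascript
const os = require("os");
const path = require("path");

// Hypothetical helper: Puppeteer launch options that keep Chrome's
// configurable on-disk state inside the OS temp directory.
function buildLaunchOptions(tmpRoot = os.tmpdir()) {
  return {
    // Profile data (cache, cookies, local storage) goes to the writable dir.
    userDataDir: path.join(tmpRoot, "puppeteer-profile"),
    args: [
      "--no-sandbox",
      "--disable-setuid-sandbox",
      // Create shared-memory files in a temp dir instead of /dev/shm.
      "--disable-dev-shm-usage",
    ],
  };
}
```

Usage: `const browser = await require("puppeteer").launch(buildLaunchOptions());`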

Thank you for the tip on speeding up Puppeteer on FCF. Is there a way to test this function locally using … I am getting the following error: … which lists troubleshooting for Linux. How are people on macOS testing this implementation?

I can confirm that this works. Before using

@alixaxel I'm curious as to why

@Robula Besides shipping with fewer resources, chrome-aws-lambda is a headless-only build. That by itself should already explain some gains, but if you read the discussion above, making

Just wanted to add some details on how to run the code below locally (on Ubuntu, in my case) and on Firebase 👇

```javascript
executablePath: await chromium.executablePath
```

First, install Chromium with your usual package manager (ex: …)

```json
{
  "app": {
    "firebase_chromium_exe_path": "/usr/bin/chromium-browser"
  }
}
```

Then in your code, you can run:

```javascript
const local_vars = functions.config()
[...]
executablePath: local_vars.app.firebase_chromium_exe_path || await chromium.executablePath
```

Try it locally with … It should now work in both environments! 🎊

I followed the trick above, but I'm getting this on macOS:

For macOS: … this makes it work locally on a Mac.

Here are my benchmarks using Cloud Run, Cloud Functions, and Kubernetes/any other server. Compared to a normal always-on server, Cloud Run is 2x slower and Cloud Functions are 6-10x slower. Tasks performed:

Benchmarks:

Kubernetes/Server

Mainly, this means high availability and no cold start. Though it defeats the purpose of serverless, the comparison is just to show how Cloud Functions are doing relative to it.

Cloud Run

It's slower, which is understandable. It also offers much more flexibility than Cloud Functions.

Cloud Functions

Never mind the cold start; it was extremely painful to watch. No matter what optimizations are applied, just opening the browser takes most of the time. If anyone runs a test with chrome-aws-lambda, that would be nice.

@entrptaher Pass me the test script and I'll see what I can do. I run https://checklyhq.com, and we do a ton of AWS Lambda-based Puppeteer runs.

I tested with puppeteersandbox (the one you run on AWS Lambda), and it reported around 1000ms (endTime - startTime). A benchmark with ./curl-benchmark.py would be much nicer to look at :D

I should also mention that all of them were allocated 512MB of RAM, and at most 250-280MB was used. At first they used less RAM, but usage increased on later deployments.

Here you go, the code. I removed as many things as I could to keep it simple.

index.js

```javascript
const puppeteer = require("puppeteer");

const scraper = async () => {
  const browser = await puppeteer.launch({
    args: [
      '--no-sandbox',
      '--disable-setuid-sandbox',
      '--disable-dev-shm-usage'
    ]
  });
  try {
    const page = await browser.newPage();
    await page.goto("https://example.com");
    return await page.title();
  } finally {
    // Close Chrome even if navigation fails, so no process is leaked.
    await browser.close();
  }
};

exports.helloWorld = async (req, res) => {
  const title = await scraper();
  res.send({ title });
};
```

package.json

```json
{
  "name": "helloworld",
  "version": "1.0.0",
  "description": "Simple hello world sample in Node",
  "main": "index.js",
  "scripts": {
    "start": "functions-framework --target=helloWorld"
  },
  "dependencies": {
    "@google-cloud/functions-framework": "^1.3.2",
    "puppeteer": "^2.0.0"
  }
}
```

Without functions-framework

Cloud Functions

In the previous benchmark, I was using functions-framework, which adds a small overhead for handling requests on port 8080. Once again, here are the results. The benchmark doesn't change much even if you remove functions-framework: it gets 2 seconds faster. However, this still doesn't justify a 4-second response, which is 4x the normal AWS response time.

Cloud Run

I removed functions-framework and added Express, which has lower overhead. We could try vanilla JS as well. Code:

```javascript
// Lives in the same file as index.js above and reuses its scraper().
const express = require('express');

const app = express();

app.get("/", async (req, res) => {
  const title = await scraper();
  res.send({ title });
});

// Cloud Run passes the port to listen on via the PORT env variable.
const port = process.env.PORT || 8080;

const server = app.listen(port, () => {
  const details = server.address();
  console.info(`server listening on ${details.port}`);
});
```
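Following up on trying vanilla JS, here is a minimal sketch of the same Cloud Run endpoint that uses only Node's built-in `http` module instead of Express. `createServer` and `start` are hypothetical names. The `scrape` parameter stands in for the `scraper()` function from `index.js` above. It is passed in so the server can be exercised without launching Chrome.

```javascript
const http = require("http");

// Hypothetical factory: `scrape` is an async function returning a page
// title, e.g. the scraper() from index.js. Only GET / is served.
function createServer(scrape) {
  return http.createServer(async (req, res) => {
    if (req.method !== "GET" || req.url !== "/") {
      res.writeHead(404);
      res.end();
      return;
    }
    try {
      const title = await scrape();
      res.writeHead(200, { "Content-Type": "application/json" });
      res.end(JSON.stringify({ title }));
    } catch (err) {
      res.writeHead(500, { "Content-Type": "text/plain" });
      res.end(String(err));
    }
  });
}

// Cloud Run provides PORT; 8080 is the usual local default.
function start(scrape) {
  const port = Number(process.env.PORT) || 8080;
  const server = createServer(scrape);
  server.listen(port, () => console.info(`server listening on ${port}`));
  return server;
}
```

Usage: call `start(scraper);` at the bottom of `index.js` in place of the Express setup.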

@entrptaher Great that you used https://puppeteersandbox.com. Even without the curl benchmark, this shows sub-1000ms execution on most runs. For those who want to give it a try, I saved this script with added timing:

I used 512MB on my run. Having 1500MB will definitely have a greater effect, but that kind of defeats the purpose of the whole benchmark on an example.com website. Can you try benchmarking with a 512MB limit? 😁

To get this to work both locally on my Mac and in production, I had to check for "local_vars.app" first, or it would crash in production. Hope this helps someone else...
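The check described here could look roughly like the following sketch. `resolveExecutablePath` is a hypothetical helper. It assumes, as in the earlier comment, that `functions.config()` contains `app.firebase_chromium_exe_path` only locally, and that the production path comes from chrome-aws-lambda's `chromium.executablePath`, which resolves to a string.

```javascript
// Hypothetical helper: use a local Chromium path from Firebase runtime
// config when it exists, otherwise fall back to the bundled binary.
// `config` is what functions.config() returns; in production it may have
// no `app` key at all, so each level is checked before it is read.
async function resolveExecutablePath(config, getBundledPath) {
  const localPath =
    config && config.app && config.app.firebase_chromium_exe_path;
  return localPath || (await getBundledPath());
}

// Usage inside a function (requires firebase-functions and chrome-aws-lambda):
// const functions = require("firebase-functions");
// const chromium = require("chrome-aws-lambda");
// const executablePath = await resolveExecutablePath(
//   functions.config(),
//   () => chromium.executablePath
// );
```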

I didn't do any deep profiling, but at least in my case the bottleneck seems to be the CPU. I used Cloud Run. When deployed with a single CPU, the duration was around 20 seconds. Once I allocated 2 CPUs, it dropped to around 10 seconds. On my computer (i7 with 4 cores) I get around 5 seconds. I published like this:

Unfortunately, it seems you cannot increase the CPUs allocated to Cloud Run containers beyond 2. And for Cloud Functions, I did not find any way to control the number of CPUs allocated.

Any updates on this from the Google team, or has anyone cracked this? I'm a first-time Puppeteer user trying to glue together puppeteer-core, puppeteer-extra, puppeteer-cluster (and apparently now chrome-aws-lambda) in Firebase Functions, and the performance is disappointing, to say the least...

I didn't see any minimum system requirements anywhere for running Puppeteer in a Cloud Function, so I tried running it in a 256MB Cloud Function for a simple HTML page. But it almost always throws a memory limit exception. Sample code: … It rarely responded in ~8s; most of the time it threw an error saying … So I debugged this with logs, and the memory issue always happens when … 1) So what is the minimum system requirement for Puppeteer in a Cloud Function? (It works with 150MB of memory using Docker locally.) Any leads?

@anand-prem The short answer is that 256MB is simply too little to run Puppeteer in Cloud Functions. I don't know exactly how your local Docker is configured, but Puppeteer needs Chromium/Chrome, which has more demanding memory requirements. Perhaps your local Docker has access to your local Chrome, which bypasses Chrome's memory needs (?). You are probably exceeding the available memory as soon as any attempt to render is made (hence the crash when setting the content).

This combination has worked the best for me! Running BackstopJS on the AWS EC2 free tier wouldn't complete the reference job until I added these parameters.

We're marking this issue as unconfirmed because it has not had recent activity and we weren't able to confirm it yet. It will be closed if no further activity occurs within the next 30 days.

We are closing this issue. If the issue still persists in the latest version of Puppeteer, please reopen the issue and update the description. We will try our best to accommodate it!

I am experimenting with Puppeteer on Cloud Functions.

After a few tests, I noticed that taking a page screenshot of https://google.com takes about 5 seconds on average when deployed on Google Cloud Functions infrastructure, while the same function tested locally (using firebase serve) takes only 2 seconds. At first sight, I thought it was a classic cold start issue. Unfortunately, after several consecutive calls, the results remain the same.

Is Puppeteer (and transitively headless Chrome) so CPU-intensive that the best '2GB' Cloud Functions class is not powerful enough to match the performance of a mid-range desktop?

Could something else explain the results I am getting? Are there any options that could help bring the execution time close to that of the local test?

Here is the code I use:

Deployed with Firebase Functions using Node.js 8.
