diff --git a/README.md b/README.md

index 8a6bdc43a..cb8808221 100644

--- a/README.md

+++ b/README.md

@@ -2,180 +2,14 @@

A structural variation discovery pipeline for Illumina short-read whole-genome sequencing (WGS) data.

-## Table of Contents

-* [Requirements](#requirements)

-* [Citation](#citation)

-* [Acknowledgements](#acknowledgements)

-* [Quickstart](#quickstart)

-* [Pipeline Overview](#overview)

- * [Cohort mode](#cohort-mode)

- * [Single-sample mode](#single-sample-mode)

- * [gCNV model](#gcnv-training-overview)

- * [Generating a reference panel](#reference-panel-generation)

-* [Module Descriptions](#descriptions)

- * [GatherSampleEvidence](#gather-sample-evidence) - Raw callers and evidence collection

- * [EvidenceQC](#evidence-qc) - Batch QC

- * [TrainGCNV](#gcnv-training) - gCNV model creation

- * [GatherBatchEvidence](#gather-batch-evidence) - Batch evidence merging, BAF generation, and depth callers

- * [ClusterBatch](#cluster-batch) - Site clustering

- * [GenerateBatchMetrics](#generate-batch-metrics) - Site metrics

- * [FilterBatch](#filter-batch) - Filtering

- * [MergeBatchSites](#merge-batch-sites) - Cross-batch site merging

- * [GenotypeBatch](#genotype-batch) - Genotyping

- * [RegenotypeCNVs](#regenotype-cnvs) - Genotype refinement (optional)

- * [MakeCohortVcf](#make-cohort-vcf) - Cross-batch integration, complex event resolution, and VCF cleanup

- * [JoinRawCalls](#join-raw-calls) - Merges unfiltered calls across batches

- * [SVConcordance](#svconcordance) - Calculates genotype concordance with raw calls

- * [FilterGenotypes](#filter-genotypes) - Performs genotype filtering

- * [AnnotateVcf](#annotate-vcf) - Functional and allele frequency annotation

- * [Module 09](#module09) - QC and Visualization

- * Additional modules - Mosaic and de novo

-* [CI/CD](#cicd)

-* [Troubleshooting](#troubleshooting)

+For technical documentation on GATK-SV, including how to run the pipeline, please refer to our website.

-

-## Requirements

-

-

-### Deployment and execution:

-* A [Google Cloud](https://cloud.google.com/) account.

-* A workflow execution system supporting the [Workflow Description Language](https://openwdl.org/) (WDL), either:

- * [Cromwell](https://github.com/broadinstitute/cromwell) (v36 or higher). A dedicated server is highly recommended.

- * or [Terra](https://terra.bio/) (note preconfigured GATK-SV workflows are not yet available for this platform)

-* Recommended: [cromshell](https://github.com/broadinstitute/cromshell) for interacting with a dedicated Cromwell server.

-* Recommended: [WOMtool](https://cromwell.readthedocs.io/en/stable/WOMtool/) for validating WDL/json files.

-

-#### Alternative backends

-Because GATK-SV has been tested only on the Google Cloud Platform (GCP), we are unable to provide specific guidance or support for other execution platforms, including HPC clusters and AWS. Contributions from the community to improve portability between backends will be considered on a case-by-case basis. We ask contributors to adhere to the following guidelines when submitting issues and pull requests:

-

-1. Code changes must be functionally equivalent on GCP backends, i.e. not result in changed output

-2. Increases to cost and runtime on GCP backends should be minimal

-3. Avoid adding new inputs and tasks to workflows. Simpler changes are more likely to be approved, e.g. small in-line changes to scripts or WDL task command sections

-4. Avoid introducing new code paths, e.g. conditional statements

-5. Additional backend-specific scripts, workflows, tests, and Dockerfiles will not be approved

-6. Changes to Dockerfiles may require extensive testing before approval

-

-We still encourage members of the community to adapt GATK-SV for non-GCP backends and share code on forked repositories. Here are some considerations:

-* Refer to Cromwell's [documentation](https://cromwell.readthedocs.io/en/stable/backends/Backends/) for configuration instructions.

-* The handling and ordering of `glob` commands may differ between platforms.

-* Shell commands that are potentially destructive to input files (e.g. `rm`, `mv`, `tabix`) can cause unexpected behavior on shared filesystems. Enabling [copy localization](https://cromwell.readthedocs.io/en/stable/Configuring/#local-filesystem-options) may help to more closely replicate the behavior on GCP.

-* For clusters that do not support Docker, Singularity is an alternative. See [Cromwell documentation on Singularity](https://cromwell.readthedocs.io/en/stable/tutorials/Containers/#singularity).

-* The GATK-SV pipeline takes advantage of the massive parallelization possible in the cloud. Local backends may not have the resources to execute all of the workflows. Workflows that use fewer resources or that are less parallelized may be more successful. For instance, some users have been able to run [GatherSampleEvidence](#gather-sample-evidence) on a SLURM cluster.

-

-### Data:

-* Illumina short-read whole-genome CRAMs or BAMs, aligned to hg38 with [bwa-mem](https://github.com/lh3/bwa). BAMs must also be indexed.

-* Family structure definitions file in [PED format](#ped-format).

-

-#### PED file format

-The PED file format is described [here](https://gatk.broadinstitute.org/hc/en-us/articles/360035531972-PED-Pedigree-format). Note that GATK-SV imposes additional requirements:

-* The file must be tab-delimited.

-* The sex column must only contain 0, 1, or 2: 1=Male, 2=Female, 0=Other/Unknown. Sex chromosome aneuploidies (detected in [EvidenceQC](#evidence-qc)) should be entered as sex = 0.

-* All family, individual, and parental IDs must conform to the [sample ID requirements](#sampleids).

-* Missing parental IDs should be entered as 0.

-* Header lines are allowed if they begin with a # character.

-To validate the PED file, you may use `src/sv-pipeline/scripts/validate_ped.py -p pedigree.ped -s samples.list`.

-

-#### Sample Exclusion

-We recommend filtering out samples with a high percentage of improperly paired reads (>10% or an outlier for your data) as technical outliers prior to running [GatherSampleEvidence](#gather-sample-evidence). A high percentage of improperly paired reads may indicate issues with library prep, degradation, or contamination. Artifactual improperly paired reads could cause incorrect SV calls, and these samples have been observed to have longer runtimes and higher compute costs for [GatherSampleEvidence](#gather-sample-evidence).

-

-#### Sample ID requirements:

-

-Sample IDs must:

-* Be unique within the cohort

-* Contain only alphanumeric characters and underscores (no dashes, whitespace, or special characters)

-

-Sample IDs should not:

-* Contain only numeric characters

-* Be a substring of another sample ID in the same cohort

-* Contain any of the following substrings: `chr`, `name`, `DEL`, `DUP`, `CPX`, `CHROM`

-

-The same requirements apply to family IDs in the PED file, as well as batch IDs and the cohort ID provided as workflow inputs.

-

-Sample IDs are provided to [GatherSampleEvidence](#gather-sample-evidence) directly and need not match sample names from the BAM/CRAM headers. `GetSampleID.wdl` can be used to fetch BAM sample IDs and also generates a set of alternate IDs that are considered safe for this pipeline; alternatively, [this script](https://github.com/talkowski-lab/gnomad_sv_v3/blob/master/sample_id/convert_sample_ids.py) transforms a list of sample IDs to fit these requirements. Currently, sample IDs can be replaced again in [GatherBatchEvidence](#gather-batch-evidence) - to do so, set the parameter `rename_samples = True` and provide updated sample IDs via the `samples` parameter.

-

-The following inputs will need to be updated with the transformed sample IDs:

-* Sample ID list for [GatherSampleEvidence](#gather-sample-evidence) or [GatherBatchEvidence](#gather-batch-evidence)

-* PED file

-

-

-## Citation

-Please cite the following publication:

-[Collins, Brand, et al. 2020. "A structural variation reference for medical and population genetics." Nature 581, 444-451.](https://doi.org/10.1038/s41586-020-2287-8)

-

-Additional references:

-[Werling et al. 2018. "An analytical framework for whole-genome sequence association studies and its implications for autism spectrum disorder." Nature genetics 50.5, 727-736.](http://dx.doi.org/10.1038/s41588-018-0107-y)

-

-

-## Acknowledgements

-The following resources were produced using data from the All of Us Research Program and have been approved by the Program for public dissemination:

-

-* Genotype filtering model: "aou_recalibrate_gq_model_file" in "inputs/values/resources_hg38.json"

-

-The All of Us Research Program is supported by the National Institutes of Health, Office of the Director: Regional Medical Centers: 1 OT2 OD026549; 1 OT2 OD026554; 1 OT2 OD026557; 1 OT2 OD026556; 1 OT2 OD026550; 1 OT2 OD 026552; 1 OT2 OD026553; 1 OT2 OD026548; 1 OT2 OD026551; 1 OT2 OD026555; IAA #: AOD 16037; Federally Qualified Health Centers: HHSN 263201600085U; Data and Research Center: 5 U2C OD023196; Biobank: 1 U24 OD023121; The Participant Center: U24 OD023176; Participant Technology Systems Center: 1 U24 OD023163; Communications and Engagement: 3 OT2 OD023205; 3 OT2 OD023206; and Community Partners: 1 OT2 OD025277; 3 OT2 OD025315; 1 OT2 OD025337; 1 OT2 OD025276. In addition, the All of Us Research Program would not be possible without the partnership of its participants.

-

-

-## Quickstart

-

-#### WDLs

-There are two scripts for running the full pipeline:

-* `wdl/GATKSVPipelineBatch.wdl`: Runs GATK-SV on a batch of samples.

-* `wdl/GATKSVPipelineSingleSample.wdl`: Runs GATK-SV on a single sample, given a reference panel

-

-#### Building inputs

-Example workflow inputs can be found in `/inputs`. Build using `scripts/inputs/build_default_inputs.sh`, which

-generates input jsons in `/inputs/build`. All required resources are available in public

-Google buckets.

-

-#### MELT

-**Important**: MELT has been replaced with [Scramble](https://github.com/GeneDx/scramble) for mobile element calling. While it is still possible to run GATK-SV with MELT, we no longer support it as a caller. It will be fully deprecated in the future.

-

-Due to licensing restrictions, we cannot redistribute MELT binaries or input files, including the docker image. Some default input files contain MELT inputs that are NOT public (see [Requirements](#requirements)) including:

-

-* `GATKSVPipelineSingleSample.melt_docker` and `GATKSVPipelineBatch.melt_docker` - MELT docker URI (see [Docker readme](https://github.com/talkowski-lab/gatk-sv-v1/blob/master/dockerfiles/README.md))

-* `GATKSVPipelineSingleSample.ref_std_melt_vcfs` - Standardized MELT VCFs ([GatherBatchEvidence](#gather-batch-evidence))

-

-The input values are provided only as placeholders. In some workflows, MELT must be enabled explicitly, by providing the optional MELT inputs and/or by setting an option such as `GATKSVPipelineBatch.use_melt` to `true`. We do not recommend running both Scramble and MELT together.

-

-#### Execution

-We recommend running the pipeline on a dedicated [Cromwell](https://github.com/broadinstitute/cromwell) server with a [cromshell](https://github.com/broadinstitute/cromshell) client. A batch run can be started with the following commands:

-

-```

-> mkdir gatksv_run && cd gatksv_run

-> mkdir wdl && cd wdl

-> cp $GATK_SV_ROOT/wdl/*.wdl .

-> zip dep.zip *.wdl

-> cd ..

-> bash scripts/inputs/build_default_inputs.sh -d $GATK_SV_ROOT

-> cp $GATK_SV_ROOT/inputs/build/ref_panel_1kg/test/GATKSVPipelineBatch/GATKSVPipelineBatch.json GATKSVPipelineBatch.my_run.json

-> cromshell submit wdl/GATKSVPipelineBatch.wdl GATKSVPipelineBatch.my_run.json cromwell_config.json wdl/dep.zip

-```

-

-where `cromwell_config.json` is a Cromwell [workflow options file](https://cromwell.readthedocs.io/en/stable/wf_options/Overview/). Note that users will need to re-populate batch/sample-specific parameters (e.g. BAMs and sample IDs).

-

-## Pipeline Overview

-The pipeline consists of a series of modules that perform the following:

-* [GatherSampleEvidence](#gather-sample-evidence): SV evidence collection, including calls from a configurable set of algorithms (Manta, Scramble, and Wham), read depth (RD), split read positions (SR), and discordant pair positions (PE).

-* [EvidenceQC](#evidence-qc): Dosage bias scoring and ploidy estimation

-* [GatherBatchEvidence](#gather-batch-evidence): Copy number variant calling using cn.MOPS and GATK gCNV; B-allele frequency (BAF) generation; call and evidence aggregation

-* [ClusterBatch](#cluster-batch): Variant clustering

-* [GenerateBatchMetrics](#generate-batch-metrics): Variant filtering metric generation

-* [FilterBatch](#filter-batch): Variant filtering; outlier exclusion

-* [GenotypeBatch](#genotype-batch): Genotyping

-* [MakeCohortVcf](#make-cohort-vcf): Cross-batch integration; complex variant resolution and re-genotyping; vcf cleanup

-* [JoinRawCalls](#join-raw-calls): Merges unfiltered calls across batches

-* [SVConcordance](#svconcordance): Calculates genotype concordance with raw calls

-* [FilterGenotypes](#filter-genotypes): Performs genotype filtering

-* [AnnotateVcf](#annotate-vcf): Annotations, including functional annotation, allele frequency (AF) annotation and AF annotation with external population callsets

-* [Module 09](#module09): Visualization, including scripts that generate IGV screenshots and RD plots.

-* Additional modules to be added: de novo and mosaic scripts

-

-

-Repository structure:

+## Repository structure

+* `/carrot`: [Carrot](https://github.com/broadinstitute/carrot) tests

* `/dockerfiles`: Resources for building pipeline docker images

* `/inputs`: files for generating workflow inputs

* `/templates`: Input json file templates

* `/values`: Input values used to populate templates

-* `/wdl`: WDLs running the pipeline. There is a master WDL for running each module, e.g. `ClusterBatch.wdl`.

* `/scripts`: scripts for running tests, building dockers, and analyzing cromwell metadata files

* `/src`: main pipeline scripts

* `/RdTest`: scripts for depth testing

@@ -183,435 +17,9 @@ Repository structure:

* `/svqc`: Python module for checking that pipeline metrics fall within acceptable limits

* `/svtest`: Python module for generating various summary metrics from module outputs

* `/svtk`: Python module of tools for SV-related datafile parsing and analysis

- * `/WGD`: whole-genome dosage scoring scripts

-

-

-## Cohort mode

-A minimum cohort size of 100 is required, and a roughly equal number of males and females is recommended. For modest cohorts (~100-500 samples), the pipeline can be run as a single batch using `GATKSVPipelineBatch.wdl`.

-

-For larger cohorts, samples should be split up into batches of about 100-500 samples. Refer to the [Batching](#batching) section for further guidance on creating batches.

-

-The pipeline should be executed as follows:

-* Modules [GatherSampleEvidence](#gather-sample-evidence) and [EvidenceQC](#evidence-qc) can be run on arbitrary cohort partitions

-* Modules [GatherBatchEvidence](#gather-batch-evidence), [ClusterBatch](#cluster-batch), [GenerateBatchMetrics](#generate-batch-metrics), and [FilterBatch](#filter-batch) are run separately per batch

-* [GenotypeBatch](#genotype-batch) is run separately per batch, using filtered variants ([FilterBatch](#filter-batch) output) combined across all batches

-* [MakeCohortVcf](#make-cohort-vcf) and beyond are run on all batches together

-

-Note: [GatherBatchEvidence](#gather-batch-evidence) requires a [trained gCNV model](#gcnv-training).

-

-#### Batching

-For larger cohorts, samples should be split up into batches of about 100-500 samples with similar characteristics. We recommend batching based on overall coverage and dosage score (WGD), which can be generated in [EvidenceQC](#evidence-qc). An example batching process is outlined below:

-1. Divide the cohort into PCR+ and PCR- samples

-2. Partition the samples by median coverage from [EvidenceQC](#evidence-qc), grouping samples with similar median coverage together. The end goal is to divide the cohort into roughly equal-sized batches of about 100-500 samples; if your partitions based on coverage are larger or uneven, you can partition the cohort further in the next step to obtain the final batches.

-3. Optionally, divide the samples further by dosage score (WGD) from [EvidenceQC](#evidence-qc), grouping samples with similar WGD score together, to obtain roughly equal-sized batches of about 100-500 samples

-4. Maintain a roughly equal sex balance within each batch, based on sex assignments from [EvidenceQC](#evidence-qc)

-

-

-## Single-sample mode

-`GATKSVPipelineSingleSample.wdl` runs the pipeline on a single sample using a fixed reference panel. An example run with a reference panel containing 156 samples from the [NYGC 1000G Terra workspace](https://app.terra.bio/#workspaces/anvil-datastorage/1000G-high-coverage-2019) can be found in `inputs/build/NA12878/test` after [building inputs](#building-inputs).

-

-## gCNV Training

-Both the cohort and single-sample modes use the [GATK-gCNV](https://gatk.broadinstitute.org/hc/en-us/articles/360035531152) depth calling pipeline, which requires a [trained model](#gcnv-training) as input. The samples used for training should be technically homogeneous and similar to the samples to be processed (i.e. same sample type, library prep protocol, sequencer, sequencing center, etc.). The samples to be processed may comprise all or a subset of the training set. For small, relatively homogeneous cohorts, a single gCNV model is usually sufficient. If a cohort contains multiple data sources, we recommend training a separate model for each [batch](#batching) or group of batches with similar dosage score (WGD). The model may be trained on all or a subset of the samples to which it will be applied; a reasonable default is 100 randomly selected samples from the batch (the random selection can be done as part of the workflow by specifying a number of samples to the `n_samples_subsample` input parameter in `/wdl/TrainGCNV.wdl`).

-

-## Generating a reference panel

-New reference panels can be generated easily from a single run of the `GATKSVPipelineBatch` workflow. If using a Cromwell server, we recommend copying the outputs to a permanent location by adding the following option to the workflow configuration file:

-```

- "final_workflow_outputs_dir" : "gs://my-outputs-bucket",

- "use_relative_output_paths": false,

-```

-Here is an example of how to generate workflow input jsons from `GATKSVPipelineBatch` workflow metadata:

-```

-> cromshell -t60 metadata 38c65ca4-2a07-4805-86b6-214696075fef > metadata.json

-> python scripts/inputs/create_test_batch.py \

- --execution-bucket gs://my-exec-bucket \

- --final-workflow-outputs-dir gs://my-outputs-bucket \

- metadata.json \

- > inputs/values/my_ref_panel.json

-> # Build test files for batched workflows

-> python scripts/inputs/build_inputs.py \

- inputs/values \

- inputs/templates/test \

- inputs/build/my_ref_panel/test \

- -a '{ "test_batch" : "ref_panel_1kg" }'

-> # Build test files for the single-sample workflow

-> python scripts/inputs/build_inputs.py \

- inputs/values \

- inputs/templates/test/GATKSVPipelineSingleSample \

- inputs/build/NA19240/test_my_ref_panel \

- -a '{ "single_sample" : "test_single_sample_NA19240", "ref_panel" : "my_ref_panel" }'

-> # Build files for a Terra workspace

-> python scripts/inputs/build_inputs.py \

- inputs/values \

- inputs/templates/terra_workspaces/single_sample \

- inputs/build/NA12878/terra_my_ref_panel \

- -a '{ "single_sample" : "test_single_sample_NA12878", "ref_panel" : "my_ref_panel" }'

-```

-Note that the inputs to `GATKSVPipelineBatch` may be used as resources for the reference panel and therefore should also be in a permanent location.

-

-## Module Descriptions

-The following sections briefly describe each module and highlight inter-dependent input/output files. Note that input/output mappings can also be gleaned from `GATKSVPipelineBatch.wdl`, and example input templates for each module can be found in `/inputs/templates/test`.

-

-## GatherSampleEvidence

-*Formerly Module00a*

-

-Runs raw evidence collection on each sample with the following SV callers: [Manta](https://github.com/Illumina/manta), [Wham](https://github.com/zeeev/wham), [Scramble](https://github.com/GeneDx/scramble), and/or [MELT](https://melt.igs.umaryland.edu/). For guidance on pre-filtering prior to `GatherSampleEvidence`, refer to the [Sample Exclusion](#sample-exclusion) section.

-

-The `scramble_clusters` and `scramble_table` are generated as outputs for troubleshooting purposes but not consumed by any downstream workflows.

-

-Note: a list of sample IDs must be provided. Refer to the [sample ID requirements](#sampleids) for specifications of allowable sample IDs. IDs that do not meet these requirements may cause errors.

-

-#### Inputs:

-* Per-sample BAM or CRAM files aligned to hg38. Index files (`.bai`) must be provided if using BAMs.

-

-#### Outputs:

-* Caller VCFs (Manta, Scramble, MELT, and/or Wham)

-* Binned read counts file

-* Split reads (SR) file

-* Discordant read pairs (PE) file

-* Scramble intermediate clusters file and table (not needed downstream)

-

-## EvidenceQC

-*Formerly Module00b*

-

-Runs ploidy estimation, dosage scoring, and optionally VCF QC. The results from this module can be used for QC and batching.

-

-For large cohorts, this workflow can be run on arbitrary cohort partitions of up to about 500 samples. Afterwards, we recommend using the results to divide samples into smaller batches (~100-500 samples) with ~1:1 male:female ratio. Refer to the [Batching](#batching) section for further guidance on creating batches.

-

-We also recommend using sex assignments generated from the ploidy estimates and incorporating them into the PED file, with sex = 0 for sex aneuploidies.

-

-#### Prerequisites:

-* [GatherSampleEvidence](#gather-sample-evidence)

-

-#### Inputs:

-* Read count files ([GatherSampleEvidence](#gather-sample-evidence))

-* (Optional) SV call VCFs ([GatherSampleEvidence](#gather-sample-evidence))

-

-#### Outputs:

-* Per-sample dosage scores with plots

-* Median coverage per sample

-* Ploidy estimates, sex assignments, with plots

-* (Optional) Outlier samples detected by call counts

-

-#### Preliminary Sample QC

-The purpose of sample filtering at this stage after EvidenceQC is to prevent very poor quality samples from interfering with the results for the rest of the callset. In general, samples that are borderline are okay to leave in, but you should choose filtering thresholds to suit the needs of your cohort and study. There will be future opportunities (as part of [FilterBatch](#filter-batch)) for filtering before the joint genotyping stage if necessary. Here are a few of the basic QC checks that we recommend:

-* Look at the X and Y ploidy plots, and check that sex assignments match your expectations. If there are discrepancies, check for sample swaps and update your PED file before proceeding.

-* Look at the dosage score (WGD) distribution and check that it is centered around 0 (the distribution of WGD for PCR- samples is expected to be slightly lower than 0, and the distribution of WGD for PCR+ samples is expected to be slightly greater than 0. Refer to the [gnomAD-SV paper](https://doi.org/10.1038/s41586-020-2287-8) for more information on WGD score). Optionally filter outliers.

-* Look at the low outliers for each SV caller (samples with much lower than typical numbers of SV calls per contig for each caller). An empty low outlier file means there were no outliers below the median and no filtering is necessary. Check that no samples had zero calls.

-* Look at the high outliers for each SV caller and optionally filter outliers; samples with many more SV calls than average may be poor quality.

-* Remove samples with autosomal aneuploidies based on the per-batch binned coverage plots of each chromosome.

-

-

-## TrainGCNV

-Trains a [gCNV](https://gatk.broadinstitute.org/hc/en-us/articles/360035531152) model for use in [GatherBatchEvidence](#gather-batch-evidence). The WDL can be found at `/wdl/TrainGCNV.wdl`. See the [gCNV training overview](#gcnv-training-overview) for more information.

-

-#### Prerequisites:

-* [GatherSampleEvidence](#gather-sample-evidence)

-* (Recommended) [EvidenceQC](#evidence-qc)

-

-#### Inputs:

-* Read count files ([GatherSampleEvidence](#gather-sample-evidence))

-

-#### Outputs:

-* Contig ploidy model tarball

-* gCNV model tarballs

-

-

-## GatherBatchEvidence

-*Formerly Module00c*

-

-Runs CNV callers ([cn.MOPS](https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3351174/), [GATK-gCNV](https://gatk.broadinstitute.org/hc/en-us/articles/360035531152)) and combines single-sample raw evidence into a batch. See [above](#cohort-mode) for more information on batching.

-

-#### Prerequisites:

-* [GatherSampleEvidence](#gather-sample-evidence)

-* (Recommended) [EvidenceQC](#evidence-qc)

-* [gCNV training](#gcnv-training)

-

-#### Inputs:

-* PED file (updated with [EvidenceQC](#evidence-qc) sex assignments, including sex = 0 for sex aneuploidies. Calls will not be made on sex chromosomes when sex = 0 in order to avoid generating many confusing calls or upsetting normalized copy numbers for the batch.)

-* Read count, BAF, PE, SD, and SR files ([GatherSampleEvidence](#gather-sample-evidence))

-* Caller VCFs ([GatherSampleEvidence](#gather-sample-evidence))

-* Contig ploidy model and gCNV model files ([gCNV training](#gcnv-training))

-

-#### Outputs:

-* Combined read count matrix, SR, PE, and BAF files

-* Standardized call VCFs

-* Depth-only (DEL/DUP) calls

-* Per-sample median coverage estimates

-* (Optional) Evidence QC plots

-

-

-## ClusterBatch

-*Formerly Module01*

-

-Clusters SV calls across a batch.

-

-#### Prerequisites:

-* [GatherBatchEvidence](#gather-batch-evidence)

-

-#### Inputs:

-* Standardized call VCFs ([GatherBatchEvidence](#gather-batch-evidence))

-* Depth-only (DEL/DUP) calls ([GatherBatchEvidence](#gather-batch-evidence))

-

-#### Outputs:

-* Clustered SV VCFs

-* Clustered depth-only call VCF

-

-

-## GenerateBatchMetrics

-*Formerly Module02*

-

-Generates variant metrics for filtering.

-

-#### Prerequisites:

-* [ClusterBatch](#cluster-batch)

-

-#### Inputs:

-* Combined read count matrix, SR, PE, and BAF files ([GatherBatchEvidence](#gather-batch-evidence))

-* Per-sample median coverage estimates ([GatherBatchEvidence](#gather-batch-evidence))

-* Clustered SV VCFs ([ClusterBatch](#cluster-batch))

-* Clustered depth-only call VCF ([ClusterBatch](#cluster-batch))

-

-#### Outputs:

-* Metrics file

-

-

-## FilterBatch

-*Formerly Module03*

-

-Filters poor quality variants and filters outlier samples. This workflow can be run all at once with the WDL at `wdl/FilterBatch.wdl`, or it can be run in two steps to enable tuning of outlier filtration cutoffs. The two subworkflows are:

-1. FilterBatchSites: Per-batch variant filtration. Visualize SV counts per sample per type to help choose an IQR cutoff for outlier filtering, and preview outlier samples for a given cutoff

-2. FilterBatchSamples: Per-batch outlier sample filtration; provide an appropriate `outlier_cutoff_nIQR` based on the SV count plots and outlier previews from step 1. Note that not removing high outliers can result in increased compute cost and a higher false positive rate in later steps.

-

-#### Prerequisites:

-* [GenerateBatchMetrics](#generate-batch-metrics)

-

-#### Inputs:

-* Batch PED file

-* Metrics file ([GenerateBatchMetrics](#generate-batch-metrics))

-* Clustered SV and depth-only call VCFs ([ClusterBatch](#cluster-batch))

-

-#### Outputs:

-* Filtered SV (non-depth-only a.k.a. "PESR") VCF with outlier samples excluded

-* Filtered depth-only call VCF with outlier samples excluded

-* Random forest cutoffs file

-* PED file with outlier samples excluded

-

-

-## MergeBatchSites

-*Formerly MergeCohortVcfs*

-

-Combines filtered variants across batches. The WDL can be found at: `/wdl/MergeBatchSites.wdl`.

-

-#### Prerequisites:

-* [FilterBatch](#filter-batch)

-

-#### Inputs:

-* List of filtered PESR VCFs ([FilterBatch](#filter-batch))

-* List of filtered depth VCFs ([FilterBatch](#filter-batch))

-

-#### Outputs:

-* Combined cohort PESR and depth VCFs

-

-

-## GenotypeBatch

-*Formerly Module04*

-

-Genotypes a batch of samples across filtered variants combined across all batches.

-

-#### Prerequisites:

-* [FilterBatch](#filter-batch)

-* [MergeBatchSites](#merge-batch-sites)

-

-#### Inputs:

-* Batch PESR and depth VCFs ([FilterBatch](#filter-batch))

-* Cohort PESR and depth VCFs ([MergeBatchSites](#merge-batch-sites))

-* Batch read count, PE, and SR files ([GatherBatchEvidence](#gather-batch-evidence))

-

-#### Outputs:

-* Filtered SV (non-depth-only a.k.a. "PESR") VCF with outlier samples excluded

-* Filtered depth-only call VCF with outlier samples excluded

-* PED file with outlier samples excluded

-* List of SR pass variants

-* List of SR fail variants

-* (Optional) Depth re-genotyping intervals list

-

-

-## RegenotypeCNVs

-*Formerly Module04b*

-

-Re-genotypes probable mosaic variants across multiple batches.

-

-#### Prerequisites:

-* [GenotypeBatch](#genotype-batch)

-

-#### Inputs:

-* Per-sample median coverage estimates ([GatherBatchEvidence](#gather-batch-evidence))

-* Pre-genotyping depth VCFs ([FilterBatch](#filter-batch))

-* Batch PED files ([FilterBatch](#filter-batch))

-* Cohort depth VCF ([MergeBatchSites](#merge-batch-sites))

-* Genotyped depth VCFs ([GenotypeBatch](#genotype-batch))

-* Genotyped depth RD cutoffs file ([GenotypeBatch](#genotype-batch))

-

-#### Outputs:

-* Re-genotyped depth VCFs

-

-

-## MakeCohortVcf

-*Formerly Module0506*

-

-Combines variants across multiple batches, resolves complex variants, re-genotypes, and performs final VCF clean-up.

-

-#### Prerequisites:

-* [GenotypeBatch](#genotype-batch)

-* (Optional) [RegenotypeCNVs](#regenotype-cnvs)

-

-#### Inputs:

-* RD, PE and SR file URIs ([GatherBatchEvidence](#gather-batch-evidence))

-* Batch filtered PED file URIs ([FilterBatch](#filter-batch))

-* Genotyped PESR VCF URIs ([GenotypeBatch](#genotype-batch))

-* Genotyped depth VCF URIs ([GenotypeBatch](#genotype-batch) or [RegenotypeCNVs](#regenotype-cnvs))

-* SR pass variant file URIs ([GenotypeBatch](#genotype-batch))

-* SR fail variant file URIs ([GenotypeBatch](#genotype-batch))

-* Genotyping cutoff file URIs ([GenotypeBatch](#genotype-batch))

-* Batch IDs

-* Sample ID list URIs

-

-#### Outputs:

-* Finalized "cleaned" VCF and QC plots

-

-## JoinRawCalls

-

-Merges raw unfiltered calls across batches. Concordance between these genotypes and the joint call set can be indicative of variant quality and is used downstream for genotype filtering.

-

-#### Prerequisites:

-* [ClusterBatch](#cluster-batch)

-

-#### Inputs:

-* Clustered Manta, Wham, depth, Scramble, and/or MELT VCF URIs ([ClusterBatch](#cluster-batch))

-* PED file

-* Reference sequence

-

-#### Outputs:

-* VCF of clustered raw calls

-* Ploidy table

-

-## SVConcordance

-

-Computes genotype concordance metrics between all variants in the joint call set and raw calls.

-

-#### Prerequisites:

-* [MakeCohortVcf](#make-cohort-vcf)

-* [JoinRawCalls](#join-raw-calls)

-

-#### Inputs:

-* Cleaned ("eval") VCF URI ([MakeCohortVcf](#make-cohort-vcf))

-* Joined raw call ("truth") VCF URI ([JoinRawCalls](#join-raw-calls))

-* Reference dictionary URI

-

-#### Outputs:

-* VCF with concordance annotations

-

-## FilterGenotypes

-

-Performs genotype quality recalibration using a machine learning model based on [xgboost](https://github.com/dmlc/xgboost), and filters genotypes.

-

-The ML model uses the following features:

-

-* Genotype properties:

- * Non-reference and no-call allele counts

- * Genotype quality (GQ)

- * Supporting evidence types (EV) and respective genotype qualities (PE_GQ, SR_GQ, RD_GQ)

- * Raw call concordance (CONC_ST)

-* Variant properties:

- * Variant type (SVTYPE) and size (SVLEN)

- * FILTER status

- * Calling algorithms (ALGORITHMS)

- * Supporting evidence types (EVIDENCE)

- * Two-sided SR support flag (BOTHSIDES_SUPPORT)

- * Evidence overdispersion flag (PESR_GT_OVERDISPERSION)

- * SR noise flag (HIGH_SR_BACKGROUND)

- * Raw call concordance (STATUS, NON_REF_GENOTYPE_CONCORDANCE, VAR_PPV, VAR_SENSITIVITY, TRUTH_AF)

-* Reference context with respect to UCSC Genome Browser tracks:

- * RepeatMasker

- * Segmental duplications

- * Simple repeats

- * K-mer mappability (umap_s100 and umap_s24)

-

-For ease of use, we provide a model pre-trained on high-quality data with truth data derived from long-read calls:

-```

-gs://gatk-sv-resources-public/hg38/v0/sv-resources/resources/v1/gatk-sv-recalibrator.aou_phase_1.v1.model

-```

-See the SV "Genotype Filter" section on page 34 of the [All of Us Genomic Quality Report C2022Q4R9 CDR v7](https://support.researchallofus.org/hc/en-us/articles/4617899955092-All-of-Us-Genomic-Quality-Report-ARCHIVED-C2022Q4R9-CDR-v7) for further details on model training.

-

-All valid genotypes are annotated with a "scaled logit" (SL) score, which is rescaled to non-negative adjusted GQs on [1, 99]. Note that the rescaled GQs should *not* be interpreted as probabilities. Original genotype qualities are retained in the OGQ field.

-

-A more positive SL score indicates higher probability that the given genotype is not homozygous for the reference allele. Genotypes are therefore filtered using SL thresholds that depend on SV type and size. This workflow also generates QC plots using the [MainVcfQc](https://github.com/broadinstitute/gatk-sv/blob/main/wdl/MainVcfQc.wdl) workflow to review call set quality (see below for recommended practices).

-

-This workflow can be run in one of two modes:

-

-1. (Recommended) The user explicitly provides a set of SL cutoffs through the `sl_filter_args` parameter, e.g.

- ```

- "--small-del-threshold 93 --medium-del-threshold 150 --small-dup-threshold -51 --medium-dup-threshold -4 --ins-threshold -13 --inv-threshold -19"

- ```

- Genotypes with SL scores less than the cutoffs are set to no-call (`./.`). The above values were taken directly from Appendix N of the [All of Us Genomic Quality Report C2022Q4R9 CDR v7 ](https://support.researchallofus.org/hc/en-us/articles/4617899955092-All-of-Us-Genomic-Quality-Report-ARCHIVED-C2022Q4R9-CDR-v7). Users should adjust the thresholds depending on data quality and desired accuracy. Please see the arguments in [this script](https://github.com/broadinstitute/gatk-sv/blob/main/src/sv-pipeline/scripts/apply_sl_filter.py) for all available options.

-

-2. (Advanced) The user provides truth labels for a subset of non-reference calls, and SL cutoffs are automatically optimized. These truth labels should be provided as a json file in the following format:

- ```

- {

- "sample_1": {

- "good_variant_ids": ["variant_1", "variant_3"],

- "bad_variant_ids": ["variant_5", "variant_10"]

- },

- "sample_2": {

- "good_variant_ids": ["variant_2", "variant_13"],

- "bad_variant_ids": ["variant_8", "variant_11"]

- }

- }

- ```

- where "good_variant_ids" and "bad_variant_ids" are lists of variant IDs corresponding to non-reference (i.e. het or hom-var) sample genotypes that are true positives and false positives, respectively. SL cutoffs are optimized by maximizing the [F-score](https://en.wikipedia.org/wiki/F-score) with "beta" parameter `fmax_beta`, which modulates the weight given to precision over recall (lower values give higher precision).

-

-In both modes, the workflow additionally filters variants based on the "no-call rate", the proportion of genotypes that were filtered in a given variant. Variants exceeding the `no_call_rate_cutoff` are assigned a `HIGH_NCR` filter status.

-

-We recommend users observe the following basic criteria to assess the overall quality of the filtered call set:

-

-* Number of PASS variants (excluding BND) between 7,000 and 11,000.

-* At least 75% of variants in Hardy-Weinberg equilibrium (HWE). Note that this could be lower, depending on how how closely the cohort adheres to the assumptions of the Hardy-Weinberg model. However, HWE is expected to at least improve after filtering.

-* Low *de novo* inheritance rate (if applicable), typically 5-10%.

-

-These criteria can be assessed from the plots in the `main_vcf_qc_tarball` output, which is generated by default.

-

-#### Prerequisites:

-* [SVConcordance](#svconcordance)

-

-#### Inputs:

-* VCF with genotype concordance annotations URI ([SVConcordance](#svconcordance))

-* Ploidy table URI ([JoinRawCalls](#join-raw-calls))

-* GQRecalibrator model URI

-* Either a set of SL cutoffs or truth labels

-

-#### Outputs:

-* Filtered VCF

-* Call set QC plots (optional)

-* Optimized SL cutoffs with filtering QC plots and data tables (if running mode [2] with truth labels)

-* VCF with only SL annotation and GQ recalibration (before filtering)

-

-## AnnotateVcf

-*Formerly Module08Annotation*

-

-Add annotations, such as the inferred function and allele frequencies of variants, to final VCF.

-

-Annotations methods include:

-* Functional annotation - The GATK tool [SVAnnotate](https://gatk.broadinstitute.org/hc/en-us/articles/13832752531355-SVAnnotate) is used to annotate SVs with inferred functional consequence on protein-coding regions, regulatory regions such as UTR and promoters, and other non-coding elements.

-* Allele Frequency annotation - annotate SVs with their allele frequencies across all samples, and samples of specific sex, as well as specific sub-populations.

-* Allele Frequency annotation with external callset - annotate SVs with the allele frequencies of their overlapping SVs in another callset, eg. gnomad SV callset.

-

-## Module 09 (in development)

-Visualize SVs with [IGV](http://software.broadinstitute.org/software/igv/) screenshots and read depth plots.

-

-Visualization methods include:

-* RD Visualization - generate RD plots across all samples, ideal for visualizing large CNVs.

-* IGV Visualization - generate IGV plots of each SV for individual sample, ideal for visualizing de novo small SVs.

-* Module09.visualize.wdl - generate RD plots and IGV plots, and combine them for easy review.

+ * `/WGD`: whole-genome dosage score scripts

+* `/wdl`: WDLs running the pipeline. There is a master WDL for running each module, e.g. `ClusterBatch.wdl`.

+* `/website`: website code

## CI/CD

This repository is maintained following the norms of

@@ -620,25 +28,3 @@ GATK-SV CI/CD is developed as a set of Github Actions

workflows that are available under the `.github/workflows`

directory. Please refer to the [workflow's README](.github/workflows/README.md)

for their current coverage and setup.

-

-## Troubleshooting

-

-### VM runs out of memory or disk

-* Default pipeline settings are tuned for batches of 100 samples. Larger batches or cohorts may require additional VM resources. Most runtime attributes can be modified through the `RuntimeAttr` inputs. These are formatted like this in the json:

-```

-"MyWorkflow.runtime_attr_override": {

- "disk_gb": 100,

- "mem_gb": 16

-},

-```

-Note that a subset of the struct attributes can be specified. See `wdl/Structs.wdl` for available attributes.

-

-

-### Calculated read length causes error in MELT workflow

-

-Example error message from `GatherSampleEvidence.MELT.GetWgsMetrics`:

-```

-Exception in thread "main" java.lang.ArrayIndexOutOfBoundsException: The requested index 701766 is out of counter bounds. Possible cause of exception can be wrong READ_LENGTH parameter (much smaller than actual read length)

-```

-

-This error message was observed for a sample with an average read length of 117, but for which half the reads were of length 90 and half were of length 151. As a workaround, override the calculated read length by providing a `read_length` input of 151 (or the expected read length for the sample in question) to `GatherSampleEvidence`.

diff --git a/wdl/GenotypeBatch.wdl b/wdl/GenotypeBatch.wdl

index 39855a81e..04343733c 100644

--- a/wdl/GenotypeBatch.wdl

+++ b/wdl/GenotypeBatch.wdl

@@ -28,7 +28,7 @@ workflow GenotypeBatch {

File? pesr_exclude_list # Required unless skipping training

File splitfile

File? splitfile_index

- String? reference_build #hg19 or hg38, Required unless skipping training

+ String? reference_build # Must be hg38, Required unless skipping training

File bin_exclude

File ref_dict

# If all specified, training will be skipped (for single sample pipeline)

diff --git a/website/docs/acknowledgements.md b/website/docs/acknowledgements.md

new file mode 100644

index 000000000..4ee89fbdf

--- /dev/null

+++ b/website/docs/acknowledgements.md

@@ -0,0 +1,18 @@

+---

+title: Acknowledgements

+description: Acknowledgements

+sidebar_position: 10

+---

+

+The following resources were produced using data from the [All of Us Research Program](https://allofus.nih.gov/)

+and have been approved by the Program for public dissemination:

+

+* Genotype filtering model: "aou_recalibrate_gq_model_file" in "inputs/values/resources_hg38.json"

+

+The All of Us Research Program is supported by the National Institutes of Health, Office of the Director: Regional

+Medical Centers: 1 OT2 OD026549; 1 OT2 OD026554; 1 OT2 OD026557; 1 OT2 OD026556; 1 OT2 OD026550; 1 OT2 OD 026552; 1

+OT2 OD026553; 1 OT2 OD026548; 1 OT2 OD026551; 1 OT2 OD026555; IAA #: AOD 16037; Federally Qualified Health Centers:

+HHSN 263201600085U; Data and Research Center: 5 U2C OD023196; Biobank: 1 U24 OD023121; The Participant Center: U24

+OD023176; Participant Technology Systems Center: 1 U24 OD023163; Communications and Engagement: 3 OT2 OD023205; 3 OT2

+OD023206; and Community Partners: 1 OT2 OD025277; 3 OT2 OD025315; 1 OT2 OD025337; 1 OT2 OD025276. In addition, the All

+of Us Research Program would not be possible without the partnership of its participants.

diff --git a/website/docs/advanced/_category_.json b/website/docs/advanced/_category_.json

index 99c08a85c..c88d64045 100644

--- a/website/docs/advanced/_category_.json

+++ b/website/docs/advanced/_category_.json

@@ -1,6 +1,6 @@

{

"label": "Advanced Guides",

- "position": 8,

+ "position": 9,

"link": {

"type": "generated-index"

}

diff --git a/website/docs/advanced/build_inputs.md b/website/docs/advanced/build_inputs.md

index 2beb1993a..66fb6cb2f 100644

--- a/website/docs/advanced/build_inputs.md

+++ b/website/docs/advanced/build_inputs.md

@@ -1,7 +1,7 @@

---

title: Building inputs

description: Building work input json files

-sidebar_position: 1

+sidebar_position: 3

slug: build_inputs

---

@@ -43,8 +43,6 @@ You may run the following commands to get these example inputs.

└── test

```

-## Building inputs for specific use-cases (Advanced)

-

### Build for batched workflows

```shell

@@ -55,69 +53,3 @@ python scripts/inputs/build_inputs.py \

-a '{ "test_batch" : "ref_panel_1kg" }'

```

-

-### Generating a reference panel

-

-This section only applies to the single-sample mode.

-New reference panels can be generated from a single run of the

-`GATKSVPipelineBatch` workflow.

-If using a Cromwell server, we recommend copying the outputs to a

-permanent location by adding the following option to the

-[workflow configuration](https://cromwell.readthedocs.io/en/latest/wf_options/Overview/)

-file:

-

-```json

-"final_workflow_outputs_dir" : "gs://my-outputs-bucket",

-"use_relative_output_paths": false,

-```

-

-Here is an example of how to generate workflow input jsons from `GATKSVPipelineBatch` workflow metadata:

-

-1. Get metadata from Cromwshell.

-

- ```shell

- cromshell -t60 metadata 38c65ca4-2a07-4805-86b6-214696075fef > metadata.json

- ```

-

-2. Run the script.

-

- ```shell

- python scripts/inputs/create_test_batch.py \

- --execution-bucket gs://my-exec-bucket \

- --final-workflow-outputs-dir gs://my-outputs-bucket \

- metadata.json \

- > inputs/values/my_ref_panel.json

- ```

-

-3. Build test files for batched workflows (google cloud project id required).

-

- ```shell

- python scripts/inputs/build_inputs.py \

- inputs/values \

- inputs/templates/test \

- inputs/build/my_ref_panel/test \

- -a '{ "test_batch" : "ref_panel_1kg" }'

- ```

-

-4. Build test files for the single-sample workflow

-

- ```shell

- python scripts/inputs/build_inputs.py \

- inputs/values \

- inputs/templates/test/GATKSVPipelineSingleSample \

- inputs/build/NA19240/test_my_ref_panel \

- -a '{ "single_sample" : "test_single_sample_NA19240", "ref_panel" : "my_ref_panel" }'

- ```

-

-5. Build files for a Terra workspace.

-

- ```shell

- python scripts/inputs/build_inputs.py \

- inputs/values \

- inputs/templates/terra_workspaces/single_sample \

- inputs/build/NA12878/terra_my_ref_panel \

- -a '{ "single_sample" : "test_single_sample_NA12878", "ref_panel" : "my_ref_panel" }'

- ```

-

-Note that the inputs to `GATKSVPipelineBatch` may be used as resources

-for the reference panel and therefore should also be in a permanent location.

diff --git a/website/docs/advanced/build_ref_panel.md b/website/docs/advanced/build_ref_panel.md

new file mode 100644

index 000000000..263a874fd

--- /dev/null

+++ b/website/docs/advanced/build_ref_panel.md

@@ -0,0 +1,73 @@

+---

+title: Building reference panels

+description: Building reference panels for the single-sample pipeline

+sidebar_position: 4

+slug: build_ref_panel

+---

+

+A custom reference panel for the [single-sample mode](/docs/gs/calling_modes#single-sample-mode) can be generated most easily using the

+[GATKSVPipelineBatch](https://github.com/broadinstitute/gatk-sv/blob/main/wdl/GATKSVPipelineBatch.wdl) workflow.

+This must be run on a standalone Cromwell server, as the workflow is unstable on Terra.

+

+:::note

+Reference panels can also be generated by running the pipeline through joint calling on Terra, but there is

+currently no solution for automatically updating inputs.

+:::

+

+We recommend copying the outputs from a Cromwell run to a permanent location by adding the following options to

+the workflow options file:

+```json

+ "final_workflow_outputs_dir" : "gs://my-outputs-bucket",

+ "use_relative_output_paths": false,

+```

+

+Here is an example of how to generate workflow input JSON files from `GATKSVPipelineBatch` workflow metadata:

+

+1. Get metadata from Cromshell.

+

+ ```shell

+ cromshell -t60 metadata 38c65ca4-2a07-4805-86b6-214696075fef > metadata.json

+ ```

+

+2. Run the script.

+

+ ```shell

+ python scripts/inputs/create_test_batch.py \

+ --execution-bucket gs://my-exec-bucket \

+ --final-workflow-outputs-dir gs://my-outputs-bucket \

+ metadata.json \

+ > inputs/values/my_ref_panel.json

+ ```

+

+3. Build test files for batched workflows (Google Cloud project ID required).

+

+ ```shell

+ python scripts/inputs/build_inputs.py \

+ inputs/values \

+ inputs/templates/test \

+ inputs/build/my_ref_panel/test \

+ -a '{ "test_batch" : "ref_panel_1kg" }'

+ ```

+

+4. Build test files for the single-sample workflow.

+

+ ```shell

+ python scripts/inputs/build_inputs.py \

+ inputs/values \

+ inputs/templates/test/GATKSVPipelineSingleSample \

+ inputs/build/NA19240/test_my_ref_panel \

+ -a '{ "single_sample" : "test_single_sample_NA19240", "ref_panel" : "my_ref_panel" }'

+ ```

+

+5. Build files for a Terra workspace.

+

+ ```shell

+ python scripts/inputs/build_inputs.py \

+ inputs/values \

+ inputs/templates/terra_workspaces/single_sample \

+ inputs/build/NA12878/terra_my_ref_panel \

+ -a '{ "single_sample" : "test_single_sample_NA12878", "ref_panel" : "my_ref_panel" }'

+ ```

+

+Note that the inputs to `GATKSVPipelineBatch` may be used as resources

+for the reference panel and therefore should also be in a permanent location.

diff --git a/website/docs/advanced/development/_category_.json b/website/docs/advanced/cromwell/_category_.json

similarity index 53%

rename from website/docs/advanced/development/_category_.json

rename to website/docs/advanced/cromwell/_category_.json

index c924cd2ab..6efde5537 100644

--- a/website/docs/advanced/development/_category_.json

+++ b/website/docs/advanced/cromwell/_category_.json

@@ -1,6 +1,6 @@

{

- "label": "Development",

- "position": 6,

+ "label": "Cromwell",

+ "position": 1,

"link": {

"type": "generated-index"

}

diff --git a/website/docs/advanced/development/cromwell.md b/website/docs/advanced/cromwell/overview.md

similarity index 97%

rename from website/docs/advanced/development/cromwell.md

rename to website/docs/advanced/cromwell/overview.md

index f9c4ef3d2..84ff94ae4 100644

--- a/website/docs/advanced/development/cromwell.md

+++ b/website/docs/advanced/cromwell/overview.md

@@ -1,6 +1,6 @@

---

-title: Cromwell

-description: Running GATK-SV on Cromwell

+title: Overview

+description: Introduction to Cromwell

sidebar_position: 0

---

diff --git a/website/docs/gs/quick_start.md b/website/docs/advanced/cromwell/quick_start.md

similarity index 93%

rename from website/docs/gs/quick_start.md

rename to website/docs/advanced/cromwell/quick_start.md

index b225f7837..a280241bc 100644

--- a/website/docs/gs/quick_start.md

+++ b/website/docs/advanced/cromwell/quick_start.md

@@ -1,18 +1,16 @@

---

-title: Quick Start

-description: Run the pipeline on demo data.

+title: Run

+description: Running GATK-SV on Cromwell

sidebar_position: 1

slug: ./qs

---

-This page provides steps for running the pipeline using demo data.

-

# Quick Start on Cromwell

This section walks you through the steps of running pipeline using

demo data on a managed Cromwell server.

-### Setup Environment

+### Environment Setup

- A running instance of a Cromwell server.

diff --git a/website/docs/advanced/docker/_category_.json b/website/docs/advanced/docker/_category_.json

index e88fd355f..7d3dfa426 100644

--- a/website/docs/advanced/docker/_category_.json

+++ b/website/docs/advanced/docker/_category_.json

@@ -1,6 +1,6 @@

{

- "label": "Docker Images",

- "position": 7,

+ "label": "Docker builds",

+ "position": 2,

"link": {

"type": "generated-index"

}

diff --git a/website/docs/best_practices.md b/website/docs/best_practices.md

new file mode 100644

index 000000000..4c0695d58

--- /dev/null

+++ b/website/docs/best_practices.md

@@ -0,0 +1,18 @@

+---

+title: Best Practices Guide

+description: Guide for using GATK-SV

+sidebar_position: 4

+---

+

+A comprehensive guide for the single-sample calling mode is available in [GATK Best Practices for Structural Variation

+Discovery on Single Samples](https://gatk.broadinstitute.org/hc/en-us/articles/9022653744283-GATK-Best-Practices-for-Structural-Variation-Discovery-on-Single-Samples).

+This material covers basic concepts of structural variant calling, specifics of SV VCF formatting, and

+advanced troubleshooting that also apply to the joint calling mode. This guide is intended to supplement

+the documentation found here.

+

+Users should also review the [Getting Started](/docs/gs/overview) section before attempting to perform SV calling.

+

+The following sections also contain recommendations pertaining to data and call set QC:

+

+- Preliminary sample QC is covered in the [EvidenceQc module](/docs/modules/eqc#preliminary-sample-qc).

+- Assessment of completed call sets is covered on the [MainVcfQc module page](/docs/modules/mvqc).

diff --git a/website/docs/run/_category_.json b/website/docs/execution/_category_.json

similarity index 73%

rename from website/docs/run/_category_.json

rename to website/docs/execution/_category_.json

index eb46f4d4c..f6b285b53 100644

--- a/website/docs/run/_category_.json

+++ b/website/docs/execution/_category_.json

@@ -1,5 +1,5 @@

{

- "label": "Run",

+ "label": "Execution",

"position": 4,

"link": {

"type": "generated-index"

diff --git a/website/docs/execution/joint.md b/website/docs/execution/joint.md

new file mode 100644

index 000000000..176dfbd07

--- /dev/null

+++ b/website/docs/execution/joint.md

@@ -0,0 +1,323 @@

+---

+title: Joint calling

+description: Run the pipeline on a cohort

+sidebar_position: 4

+slug: joint

+---

+

+## Terra workspace

+Users should clone the Terra joint calling workspace (TODO)

+which is configured with a demo sample set.

+Refer to the following sections for instructions on how to run the pipeline on your data using this workspace.

+

+### Default data

+The demonstration data in this workspace consists of 312 publicly available 1000 Genomes Project samples from the

+[NYGC/AnVIL high coverage data set](https://app.terra.bio/#workspaces/anvil-datastorage/1000G-high-coverage-2019),

+divided into two equally-sized batches.

+

+## Pipeline Expectations

+### What does it do?

+This pipeline performs structural variation discovery from CRAMs, joint genotyping, and variant resolution on a cohort

+of samples.

+

+### Required inputs

+The following inputs must be provided for each sample in the cohort via the sample table described in **Workspace

+setup** step 3:

+

+|Input Type|Input Name|Description|

+|---------|--------|--------------|

+|`String`|`sample_id`|Case sample identifier*|

+|`File`|`bam_or_cram_file`|Path to the GCS location of the input CRAM or BAM file.|

+

+*See **Sample ID format** below for specifications.

+

+The following cohort-level or batch-level inputs are also required:

+

+|Input Type|Input Name|Description|

+|---------|--------|--------------|

+|`String`|`sample_set_id`|Batch identifier|

+|`String`|`sample_set_set_id`|Cohort identifier|

+|`File`|`cohort_ped_file`|Path to the GCS location of a family structure definitions file in [PED format](/docs/gs/inputs#ped-format).|

+

+### Pipeline outputs

+

+The following are the main pipeline outputs. For more information on the outputs of each module, refer to the

+[Modules section](/docs/category/modules).

+

+|Output Type|Output Name|Description|

+|---------|--------|--------------|

+|`File`|`annotated_vcf`|Annotated SV VCF for the cohort***|

+|`File`|`annotated_vcf_idx`|Index for `annotated_vcf`|

+|`File`|`sv_vcf_qc_output`|QC plots (bundled in a .tar.gz file)|

+

+***Note that this VCF is not filtered.

+

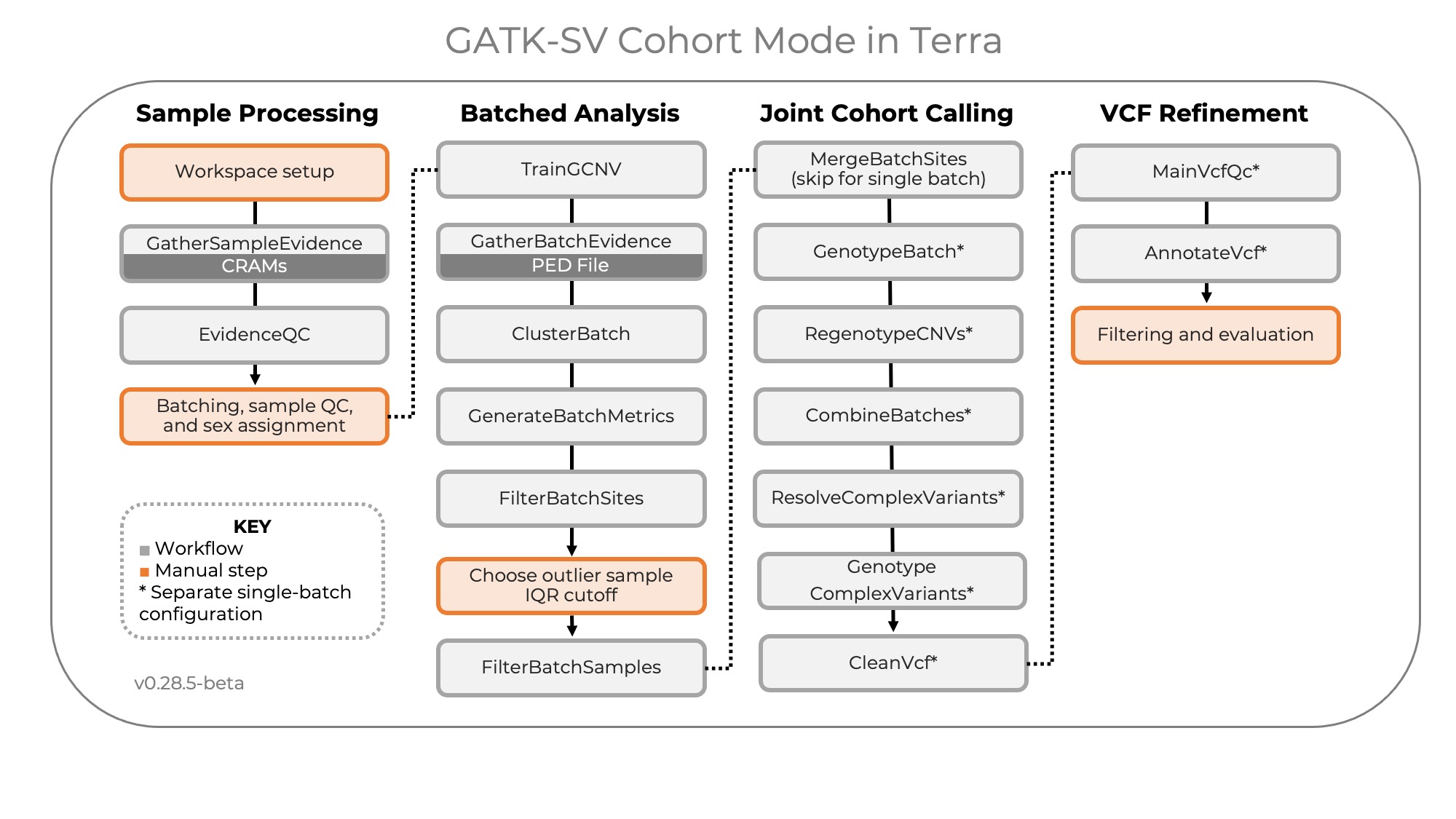

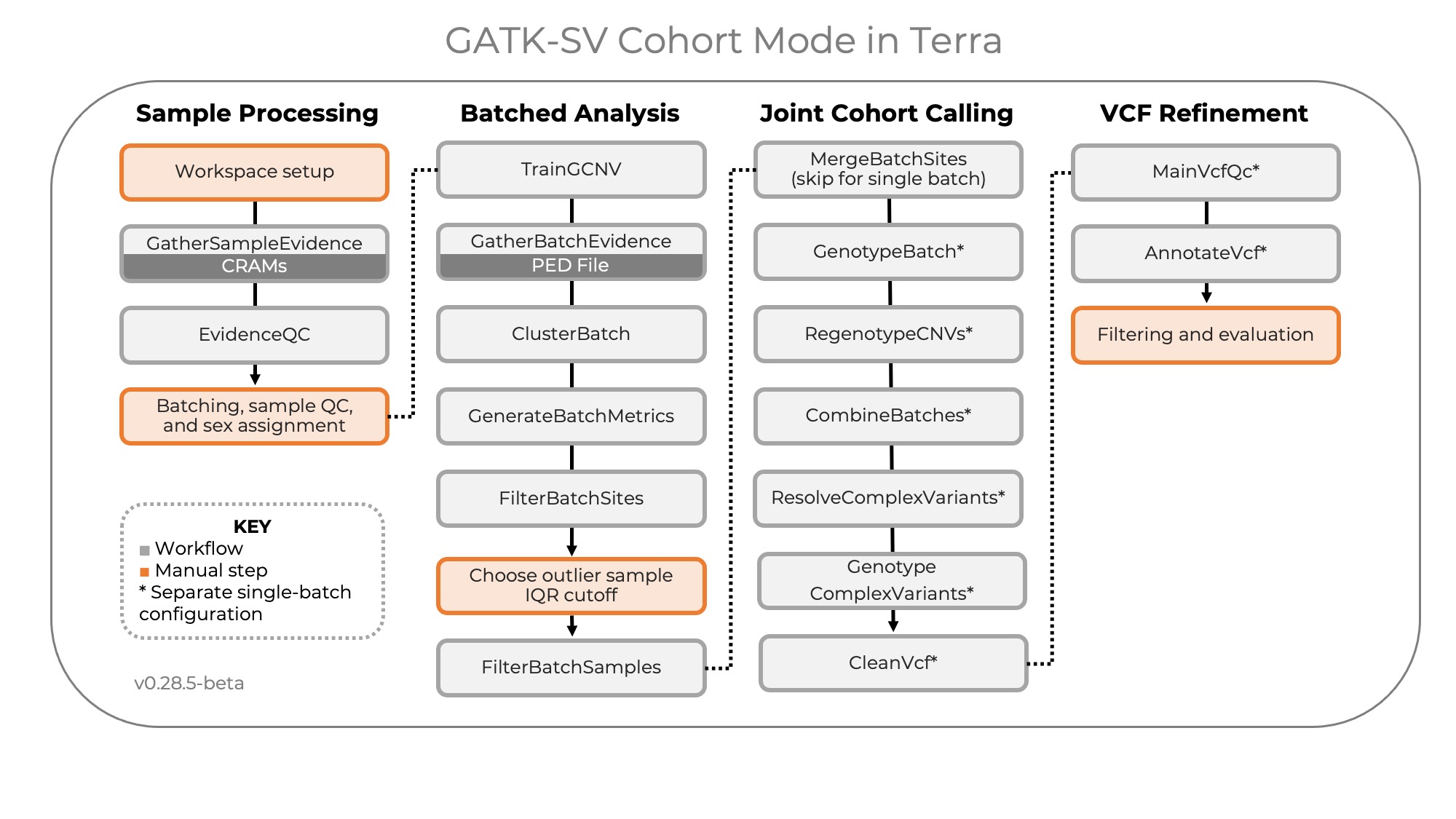

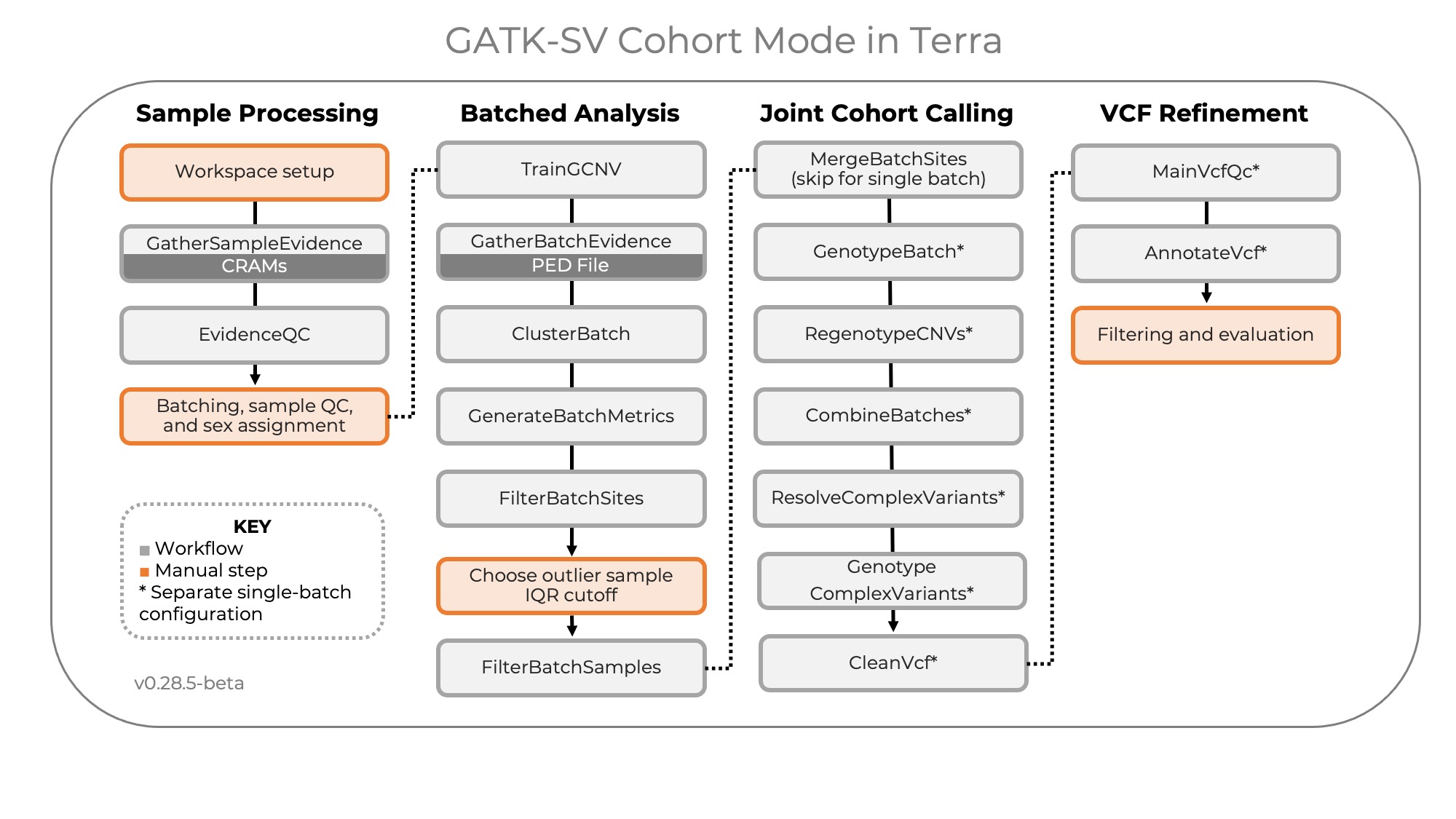

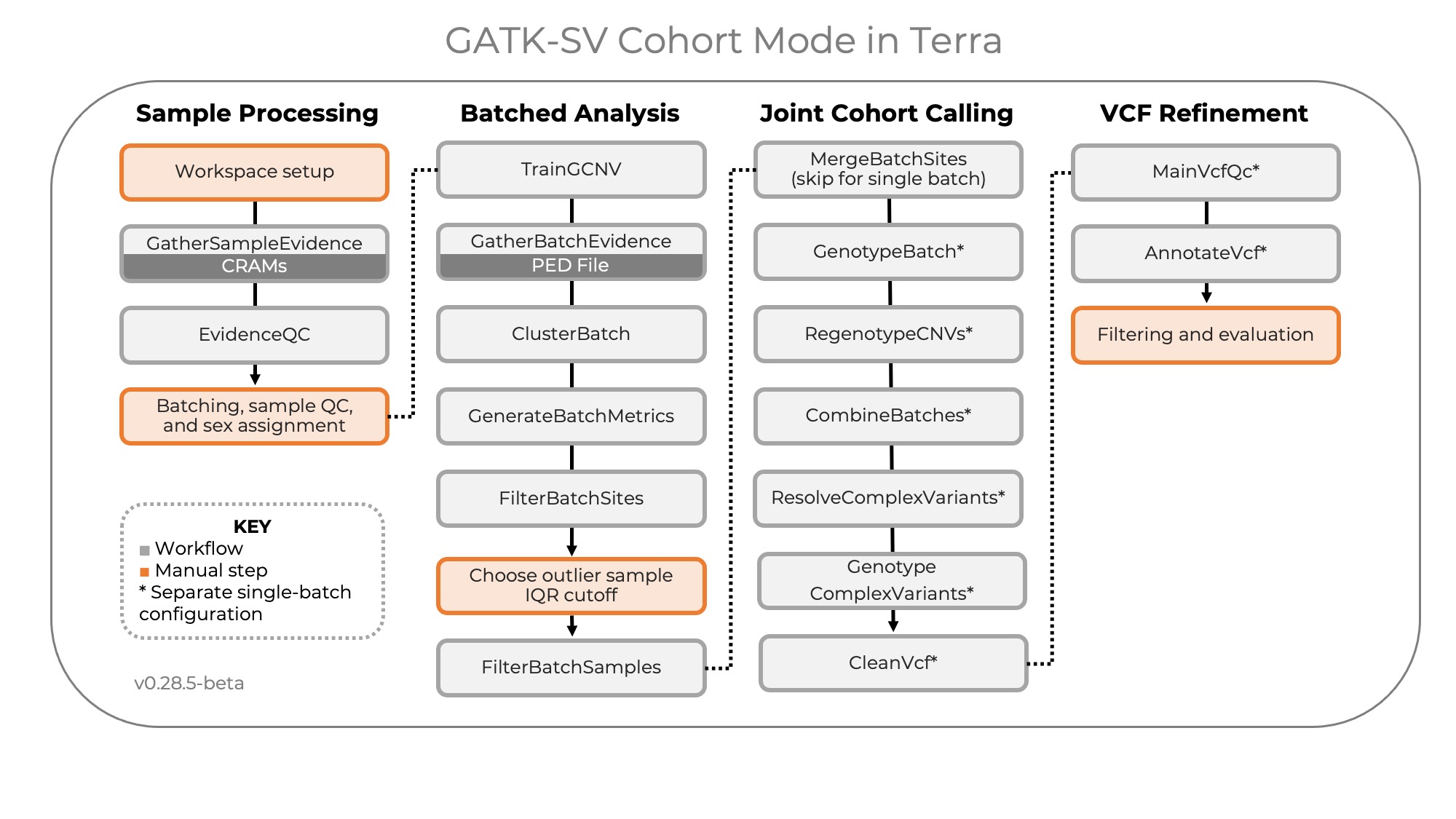

+### Pipeline overview

+

+The following workflows are included in this workspace, to be executed in this order:

+

+1. `01-GatherSampleEvidence`: Per-sample SV evidence collection, including calls from a configurable set of

+algorithms (Manta, MELT, and Wham), read depth (RD), split read positions (SR), and discordant pair positions (PE).

+2. `02-EvidenceQC`: Dosage bias scoring and ploidy estimation, run on preliminary batches

+3. `03-TrainGCNV`: Per-batch training of a gCNV model for use in `04-GatherBatchEvidence`

+4. `04-GatherBatchEvidence`: Per-batch copy number variant calling using cn.MOPS and GATK gCNV; B-allele frequency (BAF)

+generation; call and evidence aggregation

+5. `05-ClusterBatch`: Per-batch variant clustering

+6. `06-GenerateBatchMetrics`: Per-batch variant filtering, metric generation

+7. `07-FilterBatchSites`: Per-batch variant filtering, plus plots of SV counts per sample per SV type to inform the choice of IQR

+cutoff for outlier filtration in `08-FilterBatchSamples`

+8. `08-FilterBatchSamples`: Per-batch outlier sample filtration

+9. `09-MergeBatchSites`: Site merging of SVs discovered across batches, run on a cohort-level `sample_set_set`

+10. `10-GenotypeBatch`: Per-batch genotyping of all sites in the cohort

+11. `11-RegenotypeCNVs`: Cohort-level genotype refinement of some depth calls

+12. `12-CombineBatches`: Cohort-level cross-batch integration and clustering

+13. `13-ResolveComplexVariants`: Complex variant resolution

+14. `14-GenotypeComplexVariants`: Complex variant re-genotyping

+15. `15-CleanVcf`: VCF cleanup

+16. `16-RefineComplexVariants`: Complex variant filtering and refinement

+17. `17-ApplyManualVariantFilter`: Hard filtering high-FP SV classes

+18. `18-JoinRawCalls`: Raw call aggregation

+19. `19-SVConcordance`: Annotate genotype concordance with raw calls

+20. `20-FilterGenotypes`: Genotype filtering

+21. `21-AnnotateVcf`: Cohort VCF annotations, including functional annotation, allele frequency (AF) annotation, and

+AF annotation with external population callsets

+

+Extra workflows (not part of the canonical pipeline, but included for convenience; they may require manual configuration):

+* `MainVcfQc`: Generate detailed call set QC plots

+* `PlotSVCountsPerSample`: Plot SV counts per sample per SV type. We recommend running it before `FilterOutlierSamples`

+ (configured with the single VCF you want to filter) to inform the choice of IQR cutoff.

+* `FilterOutlierSamples`: Filter outlier samples (in terms of SV counts) from a single VCF.

+* `VisualizeCnvs`: Plot multi-sample depth profiles for CNVs

+

+For detailed instructions on running the pipeline in Terra, see [workflow instructions](#instructions) below.

+

+#### What is the maximum number of samples the pipeline can handle?

+

+In Terra, we have tested batch sizes of up to 500 samples and cohort sizes (consisting of multiple batches) of up to

+11,000 samples (and 98,000 samples with the final steps split by chromosome). On a dedicated Cromwell server, we have

+tested the pipeline on cohorts of up to ~140,000 samples.

+

+

+### Time and cost estimates

+

+The following estimates pertain to the 1000 Genomes sample data in this workspace. They represent aggregated run time

+and cost across modules for the whole pipeline. For workflows run multiple times (on each sample or on each batch),

+the longest individual runtime was used. Call caching may affect some of this information.

+

+|Number of samples|Time|Total run cost|Per-sample run cost|

+|--------------|--------|----------|----------|

+|312|~76 hours|~$675|~$2.16/sample|

+

+Please note that sample characteristics, cohort size, and level of filtering may influence pipeline compute costs,

+with average costs ranging between $2-$3 per sample. For instance, PCR+ samples and samples with a high percentage

+of improperly paired reads have been observed to cost more. Consider

+[excluding low-quality samples](/docs/gs/inputs#sample-exclusion) prior to processing to keep costs low.

+

+### Sample ID format

+

+Refer to [the sample ID requirements section](/docs/gs/inputs#sampleids) of the documentation.

+

+### Workspace setup

+

+1. Clone this workspace into a Terra project to which you have access.

+

+2. In your new workspace, delete the example data. To do this, go to the *Data* tab of the workspace. Delete the data

+ tables in this order: `sample_set_set`, `sample_set`, and `sample`. For each table, click the 3 dots icon to the

+ right of the table name and click "Delete table". Confirm when prompted.

+

+3. Create and upload a new sample data table for your samples. This should be a tab-separated file (.tsv) with one line

+ per sample, as well as a header (first) line. It should contain the columns `entity:sample_id` (first column) and

+ `bam_or_cram_file` at minimum. See the **Required inputs** section above for more information on these inputs. For

+ an example sample data table, refer to the sample data table for the 1000 Genomes samples in this workspace

+ [here in the GATK-SV GitHub repository](https://github.com/broadinstitute/gatk-sv/blob/master/input_templates/terra_workspaces/cohort_mode/samples_1kgp.tsv.tmpl).

+ To upload the TSV file, navigate to the *Data* tab of the workspace, click the `Import Data` button on the top left,

+ and select "Upload TSV".

+

+4. Edit the `cohort_ped_file` item in the Workspace Data table to provide the Google Cloud Storage (`gs://`) URI

+ to the PED file for your cohort (make sure to share it with your Terra proxy account!).

+

+
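The sample data table from step 3 can also be generated with a short script. This is a minimal sketch: the helper name, sample IDs, and `gs://` paths are hypothetical placeholders (real IDs must follow the sample ID requirements), and only the two required columns named in step 3 are written.

```python
import csv
import io


def write_sample_table(samples: dict[str, str], out) -> None:
    """Write a Terra sample data table with the two required columns.

    `samples` maps each sample ID to the gs:// URI of its BAM or CRAM file.
    """
    writer = csv.writer(out, delimiter="\t", lineterminator="\n")
    # Header line: `entity:sample_id` must be the first column.
    writer.writerow(["entity:sample_id", "bam_or_cram_file"])
    for sample_id, path in samples.items():
        writer.writerow([sample_id, path])


# Hypothetical sample IDs and bucket paths, for illustration only.
example = {
    "SAMPLE_001": "gs://my-bucket/crams/SAMPLE_001.cram",
    "SAMPLE_002": "gs://my-bucket/crams/SAMPLE_002.cram",
}
buffer = io.StringIO()
write_sample_table(example, buffer)
print(buffer.getvalue(), end="")
```

To produce a file for "Upload TSV", pass a handle from `open("samples.tsv", "w", newline="")` instead of the in-memory buffer.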

+### Creating sample_sets

+

+The easiest way to create batches (in the `sample_set` table) is to upload a tab-separated sample set membership file.

+This file should have one line per sample, plus a header (first) line. The first column should be

+`membership:sample_set_id` (containing the `sample_set_id` for the sample in question), and the second should be

+`sample` (containing the sample IDs). Recall that batch IDs (`sample_set_id`) should follow the

+[sample ID requirements](/docs/gs/inputs#sampleids). For an example sample membership file, refer to the one for the

+1000 Genomes samples in this workspace [here in the GATK-SV GitHub repository](https://github.com/broadinstitute/gatk-sv/blob/master/input_templates/terra_workspaces/cohort_mode/sample_set_membership_1kgp.tsv.tmpl).
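
A membership file in the format described above can be written from a mapping of batch IDs to sample IDs. This is a minimal sketch; the helper name and all batch and sample IDs are hypothetical, and the batch assignments themselves should come from the batching step rather than from this script.

```python
import csv
import io


def write_membership_table(batches: dict[str, list[str]], out) -> None:
    """Write a Terra sample set membership file: one line per sample.

    `batches` maps each batch ID (sample_set_id) to its list of sample IDs.
    """
    writer = csv.writer(out, delimiter="\t", lineterminator="\n")
    # First column holds the batch ID, second holds the sample ID.
    writer.writerow(["membership:sample_set_id", "sample"])
    for batch_id, sample_ids in batches.items():
        for sample_id in sample_ids:
            writer.writerow([batch_id, sample_id])


# Hypothetical batch and sample IDs, for illustration only.
batches = {
    "batch_1": ["SAMPLE_001", "SAMPLE_002"],
    "batch_2": ["SAMPLE_003"],
}
buffer = io.StringIO()
write_membership_table(batches, buffer)
print(buffer.getvalue(), end="")
```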

+

+## Workflow instructions {#instructions}

+

+### General recommendations

+

+* Run each workflow on one sample or batch first to check that the method is properly configured

+before you attempt to process all of your data.

+* We recommend enabling call-caching (on by default in each workflow configuration).

+* We recommend enabling automatic intermediate file deletion by checking the box labeled "Delete intermediate outputs"

+at the top of the workflow launch page every time you start a workflow. With this option enabled, intermediate files

+(those not present in the Terra data table, and not needed for any further GATK-SV processing) will be deleted

+automatically if the workflow succeeds. If the workflow fails, the outputs will be retained to enable a re-run to

+pick up where it left off with call-caching. However, because the intermediate files of successful runs are deleted,

+those runs cannot be used for call-caching later. For more information on this option, see

+[this article](https://terra.bio/delete-intermediates-option-now-available-for-workflows-in-terra/). For guidance on

+managing intermediate storage from failed workflows, or from workflows without the delete intermediates option enabled,

+see the next bullet point.

+* There are cases when you may need to manage storage in other ways: for workflows that failed (only delete files from

+a failed workflow after a version has succeeded, to avoid disabling call-caching), for workflows without intermediate

+file deletion enabled, or once you are done processing and want to delete files from earlier steps in the pipeline

+that you no longer need.

+ * One option is to manually delete large files, or directories containing failed workflow intermediates (after

+ re-running the workflow successfully to take advantage of call-caching) with the command

+ `gsutil -m rm gs://path/to/workflow/directory/**file_extension_to_delete` to delete all files with the given extension

+ for that workflow, or `gsutil -m rm -r gs://path/to/workflow/directory/` to delete an entire workflow directory

+ (only after you are done with all the files!). Note that this can take a very long time for larger workflows, which

+ may contain thousands of files.

+ * Another option is to use the `fiss mop` API call to delete all files that do not appear in one of the Terra data

+ tables (intermediate files). Always ensure that you are completely done with a step and you will not need to return

+ before using this option, as it will break call-caching. See

+ [this blog post](https://terra.bio/deleting-intermediate-workflow-outputs/) for more details. This can also be done

+ [via the command line](https://github.com/broadinstitute/fiss/wiki/MOP:-reducing-your-cloud-storage-footprint).

+* If your workflow fails, check the job manager for the error message. Most issues can be resolved by increasing the

+memory or disk space allocated to the failing task. Do not delete workflow log files until you are done troubleshooting.

+If call-caching is enabled, do not delete any files from the failed workflow until you have run it successfully.

+* Non-Broad users must complete a one-time setup before Terra can display run costs; see

+[this article](https://support.terra.bio/hc/en-us/articles/360037862771#h_01EX5ED53HAZ59M29DRCG24CXY) for instructions.
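
The two `gsutil` cleanup commands described above can be assembled programmatically, for example to review them before running anything. These helpers are hypothetical and not part of GATK-SV; they only build argument lists, so running the sketch as-is deletes nothing. Because the arguments are passed without a shell, the `**` wildcard reaches `gsutil`, which expands it itself.

```python
def gsutil_delete_by_extension(workflow_dir: str, extension: str) -> list[str]:
    """Build `gsutil -m rm gs://dir/**<extension>` (recursive wildcard match)."""
    return ["gsutil", "-m", "rm", f"{workflow_dir.rstrip('/')}/**{extension}"]


def gsutil_delete_directory(workflow_dir: str) -> list[str]:
    """Build `gsutil -m rm -r gs://dir/` to remove an entire workflow directory."""
    return ["gsutil", "-m", "rm", "-r", f"{workflow_dir.rstrip('/')}/"]


# Hypothetical workflow directory; substitute the real execution directory.
wf_dir = "gs://my-bucket/submissions/example-submission/GatherSampleEvidence/example-workflow-id"
for cmd in (gsutil_delete_by_extension(wf_dir, ".bam"), gsutil_delete_directory(wf_dir)):
    # Print for review; pass `cmd` to subprocess.run(cmd, check=True) to execute.
    print(" ".join(cmd))
```

The same caveats apply as for the manual commands: only delete a failed workflow's files after a re-run has succeeded, and only delete a whole directory once you no longer need any of its files.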

+

+### 01-GatherSampleEvidence

+

+Read the full GatherSampleEvidence documentation [here](/docs/modules/gse).

+* This workflow runs on a per-sample level, but you can launch many (a few hundred) samples at once, in arbitrary

+partitions. Make sure to try just one sample first though!

+* Refer to the [Input Data section](/docs/gs/inputs) for details on file formats, sample QC, and sample ID restrictions.

+* It is normal for a few samples in a cohort to run out of memory during Wham SV calling, so we recommend enabling

+auto-retry for out-of-memory errors for `01-GatherSampleEvidence` only. Before you launch the workflow, click the

+checkbox reading "Retry with more memory" and set the memory retry factor to 1.8. This action must be performed each

+time you launch a `01-GatherSampleEvidence` job.

+* Please note that most large published joint call sets produced by GATK-SV, including gnomAD-SV, included the tool

+MELT, a state-of-the-art mobile element insertion (MEI) detector, as part of the pipeline. Due to licensing

+restrictions, we cannot provide a public docker image for this algorithm. By default, the `01-GatherSampleEvidence`

+workflow does not use MELT as one of its SV callers, which results in lower sensitivity to MEIs. To use MELT, you

+will need to build your own private docker image (example Dockerfile

+[here](https://github.com/broadinstitute/gatk-sv/blob/master/dockerfiles/melt/Dockerfile)), share it with your Terra

+proxy account, enter it in the `melt_docker` input in the `01-GatherSampleEvidence` configuration (as a string,

+surrounded by double-quotes), and then click "Save". No further changes are necessary beyond `01-GatherSampleEvidence`.

+ * Note that the version of MELT tested with GATK-SV is v2.0.5. If you use a different version to create your own

+ docker image, we recommend testing your image by running one pilot sample through `01-GatherSampleEvidence` to check

+ that it runs as expected, then running a small group of about 10 pilot samples through the pipeline until the end of

+ `04-GatherBatchEvidence` to check that the outputs are compatible with GATK-SV.

+* If you enable "Delete intermediate outputs" whenever you launch this workflow (recommended), BAM files will be

+deleted for successful runs. However, BAM files will not be deleted if the run fails or if intermediate file deletion

+is not enabled. Since BAM files are large, we recommend deleting them to save on storage costs, but only after fixing

+and re-running the failed workflow, so that it can use call-caching.
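
To put the memory retry factor of 1.8 in concrete terms: a task that fails with an out-of-memory error is retried with its memory multiplied by the factor. The sketch below assumes the factor compounds, i.e. each retry multiplies the previous attempt's memory; the 10 GB starting allocation is a hypothetical example, not a GATK-SV default.

```python
def memory_after_retries(initial_gb: float, factor: float = 1.8, retries: int = 1) -> float:
    """Memory (GB) requested after `retries` out-of-memory retries.

    Assumes the retry factor compounds, i.e. each retry multiplies the
    previous attempt's memory by `factor`.
    """
    if factor < 1 or retries < 0:
        raise ValueError("factor must be >= 1 and retries must be >= 0")
    return initial_gb * factor ** retries


# Hypothetical 10 GB starting allocation.
for n in range(3):
    print(f"attempt {n + 1}: {memory_after_retries(10, retries=n):.1f} GB")
```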

+

+

+### 02-EvidenceQC

+

+Read the full EvidenceQC documentation [here](/docs/modules/eqc).

+* `02-EvidenceQC` is run on arbitrary cohort partitions of up to 500 samples.

+* The outputs from `02-EvidenceQC` can be used for

+[preliminary sample QC](/docs/modules/eqc#preliminary-sample-qc) and

+[batching](#batching) before moving on to [TrainGCNV](#traingcnv).
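
Because `02-EvidenceQC` accepts arbitrary partitions of up to 500 samples, a cohort can be split into evenly sized chunks before launch. This is a minimal sketch: the function name and the even-sizing choice are assumptions, and these partitions are only for EvidenceQC, not the batches used from TrainGCNV onward, which should group samples with similar characteristics.

```python
import math


def partition(sample_ids: list[str], max_size: int = 500) -> list[list[str]]:
    """Split sample IDs into the fewest partitions of at most `max_size`,
    keeping partition sizes as even as possible."""
    if max_size < 1:
        raise ValueError("max_size must be positive")
    if not sample_ids:
        return []
    n_parts = math.ceil(len(sample_ids) / max_size)
    base, extra = divmod(len(sample_ids), n_parts)
    parts, start = [], 0
    for i in range(n_parts):
        # The first `extra` partitions receive one additional sample.
        size = base + (1 if i < extra else 0)
        parts.append(sample_ids[start:start + size])
        start += size
    return parts


# Hypothetical cohort of 1,203 sample IDs.
cohort = [f"SAMPLE_{i:04d}" for i in range(1203)]
print([len(p) for p in partition(cohort)])
```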

+

+

+### Batching (manual step) {#batching}

+

+For larger cohorts, samples should be split into batches of about 100-500

+samples with similar characteristics. We recommend batching based on overall

+coverage and dosage score (WGD), both of which are generated by [EvidenceQC](/docs/modules/eqc).

+An example batching process is outlined below:

+

+1. Divide the cohort into PCR+ and PCR- samples

+2. Partition the samples by median coverage from [EvidenceQC](/docs/modules/eqc),