In current games, players see a generic hand model, but in reality hand shapes vary a lot from person to person (try it out!):

Seeing someone else’s hands move instead of your own feels uncanny and causes discomfort. As a workaround, most games apply clever tricks, like covering the player’s hands or showing gloves instead.

HandVR aims to solve this by using deep learning to show personalized hand models based on each player's physical characteristics.

Read more: https://medium.com/kitchen-budapest/personalized-hand-models-for-vr-bdf6d6f8fad3

This part uses autoencoders to reduce the dimensionality of hand poses to 2, which makes the poses easier to interpret and gives users direct control over them.
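As a rough illustration of the 2-dimensional bottleneck, here is a minimal NumPy sketch of a fully connected autoencoder forward pass. It is not the project's implementation: the pose size (`POSE_DIM = 45`), the hidden width, and all function names are assumptions, and the weights are random and untrained.

```python
import numpy as np

POSE_DIM = 45    # assumption: 15 joints x 3 angles; the project's pose size may differ
LATENT_DIM = 2   # from the text: poses are compressed to 2 dimensions
HIDDEN_DIM = 32  # hypothetical hidden layer width

rng = np.random.default_rng(0)

def init_layer(n_in, n_out):
    """Return (weights, bias) with small random weights (untrained)."""
    return rng.normal(0.0, 0.1, size=(n_in, n_out)), np.zeros(n_out)

enc1 = init_layer(POSE_DIM, HIDDEN_DIM)
enc2 = init_layer(HIDDEN_DIM, LATENT_DIM)
dec1 = init_layer(LATENT_DIM, HIDDEN_DIM)
dec2 = init_layer(HIDDEN_DIM, POSE_DIM)

def encode(poses):
    """Map poses of shape (N, POSE_DIM) to latent codes of shape (N, 2)."""
    hidden = np.tanh(poses @ enc1[0] + enc1[1])
    return hidden @ enc2[0] + enc2[1]

def decode(latents):
    """Map latent codes of shape (N, 2) back to poses of shape (N, POSE_DIM)."""
    hidden = np.tanh(latents @ dec1[0] + dec1[1])
    return hidden @ dec2[0] + dec2[1]

def reconstruction_loss(poses):
    """Mean squared error between the input poses and their reconstructions."""
    return float(np.mean((decode(encode(poses)) - poses) ** 2))

poses = rng.normal(size=(8, POSE_DIM))  # random stand-in for real pose data
latents = encode(poses)
print(latents.shape)
```

Training would minimize `reconstruction_loss` over the dataset; that step is left out here because the framework and optimizer used by the project are not stated.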

Here is such a manifold along with the positions of the training samples in the latent space:
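A manifold picture of this kind is typically produced by decoding a regular grid of latent points. The sketch below shows that idea with NumPy; `latent_grid`, `decode_manifold`, the grid range, and the `toy_decoder` stand-in are all hypothetical and would be replaced by the trained decoder and a suitable range.

```python
import numpy as np

def latent_grid(n=5, low=-2.0, high=2.0):
    """Return an (n*n, 2) array of evenly spaced 2D latent coordinates."""
    axis = np.linspace(low, high, n)
    xs, ys = np.meshgrid(axis, axis)
    return np.stack([xs.ravel(), ys.ravel()], axis=1)

def decode_manifold(decode, n=5, low=-2.0, high=2.0):
    """Decode every grid point and arrange the results as an (n, n, pose_dim) array."""
    grid = latent_grid(n, low, high)
    poses = decode(grid)
    return poses.reshape(n, n, -1)

def toy_decoder(latents):
    """Stand-in for a trained decoder: maps (N, 2) codes to (N, 4) fake poses."""
    return np.concatenate([latents, latents ** 2], axis=1)

manifold = decode_manifold(toy_decoder, n=5)
print(manifold.shape)
```

Each cell of `manifold` holds one decoded pose, which can then be rendered as a hand and tiled into a single image.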

The implemented autoencoders include:

- Fully Connected AE (vanilla)

- Convolutional AE (exploits the hierarchy of adjacent joints)

- Variational AE

- VAE GAN
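For readers unfamiliar with the variational variant, the sketch below shows the two pieces that usually distinguish a VAE from a plain autoencoder: the reparameterization trick and the KL term against a standard normal prior. This is the textbook formulation written in NumPy, not code taken from this project; the function names are assumptions.

```python
import numpy as np

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, I), where sigma = exp(0.5 * log_var)."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps

def kl_to_standard_normal(mu, log_var):
    """Per-sample KL divergence between N(mu, diag(sigma^2)) and N(0, I)."""
    return -0.5 * np.sum(1.0 + log_var - mu ** 2 - np.exp(log_var), axis=1)

rng = np.random.default_rng(0)
mu = np.zeros((4, 2))       # 4 samples, 2 latent dimensions
log_var = np.zeros((4, 2))  # log variance of 0 means unit variance

z = reparameterize(mu, log_var, rng)
print(z.shape)
print(kl_to_standard_normal(mu, log_var))
```

The KL term is zero when the encoder output already matches the prior and grows as the predicted distribution moves away from it; a VAE loss adds this term to the reconstruction loss.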

Reconstruction losses of joint angles:

This dataset was created by running the OpenPose joint detector on the 11k Hands dataset and manually verifying the results.

While training the autoencoders, it is crucial to see how the model is progressing. I therefore created a tool that renders the model's output as fast as possible.

- Uses OpenGL (via ModernGL)

- Face normals are calculated in a compute shader

- Skinning is done in one batch
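The two geometry steps in the list above can be sketched on the CPU with NumPy. This is only an illustration of the math: the actual renderer computes normals in a compute shader, and the skinning shown here is generic linear blend skinning, which is an assumption about the method. The function names and array layouts are hypothetical.

```python
import numpy as np

def face_normals(vertices, faces):
    """Unit normal per triangle. vertices: (V, 3) floats, faces: (F, 3) vertex indices."""
    v0 = vertices[faces[:, 0]]
    v1 = vertices[faces[:, 1]]
    v2 = vertices[faces[:, 2]]
    normals = np.cross(v1 - v0, v2 - v0)
    lengths = np.linalg.norm(normals, axis=1, keepdims=True)
    return normals / np.maximum(lengths, 1e-12)  # guard against degenerate faces

def skin_vertices(vertices, weights, joint_transforms):
    """Linear blend skinning for all vertices at once.

    vertices: (V, 3), weights: (V, J) with rows summing to 1,
    joint_transforms: (J, 4, 4) homogeneous matrices.
    """
    # Blend the joint matrices per vertex: (V, 4, 4)
    blended = np.einsum("vj,jab->vab", weights, joint_transforms)
    homogeneous = np.concatenate([vertices, np.ones((len(vertices), 1))], axis=1)
    skinned = np.einsum("vab,vb->va", blended, homogeneous)
    return skinned[:, :3]

# One triangle, two joints: joint 0 stays put, joint 1 moves one unit along z.
vertices = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
faces = np.array([[0, 1, 2]])
weights = np.array([[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]])
transforms = np.stack([np.eye(4), np.eye(4)])
transforms[1, 2, 3] = 1.0

skinned = skin_vertices(vertices, weights, transforms)
print(skinned)
print(face_normals(vertices, faces))
```

Doing the blend as a single `einsum` over all vertices mirrors the "one batch" idea: there is no per-vertex Python loop.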

The manifolds are logged to TensorBoard during training for visualization.

The MANO models, both the low-poly registrations and the high-poly scans, are provided in the PLY format.
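To check what a PLY file contains before loading it, the header can be read on its own, since it is plain text in both ASCII and binary PLY files. The sketch below is a minimal header reader; `read_ply_header` is a hypothetical helper, and the in-memory example file is made up rather than taken from MANO.

```python
import io

def read_ply_header(stream):
    """Read a PLY header from a binary stream and return (format, element counts)."""
    if stream.readline().strip() != b"ply":
        raise ValueError("not a PLY file: missing 'ply' magic line")
    file_format = None
    counts = {}
    for raw_line in stream:
        parts = raw_line.strip().split()
        if parts == [b"end_header"]:
            break
        if parts[:1] == [b"format"]:
            file_format = parts[1].decode("ascii")
        elif parts[:1] == [b"element"]:
            counts[parts[1].decode("ascii")] = int(parts[2])
    else:
        raise ValueError("PLY header is missing 'end_header'")
    return file_format, counts

# Made-up example header; a real file would be opened with open(path, "rb").
example = io.BytesIO(
    b"ply\n"
    b"format ascii 1.0\n"
    b"element vertex 3\n"
    b"property float x\n"
    b"property float y\n"
    b"property float z\n"
    b"element face 1\n"
    b"property list uchar int vertex_indices\n"
    b"end_header\n"
)
file_format, counts = read_ply_header(example)
print(file_format, counts)
```

For loading the full geometry, a dedicated PLY library is the safer choice, since binary payloads and property layouts vary between files.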

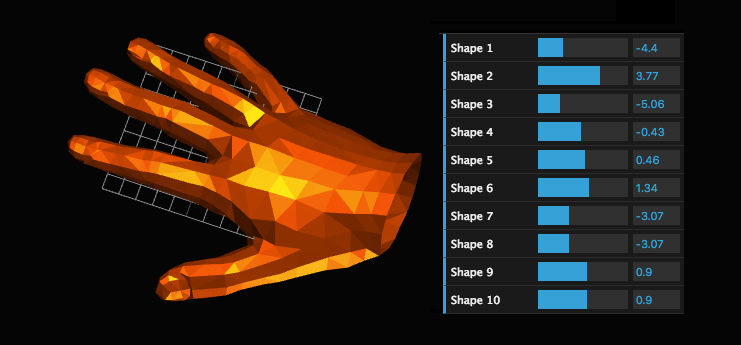

Interactive web demo for visualizing the shape parameters

The MANO model is licensed under: http://mano.is.tue.mpg.de/license