-

Notifications

You must be signed in to change notification settings - Fork 788

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

[Merged by Bors] - Subscribe to altair gossip topics 2 slots before fork #2532

Conversation

|

@michaelsproul can we tweak the |

Yeah I think this would fine. No fork is actually going to activate at |

4e53423 to

00bdafe

Compare

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

There's a few things we probably need to consider here.

If we receive messages on a topic we are subscribed and then reject them, this is considered censoring and the gossipsub scoring algo will penalize us. The correct approach is to only subscribe to a topic when we want to receive messages.

If there is a period of time we dont want to receive messages, we should not subscribe to the topic. In this case it seems we dont want to subscribe to the topic until the fork.

If we do want to keep this current logic, it might also be nicer to filter the messages at the behaviour level. The behaviour has a fork_context so I think its possible to filter the messages as we get them, rather than sending them up to the network to do it for us.

00bdafe to

4767892

Compare

I subscribed to all topics before the fork mainly because the spec says We could also interpret it as subscribe to the post-fork topics a few epochs in advance. But we would still have to deal with the same problem of rejecting messages only for a shorter duration.

I think this is fine because a node sending us post fork messages before the fork slot is either malicious or has clock issues. So we probably wouldn't want to peer with such a node anyway.

I started out implementing this but it got ugly because the behaviour doesn't have the slot clock which we need to check if we are within We could simplify things by not accounting for With all this, I felt it was the cleanest to handle it in the network service. Please let me know if there's a better way of handling it 🙏 |

4767892 to

6e3b210

Compare

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

The fork epoch stuff is looking good 👌

|

I think its still not right to subscribe to a topic and then try and downscore peers who send us information on a topic we've subscribed to. Although this may work at a lighthouse level, we're breaking a few things in gossipsub, which is designed to give you messages for which you are subscribed (i.e we will also get gossip (IHAVE messages) from random other peers subscribed to the topic if they accept them at the gossip level, and i'm worried we could be banning misc peers). If we don't want messages from a topic, we shouldn't subscribe to it. Building the mesh should be relatively quick (so we don't need much time to subscribe in advance). From reading the spec, it just says we should subscribe in advance (which I agree with) but maybe like 1 or two slots in advance of the fork. This way we don't have to worry about which topic we get messages on, we let gossipsub filter things we don't want out, simply by our subscriptions (all the gossipsub scoring logic should remain fine this way also). In terms of implementation, I've not looked closely at the gossipsub subscription timing logic. But I imagine the I could be missing something, so let me know if there's other things you think we should consider here. |

017abd5 to

406ef4f

Compare

|

@AgeManning Agree with your points above. Reverted the gossipsub filtering and added a new timer that fires We subscribe to both base and altair fork_digests if the beacon node is started between Tested all scenarios on local testnets and the simulator and it is working as expected. |

|

I ran this for the Prater Altair fork and it went well! Just for clarity, my understanding is that we're blocked on approval from @AgeManning. No rush, just wanted to make it clear. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

We discussed this internally and decided we should merge this for mainnet and make a side PR to shift some of the logic into the behaviour.

|

@pawanjay176 - Could you merge in the latest unstable and resolve the conflicts, then I think we're good to go. |

|

bors r+ |

## Issue Addressed N/A ## Proposed Changes Add a fork_digest to `ForkContext` only if it is set in the config. Reject gossip messages on post fork topics before the fork happens. Edit: Instead of rejecting gossip messages on post fork topics, we now subscribe to post fork topics 2 slots before the fork. Co-authored-by: Age Manning <[email protected]>

|

Pull request successfully merged into unstable. Build succeeded: |

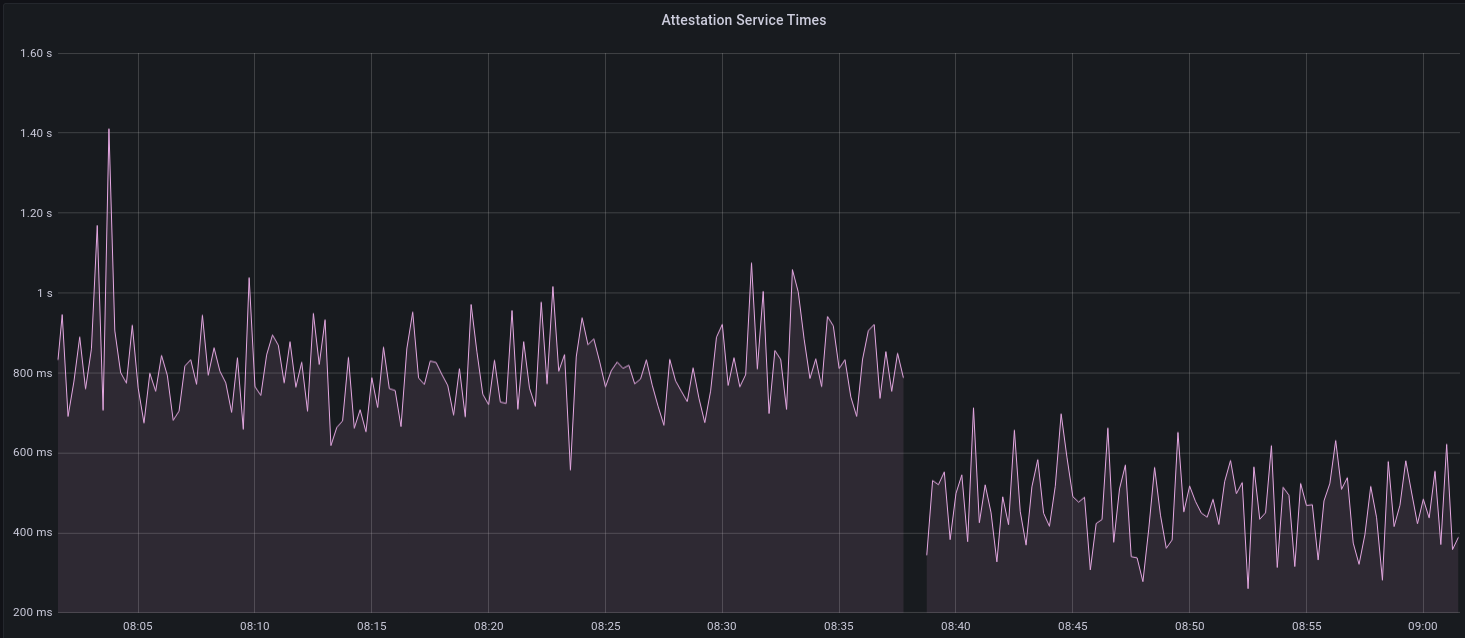

commit a73dcb7 Author: Age Manning <[email protected]> Date: Fri Sep 17 04:02:31 2021 +0000 Improved handling of IP Banning (sigp#2530) This PR in general improves the handling around peer banning. Specifically there were issues when multiple peers under a single IP connected to us after we banned the IP for poor behaviour. This PR should now handle these peers gracefully as well as make some improvements around how we previously disconnected and banned peers. The logic now goes as follows: - Once a peer gets banned, its gets registered with its known IP addresses - Once enough banned peers exist under a single IP that IP is banned - We retain connections with existing peers under this IP - Any new connections under this IP are rejected commit 64ad2af Author: Pawan Dhananjay <[email protected]> Date: Fri Sep 17 01:11:16 2021 +0000 Subscribe to altair gossip topics 2 slots before fork (sigp#2532) ## Issue Addressed N/A ## Proposed Changes Add a fork_digest to `ForkContext` only if it is set in the config. Reject gossip messages on post fork topics before the fork happens. Edit: Instead of rejecting gossip messages on post fork topics, we now subscribe to post fork topics 2 slots before the fork. Co-authored-by: Age Manning <[email protected]> commit acdcea9 Author: Age Manning <[email protected]> Date: Thu Sep 16 04:45:07 2021 +0000 Update mainnet bootnodes (sigp#2594) Sigma Prime is transitioning our mainnet bootnodes and this PR represents the transition of our bootnodes. After a few releases, old boot-nodes will be deprecated. commit 56e0615 Author: Age Manning <[email protected]> Date: Thu Sep 16 04:45:05 2021 +0000 Experimental discovery (sigp#2577) # Description A few changes have been made to discovery. In particular a custom re-write of an LRU cache which previously was read/write O(N) for all our sessions ~5k, to a more reasonable hashmap-style O(1). Further there has been reported issues in the current discv5, so added error handling to help identify the issue has been added. commit c5c7476 Author: Paul Hauner <[email protected]> Date: Thu Sep 16 03:26:33 2021 +0000 Web3Signer support for VC (sigp#2522) [EIP-3030]: https://eips.ethereum.org/EIPS/eip-3030 [Web3Signer]: https://consensys.github.io/web3signer/web3signer-eth2.html ## Issue Addressed Resolves sigp#2498 ## Proposed Changes Allows the VC to call out to a [Web3Signer] remote signer to obtain signatures. ## Additional Info ### Making Signing Functions `async` To allow remote signing, I needed to make all the signing functions `async`. This caused a bit of noise where I had to convert iterators into `for` loops. In `duties_service.rs` there was a particularly tricky case where we couldn't hold a write-lock across an `await`, so I had to first take a read-lock, then grab a write-lock. ### Move Signing from Core Executor Whilst implementing this feature, I noticed that we signing was happening on the core tokio executor. I suspect this was causing the executor to temporarily lock and occasionally trigger some HTTP timeouts (and potentially SQL pool timeouts, but I can't verify this). Since moving all signing into blocking tokio tasks, I noticed a distinct drop in the "atttestations_http_get" metric on a Prater node:  I think this graph indicates that freeing the core executor allows the VC to operate more smoothly. ### Refactor TaskExecutor I noticed that the `TaskExecutor::spawn_blocking_handle` function would fail to spawn tasks if it were unable to obtain handles to some metrics (this can happen if the same metric is defined twice). It seemed that a more sensible approach would be to keep spawning tasks, but without metrics. To that end, I refactored the function so that it would still function without metrics. There are no other changes made. ## TODO - [x] Restructure to support multiple signing methods. - [x] Add calls to remote signer from VC. - [x] Documentation - [x] Test all endpoints - [x] Test HTTPS certificate - [x] Allow adding remote signer validators via the API - [x] Add Altair support via [21.8.1-rc1](https://github.com/ConsenSys/web3signer/releases/tag/21.8.1-rc1) - [x] Create issue to start using latest version of web3signer. (See sigp#2570) ## Notes - ~~Web3Signer doesn't yet support the Altair fork for Prater. See Consensys/web3signer#423 - ~~There is not yet a release of Web3Signer which supports Altair blocks. See Consensys/web3signer#391 commit 58012f8 Author: Michael Sproul <[email protected]> Date: Wed Sep 15 00:01:18 2021 +0000 Shutdown gracefully on panic (sigp#2596) ## Proposed Changes * Modify the `TaskExecutor` so that it spawns a "monitor" future for each future spawned by `spawn` or `spawn_blocking`. This monitor future joins the handle of the child future and shuts down the executor if it detects a panic. * Enable backtraces by default by setting the environment variable `RUST_BACKTRACE`. * Spawn the `ProductionBeaconNode` on the `TaskExecutor` so that if a panic occurs during start-up it will take down the whole process. Previously we were using a raw Tokio `spawn`, but I can't see any reason not to use the executor (perhaps someone else can). ## Additional Info I considered using [`std::panic::set_hook`](https://doc.rust-lang.org/std/panic/fn.set_hook.html) to instantiate a custom panic handler, however this doesn't allow us to send a shutdown signal because `Fn` functions can't move variables (i.e. the shutdown sender) out of their environment. This also prevents it from receiving a `Logger`. Hence I decided to leave the panic handler untouched, but with backtraces turned on by default. I did a run through the code base with all the raw Tokio spawn functions disallowed by Clippy, and found only two instances where we bypass the `TaskExecutor`: the HTTP API and `InitializedValidators` in the VC. In both places we use `spawn_blocking` and handle the return value, so I figured that was OK for now. In terms of performance I think the overhead should be minimal. The monitor tasks will just get parked by the executor until their child resolves. I've checked that this covers Discv5, as the `TaskExecutor` gets injected into Discv5 here: https://github.com/sigp/lighthouse/blob/f9bba92db3468321b28ddd9010e26b359f88bafe/beacon_node/src/lib.rs#L125-L126 commit 95b1713 Author: Age Manning <[email protected]> Date: Tue Sep 14 08:28:35 2021 +0000 Reduce network debug noise (sigp#2593) The identify network debug logs can get quite noisy and are unnecessary to print on every request/response. This PR reduces debug noise by only printing messages for identify messages that offer some new information. commit 4755d4b Author: Wink Saville <[email protected]> Date: Tue Sep 14 06:48:26 2021 +0000 Update sloggers to v2.0.2 (sigp#2588) fixes sigp#2584 commit f9bba92 Author: Paul Hauner <[email protected]> Date: Mon Sep 13 23:01:19 2021 +0000 v1.5.2 (sigp#2595) ## Issue Addressed NA ## Proposed Changes Version bump ## Additional Info Please do not `bors` without my approval, I am still testing. commit e4ed42a Author: Squirrel <[email protected]> Date: Sun Sep 12 23:55:20 2021 +0000 Fix nightly bump num bigint (sigp#2591) ## Issue Addressed Builds again on latest nightly ## Proposed Changes Break was caused by: rust-lang/rust#88581 commit f7dd24c Author: Mason Stallmo <[email protected]> Date: Sat Sep 11 23:56:16 2021 +0000 Add quoted u64/u64_vec to SyncCommitteeSubscription (sigp#2589) ## Issue Addressed Resolves sigp#2582 ## Proposed Changes Use `quoted_u64` and `quoted_u64_vec` custom serde deserializers from `eth2_serde_utils` to support the proper Eth2.0 API spec for `/eth/v1/validator/sync_committee_subscriptions` ## Additional Info N/A commit 46cd67d Author: Mason Stallmo <[email protected]> Date: Sat Sep 11 06:07:20 2021 +0000 Case insensitive match for ForkName (sigp#2587) ## Issue Addressed sigp#2583 ## Proposed Changes Case insensitive match on `fork_name` when calling `ForkName::from_str` ## Additional Info N/A

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

- kdkslL

Issue Addressed

N/A

Proposed Changes

Add a fork_digest to

ForkContextonly if it is set in the config.Reject gossip messages on post fork topics before the fork happens.

Edit: Instead of rejecting gossip messages on post fork topics, we now subscribe to post fork topics 2 slots before the fork.