-

Notifications

You must be signed in to change notification settings - Fork 890

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Memory leak wherever promises supplied to Promise.race() don't settle

#3069

Labels

bug

Something isn't working

Comments

|

This problem is

|

This was referenced Aug 7, 2024

iteratorState and onErrorPromise.race() don't settle

steveluscher

added a commit

that referenced

this issue

Aug 7, 2024

# Summary If a member of `Promise.race` never settles, every promise in the race will be retained indefinitely. This causes memory leaks. See nodejs/node#17469 for much more information. In this PR we fork the excellent work of @brainkim, @danfuzz, and @szakharchenko; a ‘safe’ version of `race` that ensures that each member of the race settles when the winner settles. # Test Plan See next PR in this stack Addresses #3069.

steveluscher

added a commit

that referenced

this issue

Aug 7, 2024

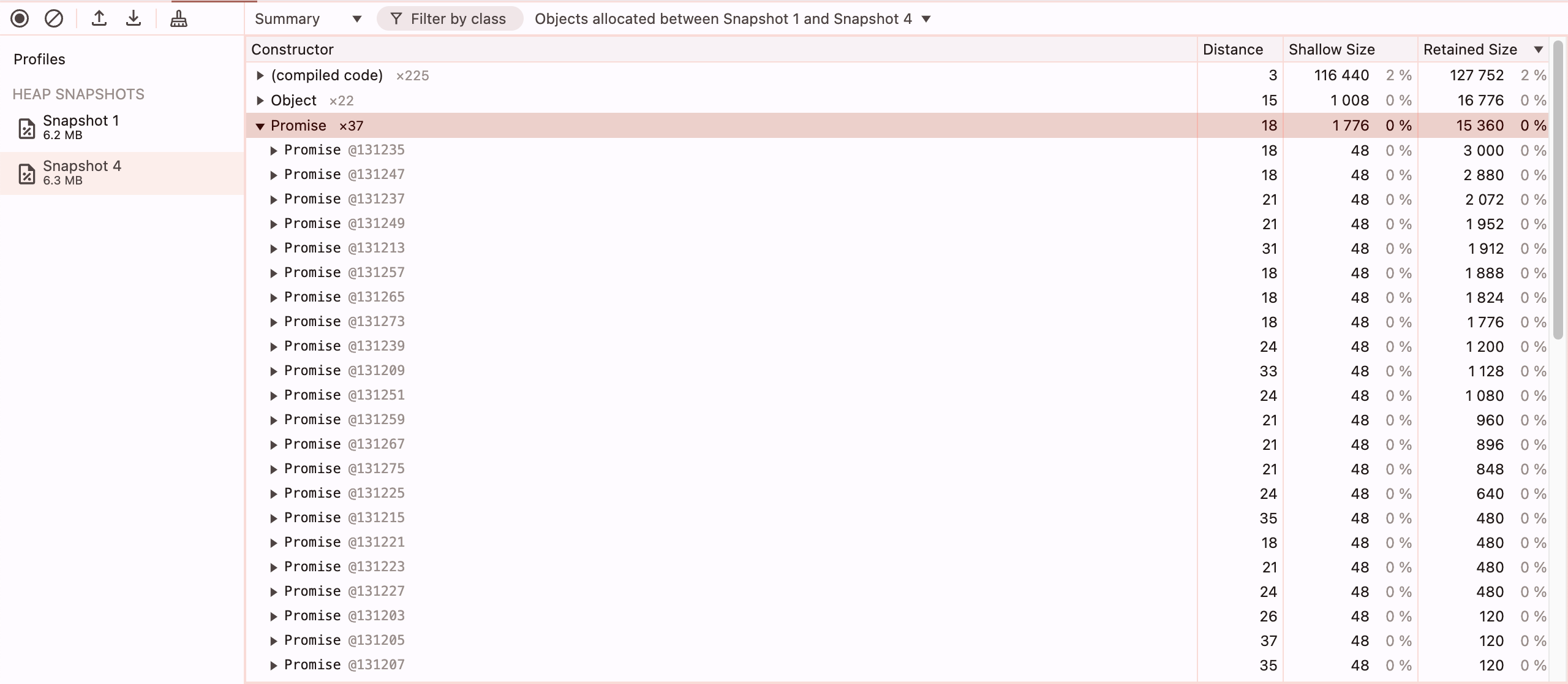

…on (#3072) # Summary Nearly everything in this library that raced two or more promises together would leak memory if some of those promises never resolved. One particularly bad case of this was in the subscriptions transport, where an ‘abort promise’ that may never actually abort would be raced with the promise for the next notification message. Unless the abort was eventually called, every promise would be retained forever and memory use would grow unbounded, eventually crashing the process. # Test Plan Given this test script: ```ts // test.mjs import { createSolanaRpcSubscriptions } from "@solana/rpc-subscriptions"; const rpcSubscriptions = createSolanaRpcSubscriptions("ws://127.0.0.1:8900"); const slotMessages = await rpcSubscriptions.slotNotifications().subscribe({ // Never aborted. abortSignal: AbortSignal.any([]), }); for await (const _n of slotMessages); console.log("bye"); ``` Run… ```shell pnpm --inspect node test.mjs ``` Then… 1. Open chrome://inspect 2. Open the ‘Memory’ tab 3. Take two heap snapshots far apart in time, clicking ‘collect garbage’ before the second one 4. Select ‘objects allocated between Snapshot 1 and Snapshot 2’ ## Before Notice that promises just keep accumulating, and the pool never stops growing.  ## After Notice that only a handful of promises are allocated, and they stay constant at ~37 promises.  A special thank you to @WilfredAlmeida for shaking down the RC version of web3.js 2.0 and finding this leak in a long-running process. Closes #3069.

|

Because there has been no activity on this issue for 7 days since it was closed, it has been automatically locked. Please open a new issue if it requires a follow up. |

Sign up for free

to subscribe to this conversation on GitHub.

Already have an account?

Sign in.

Repro:

node --inspectThe text was updated successfully, but these errors were encountered: