Semantic Kernel is an SDK that integrates Large Language Models (LLMs) from providers like OpenAI, Azure OpenAI, and Hugging Face with conventional programming languages like C#, Python, and Java. Semantic Kernel achieves this by allowing you to define plugins that can be chained together in just a few lines of code.

What makes Semantic Kernel special, however, is its ability to automatically orchestrate plugins with AI. With Semantic Kernel planners, you can ask an LLM to generate a plan that achieves a user's unique goal. Afterwards, Semantic Kernel will execute the plan for the user.
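
The plan-then-execute flow described above can be sketched in plain Python. This is only an illustration of the idea, not the Semantic Kernel API: the `PLUGINS` registry, `stub_planner`, and `execute_plan` are hypothetical names, and the planner is hard-coded so the example runs without a model or an API key.

```python
from typing import Callable, Dict, List

# Hypothetical plugin registry: name -> plain Python function.
# This is NOT the Semantic Kernel API, only an illustration of the idea.
PLUGINS: Dict[str, Callable[[str], str]] = {
    "summarize": lambda text: text.split(".")[0].strip() + ".",
    "shout": lambda text: text.upper(),
}


def stub_planner(goal: str) -> List[str]:
    """Stand-in for the LLM call that would turn a goal into a plan.

    A real planner would send the goal and the plugin descriptions to a
    model; here the plan is hard-coded so the example runs offline.
    """
    return ["summarize", "shout"]


def execute_plan(plan: List[str], user_input: str) -> str:
    """Run each step in order, feeding each output into the next step."""
    result = user_input
    for step in plan:
        result = PLUGINS[step](result)
    return result


plan = stub_planner("Give me a loud one-sentence summary")
output = execute_plan(plan, "Plugins wrap code. Planners order them.")
print(output)
```

The key design point is that the plan is data (a list of plugin names), so whatever produces it, a model or a stub, stays separate from the code that runs it.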

It provides:

- abstractions for AI services (such as chat, text to images, audio to text, etc.) and memory stores

- implementations of those abstractions for services from OpenAI, Azure OpenAI, Hugging Face, local models, and more, and for a multitude of vector databases, such as those from Chroma, Qdrant, Milvus, and Azure

- a common representation for plugins, which can then be orchestrated automatically by AI

- the ability to create such plugins from a multitude of sources, including from OpenAPI specifications, prompts, and arbitrary code written in the target language

- extensible support for prompt management and rendering, including built-in handling of common formats like Handlebars and Liquid

- and a wealth of functionality layered on top of these abstractions, such as filters for responsible AI, dependency injection integration, and more.
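
To make the idea of filters in the list above more concrete, here is a minimal sketch of hooks that wrap a function call. It assumes nothing about how Semantic Kernel implements filters; every name (`with_filters`, `redact_filter`, `echo_plugin`, and so on) is hypothetical, and the "plugin" is a stand-in that makes no model call.

```python
from typing import Callable, List

# Hypothetical names throughout; this is not the Semantic Kernel API.
Function = Callable[[str], str]
Filter = Callable[[str, Function], str]


def redact_filter(argument: str, next_call: Function) -> str:
    """Example filter: mask a sensitive token before the function sees it."""
    return next_call(argument.replace("secret", "[redacted]"))


def logging_filter_factory(log: List[str]) -> Filter:
    """Build a filter that records the input and output of each call."""
    def logging_filter(argument: str, next_call: Function) -> str:
        log.append(f"in: {argument}")
        result = next_call(argument)
        log.append(f"out: {result}")
        return result
    return logging_filter


def with_filters(function: Function, filters: List[Filter]) -> Function:
    """Wrap `function` so the filters run in list order, outermost first."""
    wrapped = function
    for flt in reversed(filters):
        # Bind the current values so each layer calls the one below it.
        wrapped = (lambda f, inner: lambda arg: f(arg, inner))(flt, wrapped)
    return wrapped


def echo_plugin(text: str) -> str:
    """Stand-in for a plugin function or a model call."""
    return f"echo: {text}"


audit_log: List[str] = []
guarded = with_filters(echo_plugin, [logging_filter_factory(audit_log), redact_filter])
answer = guarded("my secret plan")
print(answer)
print(audit_log)
```

Because each filter receives the next callable in the chain, it can inspect or change the input, skip the call entirely, or post-process the result, which is the general shape that makes such hooks useful for auditing and content checks.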

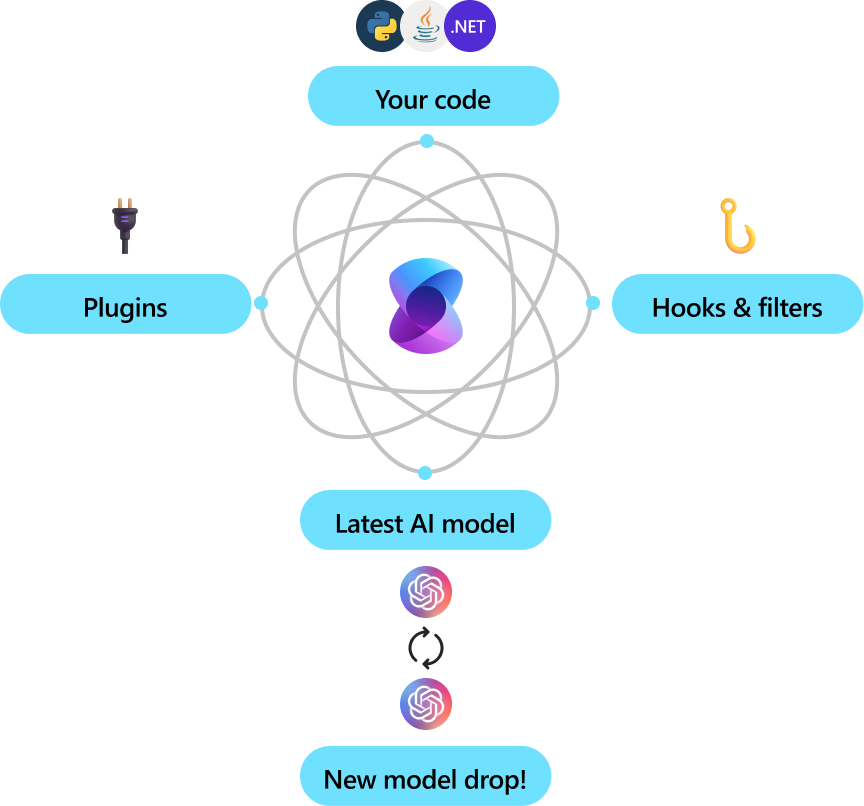

Enterprises use Semantic Kernel for its flexibility, modularity, and observability. It is backed by security-enhancing capabilities such as telemetry support, hooks, and filters, so you can feel confident you're delivering responsible AI solutions at scale. Semantic Kernel was designed to be future-proof: it connects your code to the latest AI models and evolves with the technology as it advances. When new models are released, you can swap them in without rewriting your entire codebase.
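
As a rough illustration of why swapping models does not require rewriting application code, the sketch below puts two stand-in models behind one small interface. The `ChatService` protocol, `ModelA`, `ModelB`, and `greet_user` are hypothetical names invented for this example; they are not Semantic Kernel types, and no network calls are made.

```python
from typing import Protocol


class ChatService(Protocol):
    """Hypothetical abstraction: anything that can answer a prompt."""

    def complete(self, prompt: str) -> str: ...


class ModelA:
    """Stand-in for one provider's model (no network calls are made)."""

    def complete(self, prompt: str) -> str:
        return f"[model-a] {prompt}"


class ModelB:
    """Stand-in for a newer model with the same interface."""

    def complete(self, prompt: str) -> str:
        return f"[model-b] {prompt}"


def greet_user(service: ChatService, name: str) -> str:
    """Application code depends only on the abstraction, not on a model."""
    return service.complete(f"Say hello to {name}")


first = greet_user(ModelA(), "Ada")
second = greet_user(ModelB(), "Ada")
print(first)
print(second)
```

Only the object passed to `greet_user` changes between the two calls; the application function itself is untouched.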

The Semantic Kernel SDK is available in C#, Python, and Java. To get started, choose your preferred language below. See the Feature Matrix for a breakdown of feature parity between our currently supported languages.

The quickest way to get started with the basics is to get an API key from either OpenAI or Azure OpenAI and to run one of the C#, Python, or Java console applications or scripts below.

- Go to the Quick start page here and follow the steps to dive in.

- After installing the SDK, follow the detailed steps and code to write your first console app.

- Go to the Quick start page here and follow the steps to dive in.

- You'll need to ensure that you toggle to C# in the Choose a programming language table at the top of the page.

The Java code is in the semantic-kernel-java repository. See semantic-kernel-java build for instructions on how to build and run the Java code.

Please file Java Semantic Kernel specific issues in the semantic-kernel-java repository.

The fastest way to learn how to use Semantic Kernel is with our C# and Python Jupyter notebooks. These notebooks demonstrate how to use Semantic Kernel with code snippets that you can run with a push of a button.

Once you've finished the getting started notebooks, you can then check out the main walkthroughs on our Learn site. Each sample comes with a completed C# and Python project that you can run locally.

Finally, refer to our API references for more details on the C# and Python APIs:

- C# API reference

- Python API reference

- Java API reference (coming soon)

The Semantic Kernel extension for Visual Studio Code makes it easy to design and test semantic functions. The extension provides an interface for designing semantic functions and allows you to test them with the push of a button with your existing models and data.

We welcome your contributions and suggestions to the SK community! One of the easiest ways to participate is to engage in discussions in the GitHub repository. Bug reports and fixes are welcome!

For new features, components, or extensions, please open an issue and discuss it with us before sending a PR. This helps avoid a rejected PR, since we might be taking the core in a different direction, and it lets us consider the impact on the larger ecosystem.

To learn more and get started:

- Read the documentation

- Learn how to contribute to the project

- Ask questions in the GitHub discussions

- Ask questions in the Discord community

- Follow the team on our blog

This project has adopted the Microsoft Open Source Code of Conduct. For more information see the Code of Conduct FAQ or contact [email protected] with any additional questions or comments.

Copyright (c) Microsoft Corporation. All rights reserved.

Licensed under the MIT license.