v3.0

This release implements nn.Hardswish() activations in Conv() modules, which increases mAP for all models at the expense of about 10% in inference speed. Training speeds are not significantly affected, though CUDA memory requirements increase by about 10%. Both training from scratch and finetuning benefit from this change. The smallest models benefit the most from the Hardswish() activations, with increases of +0.9/+0.8/+0.7/+0.2 mAP@0.5:0.95 for YOLOv5s/m/l/x.
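For readers unfamiliar with the two activations, the sketch below writes out their standard scalar definitions in plain Python. The function names hardswish and leaky_relu are illustrative helpers, not code from this repository; in the models the PyTorch modules nn.Hardswish() and nn.LeakyReLU(0.1) apply the same formulas elementwise.

```python
def hardswish(x: float) -> float:
    """Hardswish: x * ReLU6(x + 3) / 6."""
    return x * min(max(x + 3.0, 0.0), 6.0) / 6.0


def leaky_relu(x: float, negative_slope: float = 0.1) -> float:
    """LeakyReLU: identity for x >= 0, scaled by negative_slope below zero."""
    return x if x >= 0.0 else negative_slope * x


# Compare the two activations on a few sample inputs.
for x in (-4.0, -1.0, 0.0, 1.0, 4.0):
    print(f"x={x:+.1f}  hardswish={hardswish(x):+.4f}  leaky_relu={leaky_relu(x):+.4f}")
```

Hardswish is zero for inputs at or below -3 and the identity at or above +3, with a smooth quadratic segment in between, whereas LeakyReLU is piecewise linear with a kink at zero.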

All mAP values in our README are now reported at --img-size 640 (v2.0 reported at 672, and v1.0 reported at 736), so we've succeeded in increasing mAP while reducing the required --img-size :)

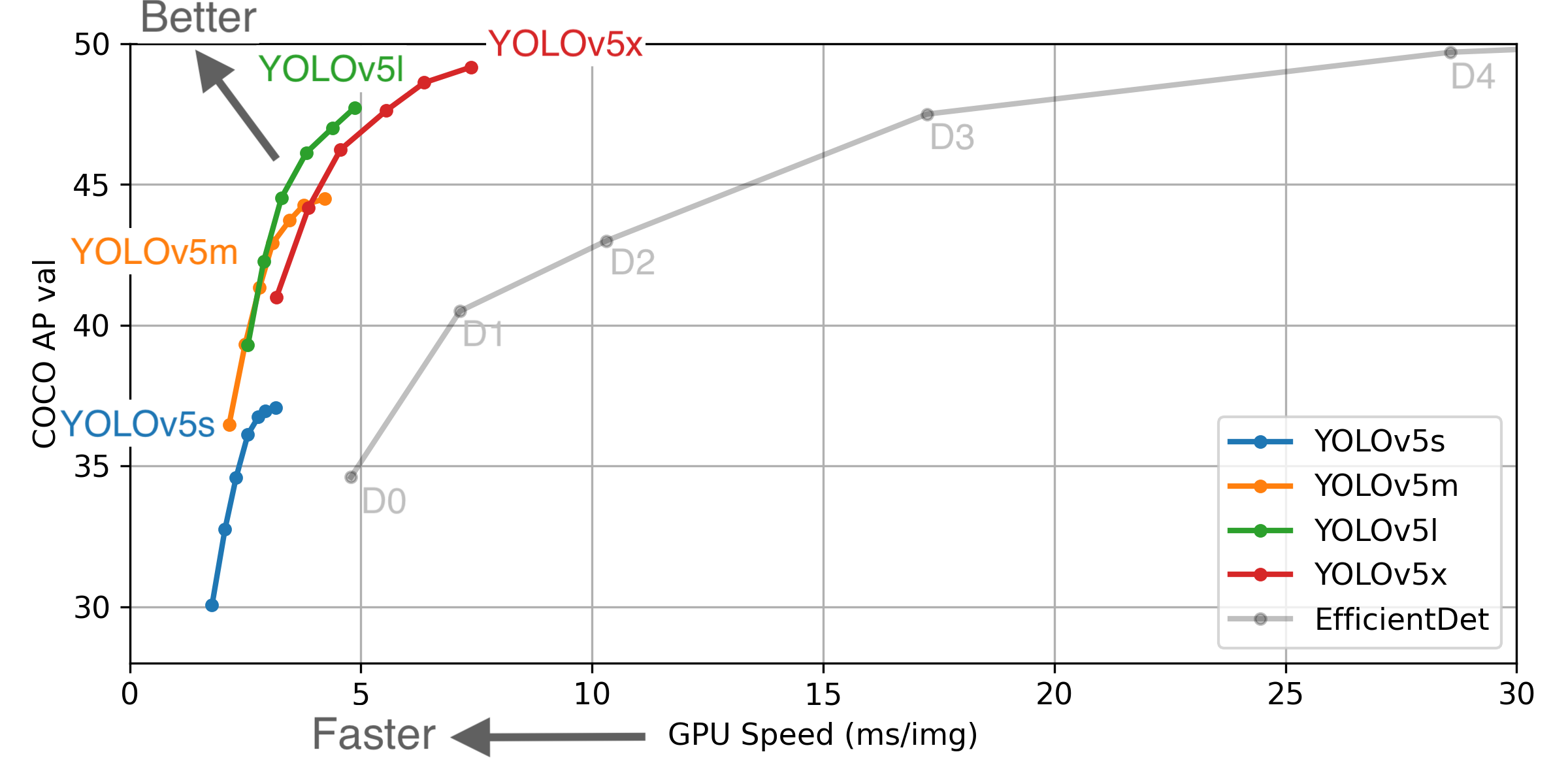

We've also listed YOLOv5x Test Time Augmentation (TTA) mAP and speeds for v3.0 in our README table for the first time (and for v2.0 below). Best results are YOLOv5x with TTA at 50.8 mAP@0.5:0.95. We've also updated EfficientDet results in our comparison plot to reflect recent improvements in the google/automl repo.

Breaking Changes

- This release does not contain breaking changes.

- This release is only backwards compatible with v2.0 models trained with torch>=1.6.

Bug Fixes

- Hyperparameter evolution fixed, tutorial added (#607)

Added Functionality

- PyTorch 1.6 compatible. torch>=1.6 required (43a616a)

- PyTorch 1.6 native Automatic Mixed Precision (AMP) replaces NVIDIA Apex AMP (#573)

- nn.Hardswish() activations replace nn.LeakyReLU(0.1) in base convolution module models.Conv()

- Dataset Autodownload feature added (#685)

- Model Autodownload improved (#711)

- Layer freezing code added (#679)

- TensorRT export tutorial added (#623)
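As an illustration of what layer freezing typically means in PyTorch, here is a minimal sketch, assuming a toy nn.Sequential model and a hypothetical freeze_prefixes list. It is not the code added in #679; it only shows the general technique of disabling gradients for parameters selected by name.

```python
import torch.nn as nn

# Toy stand-in model (an assumption for illustration, not a YOLOv5 model).
model = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),
    nn.Hardswish(),
    nn.Conv2d(8, 16, kernel_size=3, padding=1),
)

# Hypothetical list of parameter-name prefixes to freeze.
freeze_prefixes = ["0."]

# Disable gradient computation for every parameter whose name matches a prefix.
for name, param in model.named_parameters():
    if any(name.startswith(prefix) for prefix in freeze_prefixes):
        param.requires_grad = False

# Parameters that will still be updated during training.
trainable = [name for name, param in model.named_parameters() if param.requires_grad]
print(trainable)
```

Frozen parameters keep their pretrained values during finetuning, which can reduce memory use and training time when only the later layers need to adapt.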

** GPU Speed measures end-to-end time per image averaged over 5000 COCO val2017 images using a V100 GPU with batch size 32, and includes image preprocessing, PyTorch FP16 inference, postprocessing and NMS. EfficientDet data from [google/automl](https://github.com/google/automl) at batch size 8.

- August 13, 2020: v3.0 release: nn.Hardswish() activations, data autodownload, native AMP.

- July 23, 2020: v2.0 release: improved model definition, training and mAP.

- June 22, 2020: PANet updates: new heads, reduced parameters, improved speed and mAP 364fcfd.

- June 19, 2020: FP16 as new default for smaller checkpoints and faster inference d4c6674.

- June 9, 2020: CSP updates: improved speed, size, and accuracy (credit to @WongKinYiu for CSP).

- May 27, 2020: Public release. YOLOv5 models are SOTA among all known YOLO implementations.

- April 1, 2020: Start development of future compound-scaled YOLOv3/YOLOv4-based PyTorch models.

Pretrained Checkpoints

v3.0 with nn.Hardswish()

| Model | APval | APtest | AP50 | SpeedGPU | FPSGPU | params | FLOPS |
|---|---|---|---|---|---|---|---|
| YOLOv5s | 37.0 | 37.0 | 56.2 | 2.4ms | 476 | 7.5M | 13.2B |
| YOLOv5m | 44.3 | 44.3 | 63.2 | 3.4ms | 333 | 21.8M | 39.4B |
| YOLOv5l | 47.7 | 47.7 | 66.5 | 4.4ms | 256 | 47.8M | 88.1B |
| YOLOv5x | 49.2 | 49.2 | 67.7 | 6.9ms | 164 | 89.0M | 166.4B |
| YOLOv5x + TTA | 50.8 | 50.8 | 68.9 | 25.5ms | 39 | 89.0M | 354.3B |
| YOLOv3-SPP | 45.6 | 45.5 | 65.2 | 4.5ms | 222 | 63.0M | 118.0B |

** APtest denotes COCO test-dev2017 server results, all other AP results in the table denote val2017 accuracy.

** All AP numbers are for single-model single-scale without ensemble or test-time augmentation, except for the YOLOv5x + TTA row. Reproduce by python test.py --data coco.yaml --img 640 --conf 0.001. Test Time Augmentation (TTA) runs at 3 image sizes. Reproduce TTA results by python test.py --data coco.yaml --img 832 --augment

** SpeedGPU measures end-to-end time per image averaged over 5000 COCO val2017 images using a GCP n1-standard-16 instance with one V100 GPU, and includes image preprocessing, PyTorch FP16 image inference at --batch-size 32 --img-size 640, postprocessing and NMS. Average NMS time included in this chart is 1-2ms/img. Reproduce by python test.py --data coco.yaml --img 640 --conf 0.1
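The table reports both per-image latency (SpeedGPU) and throughput (FPSGPU). As a rough sketch, assuming throughput is simply the reciprocal of latency, the conversion looks like this. fps_from_latency_ms is a hypothetical helper, and several FPSGPU entries in the table do not match this formula exactly, so it should not be read as how those values were produced.

```python
def fps_from_latency_ms(latency_ms: float) -> int:
    """Approximate frames per second from per-image latency in milliseconds."""
    if latency_ms <= 0:
        raise ValueError("latency_ms must be positive")
    return round(1000.0 / latency_ms)


# Latencies taken from the SpeedGPU column of the table above.
for name, latency_ms in (("YOLOv5x + TTA", 25.5), ("YOLOv3-SPP", 4.5)):
    print(f"{name}: {latency_ms} ms/img is about {fps_from_latency_ms(latency_ms)} FPS")
```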

** All checkpoints are trained to 300 epochs with default settings and hyperparameters (no autoaugmentation).

v2.0 with nn.LeakyReLU(0.1)

| Model | APval | APtest | AP50 | SpeedGPU | FPSGPU | params | FLOPS |
|---|---|---|---|---|---|---|---|
| YOLOv5s | 36.1 | 36.1 | 55.3 | 2.2ms | 476 | 7.5M | 13.2B |
| YOLOv5m | 43.5 | 43.5 | 62.5 | 3.2ms | 333 | 21.8M | 39.4B |
| YOLOv5l | 47.0 | 47.1 | 65.6 | 4.1ms | 256 | 47.8M | 88.1B |
| YOLOv5x | 49.0 | 49.0 | 67.4 | 6.4ms | 164 | 89.0M | 166.4B |
| YOLOv5x + TTA | 50.4 | 50.4 | 68.5 | 23.4ms | 43 | 89.0M | 354.3B |
| YOLOv3-SPP | 45.6 | 45.5 | 65.2 | 4.5ms | 222 | 63.0M | 118.0B |

** APtest denotes COCO test-dev2017 server results, all other AP results in the table denote val2017 accuracy.

** All AP numbers are for single-model single-scale without ensemble or test-time augmentation. Reproduce by python test.py --data coco.yaml --img 672 --conf 0.001

** SpeedGPU measures end-to-end time per image averaged over 5000 COCO val2017 images using a GCP n1-standard-16 instance with one V100 GPU, and includes image preprocessing, PyTorch FP16 image inference at --batch-size 32 --img-size 640, postprocessing and NMS. Average NMS time included in this chart is 1-2ms/img. Reproduce by python test.py --data coco.yaml --img 640 --conf 0.1

** All checkpoints are trained to 300 epochs with default settings and hyperparameters (no autoaugmentation).